Why Your Data Stack Won't Last - And How To Build Data Infrastructure That Will

As a consultant, I have been called in to review and, in many cases, replace dozens of half-finished, abandoned, and sometimes forgotten data infrastructure projects.

In a few cases, the data infrastructure just needs a little tweaking to operate effectively. Other times, the project is so incomplete, or so lacking in a central design, that the best option is to replace the old system.

Trust me, I’d love it if I could come into a project, change a few lines of code, and have everything just work. However, many projects are riddled with unclear design decisions and resume-driven development that were never rooted in good planning.

Of course, business stakeholders may also push to get things done quickly, forcing data teams to take on tech debt that never gets paid down. Don’t get me wrong, you want to get things done and move projects forward. But taking on technical debt is a decision that needs to be made intentionally. Otherwise, just as with resume-driven development, your data infrastructure might end up being replaced.

This raises the question:

How do you ensure the data infrastructure you’re building doesn’t get replaced as soon as you leave?

In this article, I want to dive into the problems I often run into that force me to replace existing data infrastructure, and how you can avoid them.

So let’s dive in.

Resume And Vendor Driven Development

When you design a new system, it shouldn’t be driven by a vendor or your resume. Don’t get me wrong, if you have a solid, reliable base for your data infrastructure, testing new tools with the occasional proof of concept (POC) isn’t a bad idea.

The problem I find is that many data teams are thrown into designing data infrastructure with little prior experience setting one up. We all start with no experience, of course. But without it, it can be difficult to assess the nuances of different tools and designs.

As a result, many teams look up articles on what big tech companies are doing or what a vendor recommends. This can lead to design choices that don’t fit your use case.

A former analytics manager I was recently talking to made a great point: it’s easy to pick an ETL or data pipeline tool that sounds good on paper. The account executive and sales engineer will put together the perfect pitch and POC.

But eight months into the project, you hit a key blocker that the vendor either didn’t disclose or that could only be discovered through experience. Honestly, the vendor might not have even known about the limitation.

A similar point can be made when you’re trying to design your overall architecture. If you try to follow what a large tech company is doing, it might be a bad fit for your company.

You need to be very intentional with your core design.

How To Avoid Resume-Driven Development

Talk to people who have used the vendor - Most data engineers are happy to share their thoughts on various tools. So try to find a mix of data engineers and architects you can ask about their experience with the tool. Some will tell you flat out not to use it, while others will sing its praises.

Read vendor-agnostic sources - These can be research papers, non-affiliated consultants, books, etc. Just take everything you read with a grain of salt!

Start with the business goals - It can be tempting to find the right job for the tool. After all, we all want to put the current “it” tool on our resume. However, when you start developing data infrastructure, your first goal should be to work with the business to understand the outcomes it actually wants. Then take a measured approach to finding the right tools or designing the right system.

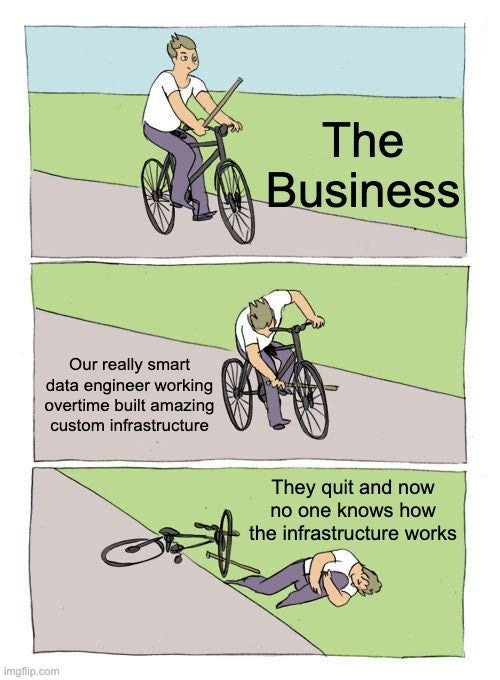

Key Person Dependency Issues

One of the constant issues many companies face, especially smaller ones, is key person dependency. It shows up as a single key developer, or perhaps a small team, building data infrastructure that future teams will find difficult to maintain or even understand.

This could be because the prior team didn’t document their design, or only documented it for themselves.

Sure, they have built data infrastructure that works and solves the business’s current problems. They maintain it, and no one asks questions.

Perhaps no one realizes that one of the team members has to wake up at 6 AM every morning to check and ensure all the reports and tables have been created.

That all works until the day they leave.

The data infrastructure may hold for a little while, but eventually problems arise. The company may hire a replacement data team, but that team may eventually hit the limit of what it can actually support.

This was a major issue for many of my past clients. Around a third of the clients who reached out for my services over the past few years did so because of it.

In one case, I came in and found a Data Vault project that wasn’t being used. It actually had pretty good documentation. However, the team had taken so long without completing the project that they were dismissed. Worse, because the project was never completed, several analysts had to work weekends to create key reports for the board.

In this case, we massively simplified what they had built, replacing it with a data warehouse that a smaller team could maintain.

And, of course, we made sure the reporting was automated so the analysts could stop working weekends.

How To Avoid Key Person Dependency Issues

Documentation - Make sure the documentation you create isn’t just written for you, but for someone who has never seen your code before. In particular, highlight key decisions, such as why you picked certain tools and why you implemented logic in certain places.

Cross-training (where it makes sense) - For larger teams, consider cross-training. This can also include semi-technical employees from other teams. I am not saying they will or should replace the engineers. But as a consultant, I’ve found it very helpful to walk in and talk to marketing or operations analysts who have some understanding of why the system was developed the way it was.

Building Data Infrastructure Without The Business In Mind

In several of the projects I have come into, the communication between the stakeholders and the data teams had either broken down or never existed.

The data team had gone off for several months without delivering anything the business could review. By the time stakeholders saw the results, it just wasn’t what they were looking for.

Your stakeholders need to be involved in what you’re building. Let me give an example. One client I worked with had a data warehouse. It technically functioned.

But within a few days, I realized no one was using the 2-3 dashboards that were being supported by the data warehouse.

Why?

There were several reasons, but one was that it was never actually built with the stakeholders.

So they didn’t find the end results helpful.

This topic is likely what inspired this article. So much data infrastructure is built in a silo, disconnected from the business, and that eventually leads to failed projects and infrastructure that doesn’t last.

How To Build With the Business

Start with business outcomes - I said something similar above, but it’s worth repeating. You don’t want to build infrastructure for infrastructure’s sake. So before starting a project, be clear about how you’re trying to improve the business.

Keep the business in the loop - It can be tempting to skip meetings where you review dashboards or analyze metrics with stakeholders. After all, you have to get work done. The problem arises when, after 3-6 months of development, you finally show off your end product and it isn’t even close to what the stakeholders wanted.

Building Purposeful Infrastructure

Your data infrastructure doesn’t have to be a mess, or, more precisely, it doesn’t have to be difficult for a future developer to understand what’s going on under the hood. One of my best experiences learning to program was at a company where I opened up the code base and could understand what was going on.

The abstractions weren’t so deep that I couldn’t trace them, and there were clear standards implemented in naming conventions.

Honestly, it was just a lot of little things done right.

A well-thought-out and simple system.

Naming that people can understand.

And documentation that isn’t filled with acronyms and assumptions.

That can help ensure that years into the future, your data infrastructure is still around.

With that, I want to say thanks for reading!

Join My Data Engineering And Data Science Discord

If you’re looking to talk more about data engineering, data science, and breaking into your first job, or to find other like-minded data specialists, then you should join the Seattle Data Guy Discord!

We are now well over 7000 members!

Join My Technical Consultants Community

If you’re a data consultant or considering becoming one then you should join the Technical Freelancer Community! We have nearly 700 members!

You’ll find plenty of free resources to expedite your journey as a technical consultant, as well as other consultants you can talk to about any questions you have!

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and companies’ tech blogs, and wanted to share some of my favorites!

Enabling Security for Hadoop Data Lake on Google Cloud Storage

As part of Uber’s cloud journey, we are migrating the on-prem Apache Hadoop® based data lake along with analytical and machine learning workloads to GCP™ infrastructure platform. The strategy involves replacing the storage layer, HDFS, with GCS Object Storage (PaaS) and running the rest of the tech stack (YARN, Apache Hive™, Apache Spark™, Presto®, etc.) on GCP Compute Engine (IaaS).

A typical cloud adoption strategy involves using cloud-native components and integrating existing IAM with cloud IAM (e.g., federation, identity sync, etc.) (Figure 1.ii). Our strategy is somewhat unique: we continue to leverage part of the existing stack as is (except HDFS) and integrate with GCS…

Organizational Anti-Patterns For Data Teams

As companies continue to implement data and automated processes into their workflows and strategies, they unavoidably set up processes that, in the short run, help them develop faster but often have negative impacts in the long run.

End Of Day 138

Thanks for checking out our community. We put out 3-4 newsletters a week discussing data, tech, and start-ups.
