Data Sharing in the Real World: Why SFTP Remains Essential for Companies

Data Engineering Basics

Every day, companies share data sets of users, patient claims, financial transactions, and more with each other.

Most people might assume this would be via API. However, companies have been sharing data for decades using CSVs, TSVs, positional files, and other formats you might not be familiar with. Not via API, but SFTP.

I know I wouldn’t have guessed that’s how companies send data back and forth when I was in school.

If you’ve been in the industry for a while, you’ve probably come across automated SFTP jobs that do just that. You’ve also likely had to encrypt or decrypt a CSV and had to interpret a schema file with parsing instructions that—somehow—are always a bit off the first time.

Now, sure, we all want to build real-time systems that use LLMs and other flashy new tools and solutions. Sometimes, that’s not what is called for.

After all, companies of all sizes—even tech giants like Facebook and Airbnb—still use SFTP to share critical information for analytical purposes(as well as operational). So, let’s dig into what SFTP is and how you will likely work with it.

What Is SFTP?

For anyone unfamiliar, here’s a quick overview: SFTP stands for Secure File Transfer Protocol, a method companies use to safely send and receive files over the internet. Think of it as a secure digital mail service, where data files—like documents or CSVs—are the "packages."

SFTP is commonly used to transfer data files such as CSVs, TSVs, and pipe-delimited files to a server. From there, a job triggered by a scheduler or specific event retrieves the file and loads it into an external or internal database.

Why Do Companies Use SFTP?

Extracting data from APIs isn’t always the best option. Setting up an API takes time and resources to configure and maintain, while a basic SFTP job is considerably more straightforward to get up and running.

Here are just a few examples where I have seen SFTP jobs set up:

Aggregating and analyzing data and creating reporting on data such as healthcare claims.

Partnering with another organization to deliver a service to customers.

Coordinating with a third party to send physical mail (more common than you might think).

Sending payroll files from stores or franchises to a centralized payroll system.

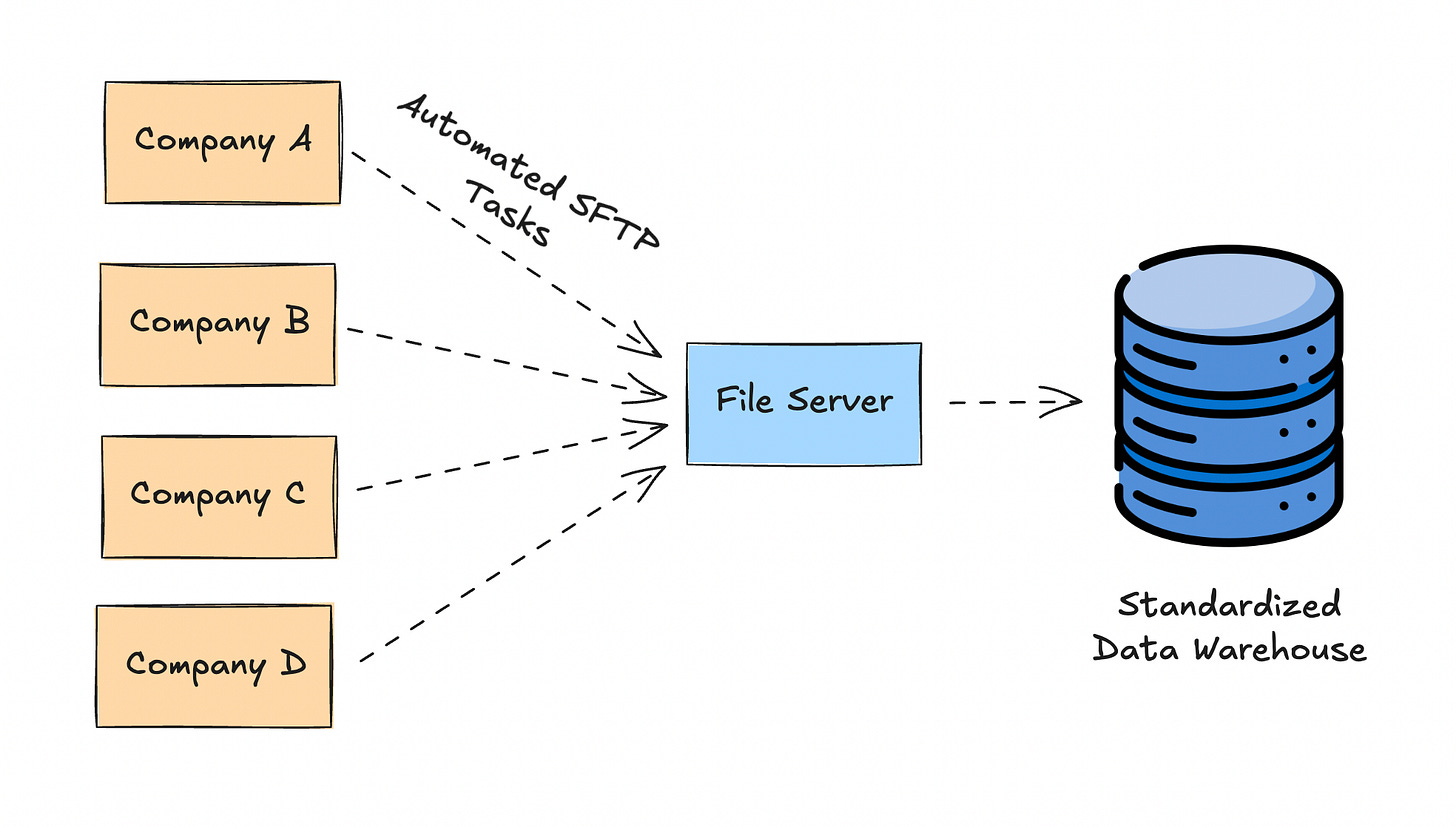

In fact, I have now worked with several companies that use SFTP because they centralize similar data sets from dozens, if not hundreds, of companies to create reporting and analytics. Essentially, they act as a central hub, as pictured below.

From there, the various files from the companies would be processed into their similar entities. For example, insurance claims data is often broken down into patients, eligibility, claims and RX at the very least. This allows you to start to build data products that range from algorithms to dashboards.

One of the points that is implicit here is that unlike the company sending the data, the aggregator has the benefit of now having multiple companies data on the same entities. Meaning you can provide a very unique value add which is bench marking. Because you can now help your customers of your data product better understand where they stand compared to their competition(assuming they are open to their data being used that way of course).

All of which starts with getting the data via SFTP.

But what do you need to consider when setting up SFTP jobs?

Setting Up Your SFTP Job

Extracting data from files via SFTP isn’t terribly complex. In fact, some might consider it boring and not as exciting as building data systems that can manage exabytes of data. But there are plenty of companies that will need this work done, regardless of whether you like it or not.

Which is why I wanted to write about this topic.

So, to securely manage SFTP jobs, there are a few essential elements to keep in mind—starting with user authentication and encryption.

Authentication

Just like with APIs, user authentication is essential when working with SFTP. Here are two common methods (along with whitelisting):

SSH Keys: SFTP typically relies on SSH keys for user authentication. Instead of using a password, you set up a public-private key pair: the server holds the public key, while the client keeps the private key securely. When you need to connect to the server you use the private key to authenticate.

Password Authentication: This is exactly what you’d think. A username and a password.

File Encryption

Beyond authentication, many files transferred via SFTP are also encrypted using PGP (Pretty Good Privacy) Encryption. This is especially true when data has any form of PII, PHI or other forms of data that needs to remain secure.

There are a few ways a file might be encrypted, so it’s best to establish a standard approach with your external partners. Otherwise, you may find yourself juggling multiple encryption methods, which can be tedious when you write out your SFTP jobs (as I learned firsthand at Facebook).

Some companies might use a key pair approach, others might use a password and still others might use a combination. Here are a few explanations of each.

PGP Key Pair Encryption

This is the most common PGP method I’ve encountered. I’ve used it when working with insurance claims data, HR data, telecommunications data, and more. Each of these had sensitive information that benefited from being encrypted on transfer.

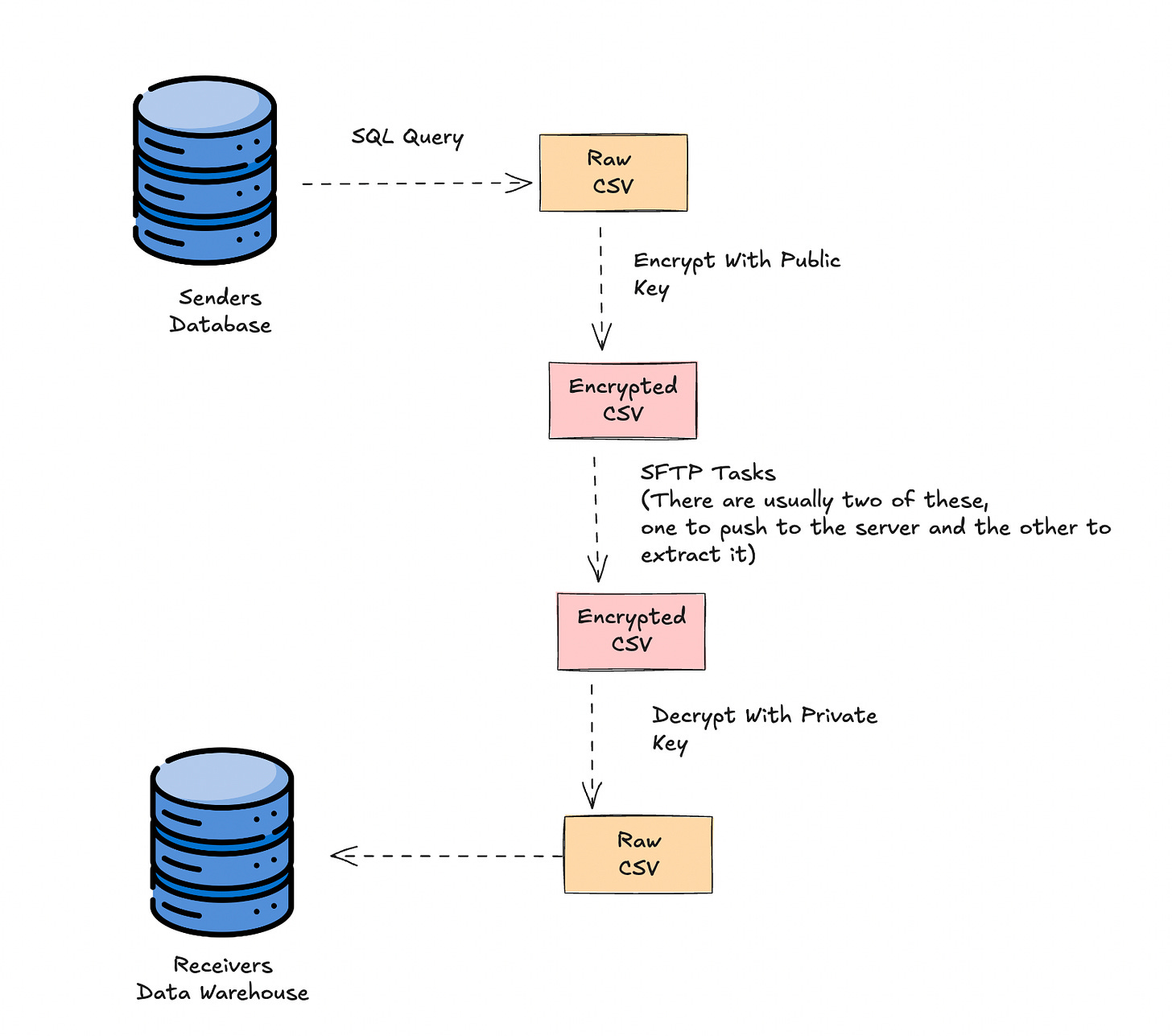

PGP key pair encryption does this by using a public and private key for secure communication: the sender encrypts the file or message using the recipient's public key, and the recipient decrypts it with their private key. This also means you will have to learn how to create a public key pair.

PGP Signatures (Signing Process)

PGP can also verify the authenticity and integrity of a file or message through digital signatures. This means the file will be checked to ensure you actually know who sent it. In this process, the sender "signs" a message or file using their private key, creating proof of origin. The recipient then uses the sender's public key to verify the signature, ensuring that the message or file hasn’t been altered and confirming the sender’s identity. I’ve used this for companies that were very concerned about possibly having a bad actor gain the ssh key or password and username information and send a file over that could contain malware or other malicious scripts or injections. Especially since the processes to extract the data are automated, most people likely don’t go back and check the hundreds of files they are receiving to see if they are malicious.

PGP Encryption + Signature (Combined Process)

Now, not finally in terms of methods that exist, but for the last example I’ll give, PGP can combine encryption and signing in a single process for added security. This means the message or file is both encrypted for confidentiality and signed for authenticity—a practice adopted by several teams I’ve worked with.

Here’s how it works: first, the file or message is signed with the sender’s private key and then encrypted with the recipient’s public key. The recipient decrypts the message with their private key and verifies the signature using the sender’s public key.

Once you’ve got these two basics down, you can start considering the overall process and how you can ensure the data being processed is accurate.

Schema, Header, and Aggregate Files

When setting up an SFTP job, one of the first requirements is often a schema file. This file outlines the expected fields and their order, ensuring both sides have a common structure. Typically, companies prefer to define the schema to streamline integration on their end.

Especially if you are a data analytics company, the last thing you want to deal with is 100 different schemas that you now have to map. It doesn’t mean it doesn’t happen, of course. You should also set up a process where clear communication happens before said schema changes(cries in data engineering).

Once the schema file is agreed upon, there are a few additional files involved that might not have to do with the data, such as the header and aggregate files.

Header: Sometimes, a header will exist in the data file, though this isn’t always the case. When present, the header allows the receiving party to check the validity of the schema. Because, guess what? On more than one occasion, another company changed the schema of the file they were sending me months after we had agreed to the schema.

Aggregate File Checks: Less common but useful, an aggregate file is sometimes provided to double-check the integrity of the data in your main file. The file often includes an aggregate figure that describes the main file—like the total number of rows, total dollars spent, or unique users. This kind of acts like a Checksum, although it's usually a separate query that was run for the raw file.

Will We Ever Replace SFTP With Data Sharing

There are likely tens of thousands of SFTP jobs that are still running today. Who am I kidding, there are probably even more than that.

However, a concept like data sharing(which tools like Snowflake and Databricks allow for) could help nullify the need to create so many data copies—making both APIs and SFTP less necessary.

With data sharing, companies could access shared datasets using just a few SQL commands, eliminating the heavy lifting involved in SFTP or API setups. That said, this shift is likely still 5-10 years away from becoming standard.

How To Send Data Via SFTP

Until then, let’s look at what a basic script would look like to interact with an SFTP.

import os

import paramiko

import gnupg

# Setup for SFTP and PGP

SFTP_HOST = 'your_sftp_host'

SFTP_USERNAME = 'your_sftp_username'

SFTP_PASSWORD = 'your_sftp_password' # Use None if using SSH key authentication

SFTP_PRIVATE_KEY = 'path/to/your/private/key' # Set to None if using password

SFTP_REMOTE_PATH = '/path/to/your/encrypted/file.gpg'

LOCAL_FILE_PATH = 'downloaded_file.gpg'

DECRYPTED_FILE_PATH = 'decrypted_file.txt'

PGP_PASSPHRASE = 'your_private_key_passphrase'

PGP_PRIVATE_KEY = 'path/to/your/private/key' # If not already imported into GPG

gpg = gnupg.GPG()

# If the private key is not imported, import it

with open(PGP_PRIVATE_KEY, 'r') as key_file:

gpg.import_keys(key_file.read())

Connect to the SFTP server and download the file using paramiko

def download_file_from_sftp():

transport = paramiko.Transport((SFTP_HOST, 22))

# Authentication: Use either password or SSH private key

if SFTP_PASSWORD:

print("Authenticating with password...")

transport.connect(username=SFTP_USERNAME, password=SFTP_PASSWORD)

else:

print("Authenticating with SSH private key...")

private_key = paramiko.RSAKey.from_private_key_file(SFTP_PRIVATE_KEY)

transport.connect(username=SFTP_USERNAME, pkey=private_key)

# Start the SFTP session

sftp = paramiko.SFTPClient.from_transport(transport)

print(f"Connected to SFTP server: {SFTP_HOST}")

# Download the remote file

sftp.get(SFTP_REMOTE_PATH, LOCAL_FILE_PATH)

print(f"Downloaded file: {SFTP_REMOTE_PATH} to {LOCAL_FILE_PATH}")

# Close the SFTP session and connection

sftp.close()

transport.close()

#Decrypt the file using PGP key pair

def decrypt_pgp_file():

with open(LOCAL_FILE_PATH, 'rb') as encrypted_file:

decrypted_data = gpg.decrypt_file(encrypted_file, passphrase=PGP_PASSPHRASE)

if decrypted_data.ok:

with open(DECRYPTED_FILE_PATH, 'w') as decrypted_file:

decrypted_file.write(str(decrypted_data))

print(f"File successfully decrypted to {DECRYPTED_FILE_PATH}")

else:

print(f"Failed to decrypt file. Status: {decrypted_data.status}")

# Main flow

if __name__ == '__main__':

# Download the encrypted file from SFTP

download_file_from_sftp()

# Decrypt the downloaded file

decrypt_pgp_file()

SFTP Isn’t Going Anywhere

The first time I worked with SFTP wasn’t in school but at my second job. Before I knew it, I was managing similar workflows at Facebook and with 3-4 different clients.

While it may not be the most exciting work, chances are you’ll run into SFTP at some point, and you might even need to create a generic script that can handle the different authentication and decryption methods. Sure, there are some out-of-the-box options, but having a custom script gives you more control.

With that, thank you for reading!

Join My Data Engineering And Data Science Discord

If you’re looking to talk more about data engineering, data science, breaking into your first job, and finding other like minded data specialists. Then you should join the Seattle Data Guy discord!

We are now well over 7000 members!

Join My Technical Consultants Community

If you’re a data consultant or considering becoming one then you should join the Technical Freelancer Community! We have over 700 members!

You’ll find plenty of free resources you can access to expedite your journey as a technical consultant as well as be able to talk to other consultants about questions you may have!

Articles Worth Reading

There are 20,000 new articles posted on Medium daily and that’s just Medium! I have spent a lot of time sifting through some of these articles as well as TechCrunch and companies tech blog and wanted to share some of my favorites!

Back To The Basics With SQL: Understanding Hash, Merge, and Nested Joins

If you’ve used SQL, you’ve likely encountered the basic joins.

7 essential Mage features for data engineers in 2024

Data engineering continues to evolve causing developers to experience an array of old and new problems. Mage is a comprehensive data engineering platform that provides many solutions to help data engineers create pipelines rather than worry about managing infrastructure, handling complex integrations, and ensuring scalability. See the 7 essential features listed below and start developing pipelines.

End Of Day 149

Thanks for checking out our community. We put out 3-4 Newsletters a week discussing data, tech, and start-ups.

Glad I haven’t had to use SFTP for a while.

this is really great read thank you for sharing