Back To The Basics With SQL: Understanding Hash, Merge, and Nested Joins

If you’ve used SQL, you’ve likely encountered the basic joins.

Inner join, left join, right join, full join and cross join.

Here’s the thing: those joins can actually be translated into very different joins when you execute a query.

We touched on this in a previous article—at least, the fact that when queries run, they go through a process of creating logical query plans that eventually need to be physically implemented.

Several join types may be selected during this implementation depending on your query engine.

So in this article we will be covering three of those joins and how they work.

Why is it important to understand how joins work?

When it comes to performance, understanding which joins are being used can help you improve your query’s efficiency. For example, you may need an index to avoid using a nested join(or at the very least improving the efficiency of a nested join) on a table where the row counts are too large.

This can in turn lead to reduced costs, reduced run times and overall improved system efficiencies.

So, if you want to improve your queries or better understand what’s happening under the hood of your database, let’s explore these join types!

Merge Joins

Let’s start with a merge join.

A merge join is one of the most efficient joins—assuming the two datasets you want to join are pre-sorted. However, this requirement can cause performance issues since sorting the data first can drastically impact the overall computation.

The approach to the join is straightforward. If you take two sorted lists, how could you approach joining them?

You have a pointer for both datasets and can traverse them in the same direction. As you find matches, you store them, and once there isn’t a match, the row moves the pointer at the smallest value down.

This continues until it has processed all rows. Keep in mind that performance may vary if you’re dealing with one-to-many or many-to-many relationships(and there is also more nuance for the approach in an many-to-many situation). Take a moment to think why many-to-many might cause an issue, and if it helps, here’s some pseudocode for a one-to-many merge join, pulled from Craig Freedom’s post(assuming you’re starting with sorted inputs):

get first row R1 from input 1

get first row R2 from input 2

while not at the end of either input

begin

if R1 joins with R2

begin

return (R1, R2)

get next row R2 from input 2

end

else if R1 < R2

get next row R1 from input 1

else

get next row R2 from input 2

EndNow we will talk about nested joins soon. But Craig further had a good line about how you can think about how merge joins compare to nested joins.

Unlike the nested loops join where the total cost may be proportional to the product of the number of rows in the input tables, with a merge join each table is read at most once and the total cost is proportional to the sum of the number of rows in the inputs. - Craig Freedom

If you’d like to explore this topic further, I won’t reproduce Craig Freedom’s entire article here, but you should check it out.

Instead, I’ll provide a helpful visual representation below.

Hash Join

As the name suggests, a hash join relies on hashing. It operates in two distinct phases: the build phase and the probe phase.

Build Phase: In this phase, the typically the smaller of the two tables (often called the "build" input) is scanned, and a hash table is constructed using the values of the join key. Each entry in the hash table stores a tuple from the build input and their corresponding hash values, distributing the data evenly across different "buckets."

Probe Phase: The larger table, usually, (the "probe" input) is scanned next. The join key is hashed using the same function as in the build phase for each tuple. The hash value is then used to check the corresponding bucket in the hash table for matches. If a match is found, the tuples from the build and probe inputs are combined.

Again, pulling from Microsoft, here is pseudocode for a baseline hash join.

for each row R1 in the build table

begin

calculate hash value on R1 join key(s)

insert R1 into the appropriate hash bucket

end

for each row R2 in the probe table

begin

calculate hash value on R2 join key(s)

for each row R1 in the corresponding hash bucket

if R1 joins with R2

return (R1, R2)endnesI refer to this as a baseline because there are always edge cases to consider, such as hash collisions.

Hash Collisions

One potential issue during the probe phase is a hash collision, which occurs when two or more distinct join key values hash to the same bucket. When a collision happens, the system must check each value in the bucket to determine if a true match exists. Depending on the number of collisions and the bucket’s size, this can slow down the joint operation. However, collisions are typically kept to a minimum with a well-distributed hash function.

Hash joins are commonly used for larger table joins, as the algorithm’s time complexity is linear at O(N + M).

In theory, both Hash and Merge joins perform well with large tables. Typically, a query engine will use a hash join when dealing with large, unsorted datasets and equality joins, especially if one table fits in memory. In contrast, a merge join is often used when both tables are pre-sorted on the join key or when performing range-based joins, as it minimizes memory usage and avoids hashing.

Of course, let’s be clear, if you’ve ever run a query, sometimes the optimizer decides to do something you never expected…

With that in mind, let’s dive into nested joins.

Nested Joins

Nested joins is probably the easiest to understand. It’s akin to a “bubble sort”..so to speak. If you asked someone with little experience in algorithms to figure out a way to join tables, this is likely what they’d come up with(ok I do think some people would realize sorting or some form of index would help).

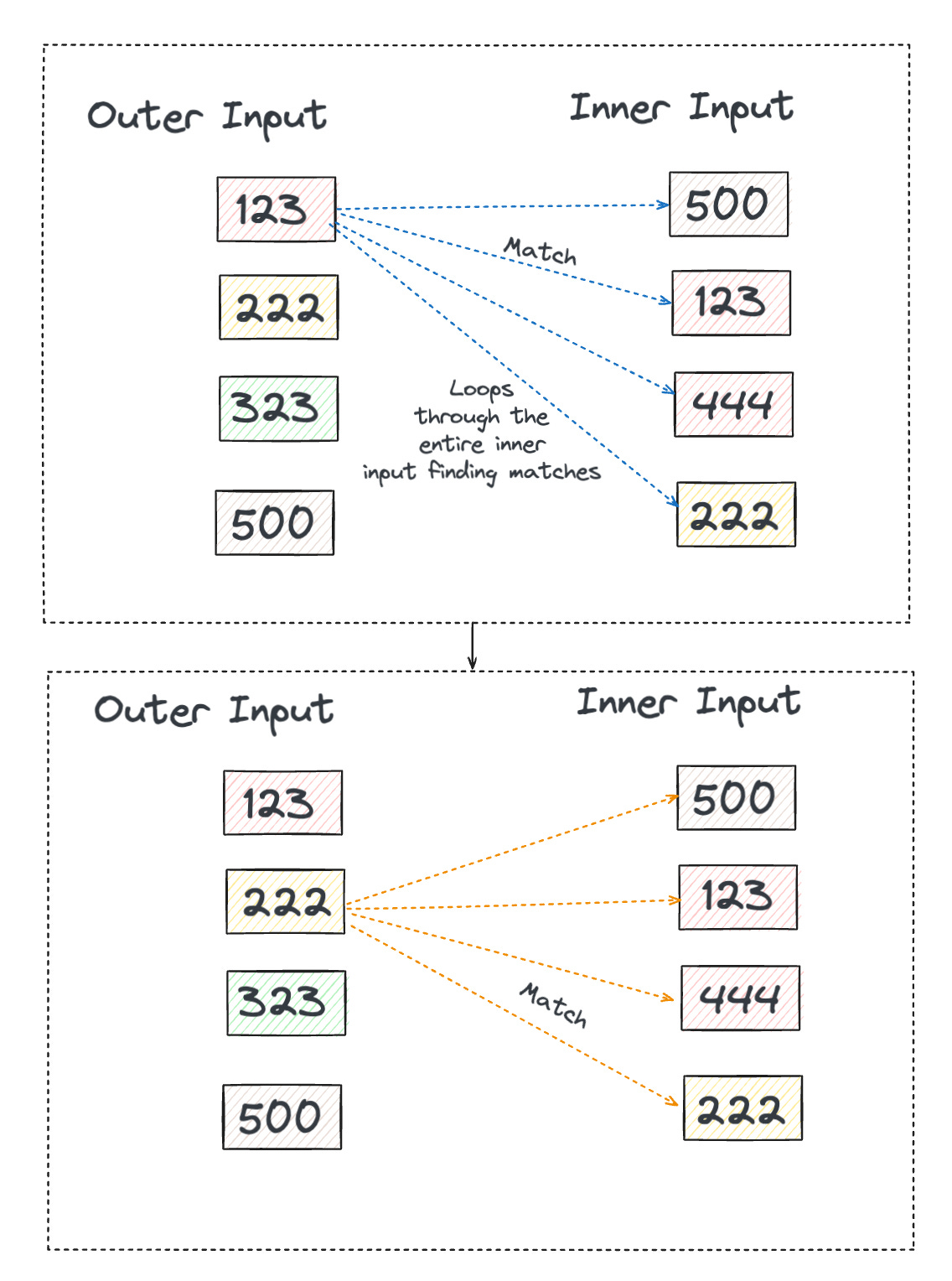

Like other joins, you have two inputs the inner and outer input tables. The system would take the inner table and loop over the entire table once for every row in the outer table. You start at row 1 of the outer table and check each row of the inner table. Then, you move to row 2 of the outer table and repeat the process.

You know, like a nested loop.

for each record in outer_input:

for each record in inner_input:

....It’s similar to the image below.

This explains why unsorted nested joins aren’t suitable for large tables, as their worst-case scenario is O(MN).

However, there are ways to improve this.

For instance, if the inner table is sorted, the join operation can more easily seek values from the outer table. The reason being that if the query engine knows the table is either sorted or indexed it no longer has to go through the entire table every time.

Once all instances of the join key are reviewed, the search for those values can end.

We’ll explore indexes and other methods that can improve these joins in a future article so don’t forget to subscribe!

What Comes After Joins?

Joins are an essential part of working with data, but most of us only know them at a high level without fully understanding the underlying processes that bring our data together. There are many other factors to consider, like indexes and their role in enhancing performance.

For now, I hope this provides insight into what’s happening behind the scenes in the database systems we all rely on.

As always, thanks for reading!

Don’t Miss Out On Day Two Of The Free Data Engineering And Machine Learning Summit!

Over 4000 people have signed up for the free and online Data Engineering And Machine Learning Summit, which is kicking off today!

If you're looking to hear from data experts about how they solve real data problems, then you're not going to want to miss out.

We have 30 amazing talks that you are not going to want to miss!

They'll cover Apache Iceberg, building data stacks, MLOps, and so much more.

So check it out here!

Special thanks to Databricks for sponsoring DEML 2024 and Accel Events for making it so easy to manage the event.

Join My Data Engineering And Data Science Discord

If you’re looking to talk more about data engineering, data science, breaking into your first job, and finding other like minded data specialists. Then you should join the Seattle Data Guy discord!

We are now well over 7000 members!

Join My Technical Consultants Community

If you’re a data consultant or considering becoming one then you should join the Technical Freelancer Community! We have over 700 members!

You’ll find plenty of free resources you can access to expedite your journey as a technical consultant as well as be able to talk to other consultants about questions you may have!

Articles Worth Reading

There are 20,000 new articles posted on Medium daily and that’s just Medium! I have spent a lot of time sifting through some of these articles as well as TechCrunch and companies tech blog and wanted to share some of my favorites!

The End of the Unstructured Data Era

In August of 2020, a team at Facebook first presented a paper on Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. This paper introduced an idea of how to augment the information retrieval of an AI model with additional data that a model could query and retrieve from external data sources. Four years later, the market has now developed the tools and ecosystem required to establish RAG as the ‘killer app’ that can usher in the value of AI to enterprises looking to embrace faster knowledge-sharing and decision-making.

So what is the challenge that enterprise RAG-based systems are attempting to solve?

As organizations start to bring AI agents into their environment, it’s clear that there are things that an agent can and does know, and there are things trained AI models can’t yet accomplish. Some challenges include:

Why Is Data Modeling So Challenging – How To Data Model For Analytics

Learning about how to data models from basic star schemas on the internet is like learning data science using the IRIS data set.

It works great as a toy example.

But it doesn’t match real life at all.

Data modeling in real life requires you fully understand the data sources and your business use cases. Which can be difficult to replicate as each business might have its data sources set up differently.

For example, one company might have a simple hierarchy table that can be pulled from Netsuite or its internal application.

Whereas another one might have it strewn across 4 systems, with data gaps, all of which need to be fixed to report accurately. So you’ll never really understand the challenge you will face when data modeling until you have to do it. Then you’ll start to understand how you should data model and all the various trad-offs you’ll have to make.

In this article I wanted to discuss those challenges and help future data engineers and architects face them head on!

From Basics to Challenges: A Data Engineer's Journey with APIs

If you've had to build any data pipelines for analytics, then you're likely very familiar with the extract phase of an ELT or ETL.

End Of Day 146

Thanks for checking out our community. We put out 3-4 Newsletters a week discussing data, tech, and start-ups.