Why Data Pipelines Exist

Beyond Moving Data From Point A To B

Hi, fellow future and current Data Leaders; Ben here 👋

Today I’ll be getting back into my series on data pipelines. One question that I believe is important to answer is why. Why even build data pipelines?

But before we jump in, I wanted to share a bit about Estuary, a platform I’ve used to help make clients’ data workflows easier and am an adviser for. Estuary helps teams easily move data in real-time or on a schedule, from databases and SaaS apps to data lakes and warehouses, empowering data leaders to focus on strategy and impact rather than getting bogged down by infrastructure challenges. If you want to simplify your data workflows, check them out today.

Now let’s jump into the article!

When I first started in the data world, no one around me used the term data pipeline.

I heard terms like integrations, automations and ETL.

In fact, I am not even sure when I first came across the term. But if you’re a data engineer in this modern era, then much of your time is spent building, maintaining, and keeping data pipelines running smoothly.

Even with AI, you’re probably still finding yourself opening up 3,000-line queries and the occasional custom data pipeline system.

What a Data Pipeline Actually Does

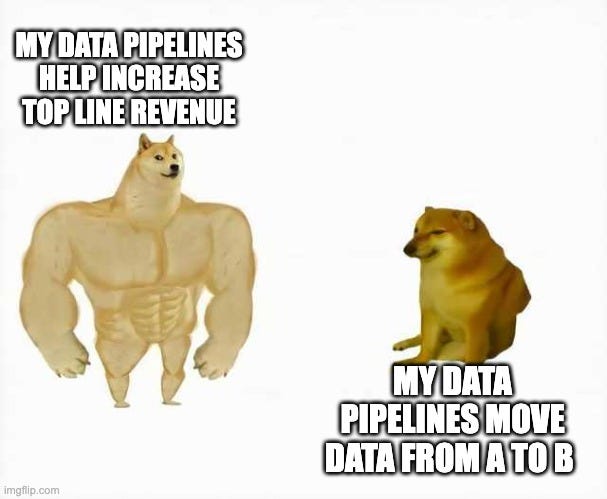

When you look at data pipelines, here is what most people would say they do:

Move data from a source to a destination

Sometimes they transform that data

And they do all of this repeatedly and reliably without human intervention

That’s the technical function of a data pipeline.
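To make those three points concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `SOURCE_ORDERS` list stands in for a real source system, an in-memory SQLite database stands in for the destination, and the function names are made up. A scheduler or orchestrator would normally trigger `run_pipeline`.

```python
import sqlite3

# Hypothetical source rows, standing in for an API response or a source table.
SOURCE_ORDERS = [
    {"order_id": 1, "amount": "19.99", "status": " shipped "},
    {"order_id": 2, "amount": "5.00", "status": "PENDING"},
]


def extract():
    """Read rows from the source (here, just an in-memory list)."""
    return list(SOURCE_ORDERS)


def transform(rows):
    """Cast amounts to numbers and normalize the status text."""
    return [
        (row["order_id"], float(row["amount"]), row["status"].strip().lower())
        for row in rows
    ]


def load(conn, rows):
    """Write rows to the destination; the upsert keeps reruns from duplicating data."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, amount REAL, status TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """One full run: source to destination with no manual steps."""
    load(conn, transform(extract()))


conn = sqlite3.connect(":memory:")
run_pipeline(conn)
run_pipeline(conn)  # a scheduler would trigger this; running twice is safe
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Running it twice on purpose shows the "repeatedly and reliably" part: the second run leaves the table in the same state as the first.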

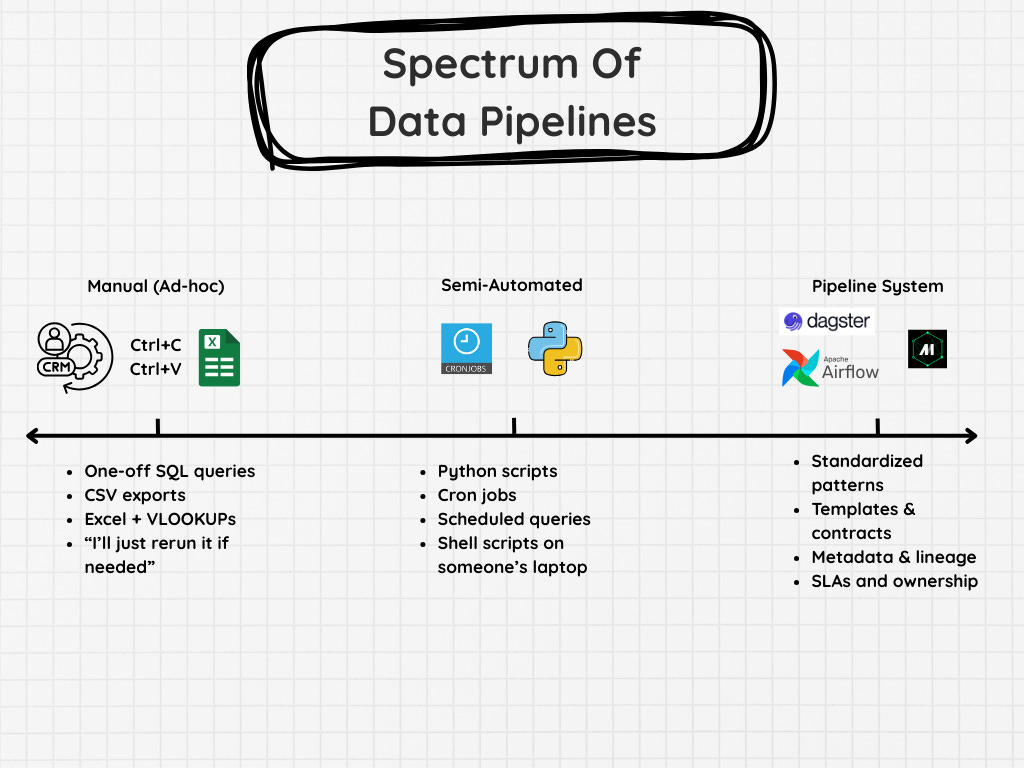

How it happens can vary.

This could be automated SQL, Python scripts, Airflow, Estuary, SSIS, Glue, and so many other tools.

But you do need to think beyond just this when it comes to data pipelines.

Pulling in a recent post from Zach Wilson.

It’s important to think beyond just moving data from A to B and start thinking in outcomes and ownership.

What is the data pipeline actually doing?

The Real Reason Data Pipelines Exist: Trust

We alluded to this above, but let’s talk about why data pipelines exist. Because, hey, we could just manually load data into databases.

Just use:

    COPY INTO analytics.raw_orders
    FROM @raw_stage/orders/
    FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1)
    ON_ERROR = 'CONTINUE';

Done!

No need to automate anything, right?

After all, we are just moving data from point A to B.

Well, there are many reasons we automate data workflows and turn them into data pipelines. Here are the key benefits we get.

Timeliness

Accuracy

Consistency

Recoverability

Scalability

But it goes even beyond just recoverability and consistency. We really are trying to make data more valuable. In order to do so, we must also consider these pillars.

Integration

Availability

Outcomes

Now I will say, not every data pipeline you build will have all the goals listed above, especially the pillars in the second list.

In some cases, data pipelines merely integrate data from a CRM into another internal system that has nothing to do with reporting.

Still others might pull data out of your CRM, run some calculations, and reinsert it.

I am merely saying this to point out that not all data pipelines are used to push data into data warehouses. There are plenty of other reasons a data pipeline might exist.

Why You Need To Care About These

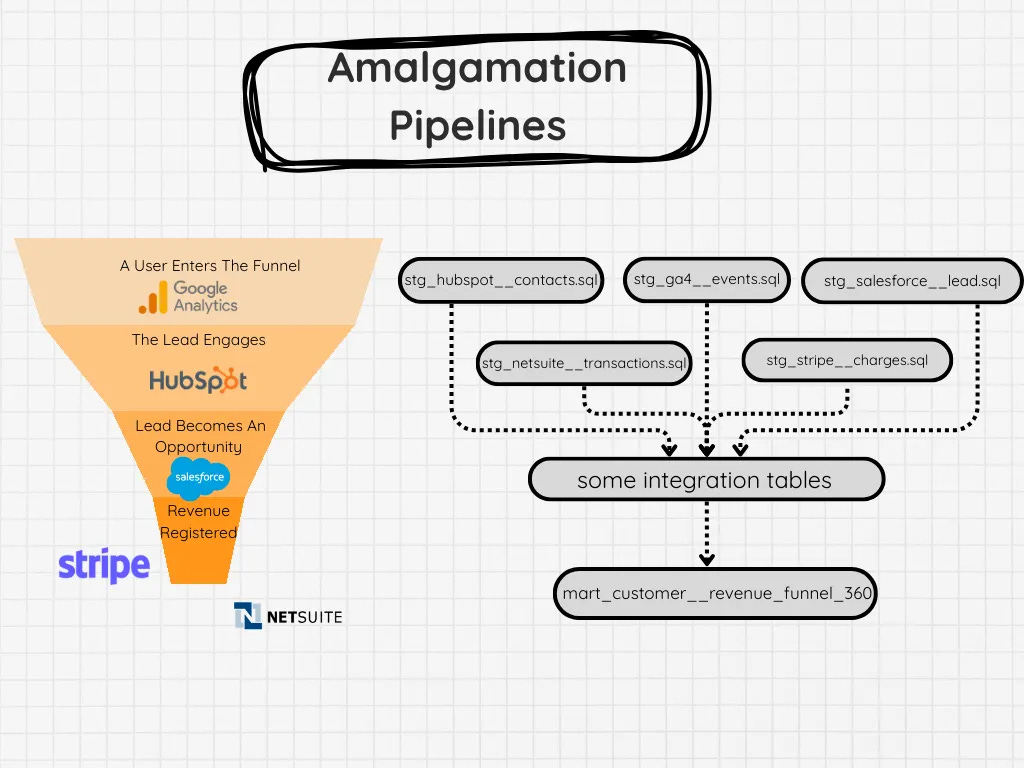

Integration

Many data teams aren’t building data warehouses; they are just replicating their databases and CRMs into Snowflake or Databricks. The result is the same siloed data that once sat in separate systems, now sitting in separate schemas as un-integrated data sets.

Part of what the data pipeline is supposed to handle in terms of logic (as determined by the data modeling process) is integration: the parsing, cleaning, and adding of keys that allow you to join data across systems. This also means you’ll likely need to consider which data sets in the source systems will need to join with each other.
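As a rough illustration of adding keys that let you join across systems, here is a small Python sketch. The two extracts, their field names, and the choice of a cleaned email as the shared key are all assumptions for the example; your data modeling process would decide the real key.

```python
def make_join_key(email):
    """Build a shared key by trimming whitespace and lowercasing the email."""
    return email.strip().lower()


# Hypothetical extracts from two separate systems.
crm_contacts = [
    {"crm_id": "C-1", "email": " Ana@Example.com "},
    {"crm_id": "C-2", "email": "bo@example.com"},
]
billing_accounts = [
    {"account_id": 501, "email": "ana@example.com", "mrr": 120},
    {"account_id": 502, "email": "cy@example.com", "mrr": 80},
]


def integrate(contacts, accounts):
    """Join CRM contacts to billing accounts on the cleaned key."""
    accounts_by_key = {make_join_key(a["email"]): a for a in accounts}
    joined = []
    for contact in contacts:
        key = make_join_key(contact["email"])
        account = accounts_by_key.get(key)
        joined.append(
            {
                "customer_key": key,
                "crm_id": contact["crm_id"],
                # None means the contact has no match in billing yet.
                "account_id": account["account_id"] if account else None,
                "mrr": account["mrr"] if account else None,
            }
        )
    return joined


customers = integrate(crm_contacts, billing_accounts)
for customer in customers:
    print(customer)
```

Without the cleaning step, " Ana@Example.com " and "ana@example.com" would never match, which is exactly the kind of silent gap that leaves replicated data un-integrated.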

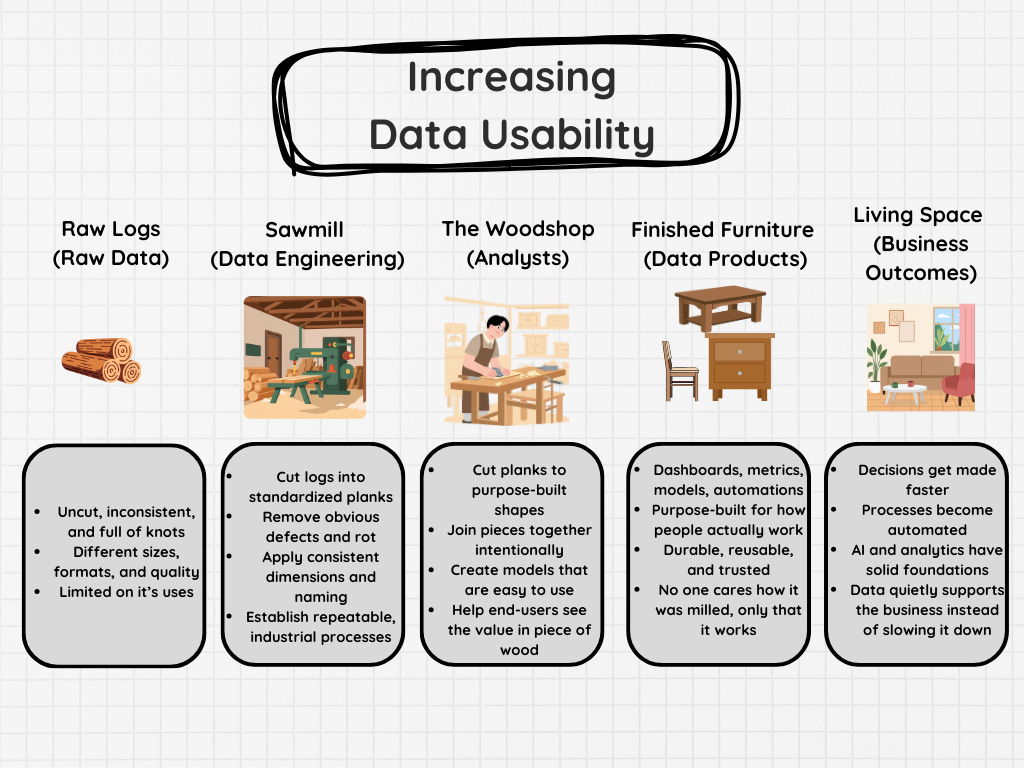

Availability And Usability

Many data workflows require data analysts to go to the source systems and extract the data into Excel. From there, they need to manually process it, set up VLOOKUPs, and build out a “database” in Excel.

Part of what data pipelines do is move data into the data warehouse, making said data easier to access.

And this is not just for end-users like analysts, but also automations and LLMs. Having data centralized means it’s easier to work with said data, especially when it’s well modeled.

The easier data is for the right users to access, the more they can actually use it for.

Scalability

At a certain point, having half-automated scripts run by cron might be too chaotic. Sure, if you only need 2-3 simple data workflows managed, this might be fine.

But as your data use cases grow.

As your data team grows.

As the end-users of said data grow.

You’ll want data pipeline systems that make it easy to automate.

Think about having to rerun 200 data pipelines. That’s logistically difficult if you can’t easily kick all the jobs off and track their successes or failures.
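Here is a hedged sketch of what "kick all the jobs off and track their successes or failures" could look like in plain Python. The `run_job` function is a made-up placeholder that fails every 50th job to simulate bad runs; an orchestrator like Airflow gives you this kind of tracking out of the box, so treat this as an illustration of the idea rather than a replacement.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_job(job_id):
    """Hypothetical job body; every 50th job fails to simulate a bad run."""
    if job_id % 50 == 0:
        raise RuntimeError(f"job {job_id} failed")
    return job_id


def rerun_all(job_ids, max_workers=8):
    """Kick off every job and record which ones succeeded or failed."""
    succeeded, failed = [], {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(run_job, job_id): job_id for job_id in job_ids}
        for future in as_completed(futures):
            job_id = futures[future]
            try:
                future.result()
                succeeded.append(job_id)
            except Exception as exc:  # keep going so one failure does not stop the rest
                failed[job_id] = str(exc)
    return sorted(succeeded), failed


succeeded, failed = rerun_all(range(1, 201))
print(f"{len(succeeded)} succeeded, {len(failed)} failed: {sorted(failed)}")
```

The point is the summary at the end: with 200 jobs, you want one place that tells you which handful need attention.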

Outcomes

Data pipelines can easily be built without a clear purpose. But I think it’s important to think about the “so what”. Why are you building your data pipeline?

Is it to automate a process, and if so, does it need to ingest data into the data warehouse?

What business goal are you hoping to drive with the building of your pipeline? Every new data pipeline you build without a clear purpose just becomes a technical liability over time. It increases cost, maintenance time, etc.

So what is your team hoping to do with the data pipeline?

Here are a few examples. You could say your data pipeline:

Reduces unnecessary discounting by analyzing win/loss data and discounts to show where deals close without price concessions.

Improves onboarding success by identifying which onboarding steps and early product behaviors correlate with long-term retention.

Reduces support costs by linking support tickets to product events to eliminate the root causes driving repeat issues.

Increases retention through proactive customer success by alerting CS teams when usage drops or support volume spikes.

Timeliness

One of the great things about data pipelines is that they are easy to track and can run whenever you need them to.

Meaning, if you need them to prepare a data set prior to 8 AM, they can do that. You know how long it’ll take (assuming nothing goes wrong, and even then, you can likely set up some level of recoverability).

An analyst doesn’t have to wake up early to make sure the data gets processed in an Excel file. Instead, it can land in a table and be picked up as needed.
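Since you know roughly how long a run takes, you can work backward from the deadline to a schedule. A tiny sketch, where the 45-minute runtime and the 30-minute buffer are made-up numbers and `latest_start` is a hypothetical helper:

```python
from datetime import datetime, timedelta


def latest_start(deadline, expected_runtime, buffer):
    """Latest time a run can start and still finish before the deadline."""
    return deadline - expected_runtime - buffer


deadline = datetime(2024, 1, 5, 8, 0)  # data must be ready by 8 AM
start_by = latest_start(
    deadline,
    expected_runtime=timedelta(minutes=45),
    buffer=timedelta(minutes=30),  # room for one retry
)
print(start_by.strftime("%H:%M"))
```

The buffer is where recoverability meets timeliness: it is the slack that lets a retry finish before anyone opens the dashboard.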

Accuracy

We in the data world love talking about data quality. Well, the data pipeline is one of the many places where data can be transformed improperly. Data can be duplicated, removed, or altered in such a way that it is no longer accurate.

Data pipelines are a great place to check for data issues.

These checks can start before you even process the data, confirming that the source contains the expected fields and ranges of data. From there, as you transform the data throughout your pipeline, you’ll likely need to implement other checks.
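A pre-processing check for expected fields and ranges might look something like the sketch below. The field names in `EXPECTED_FIELDS` and the amount bounds are assumptions for the example, not a recommended schema.

```python
EXPECTED_FIELDS = {"order_id", "amount", "order_date"}  # hypothetical schema


def check_source(rows, min_amount=0.0, max_amount=100_000.0):
    """Return a list of problems found in the source rows before any processing."""
    problems = []
    for index, row in enumerate(rows):
        missing = EXPECTED_FIELDS - set(row)
        if missing:
            problems.append(f"row {index}: missing fields {sorted(missing)}")
            continue  # skip the range check when the field may not exist
        if not (min_amount <= row["amount"] <= max_amount):
            problems.append(f"row {index}: amount {row['amount']} out of range")
    return problems


rows = [
    {"order_id": 1, "amount": 25.0, "order_date": "2024-01-05"},
    {"order_id": 2, "amount": -4.0, "order_date": "2024-01-05"},
    {"order_id": 3, "order_date": "2024-01-06"},
]
problems = check_source(rows)
for problem in problems:
    print(problem)
```

Whether a problem should stop the run or just raise a warning is a judgment call, and it is a big part of keeping checks from getting noisy.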

Consistency

The problem with “Excel data pipelines” is they offer room for errors. You copy and paste the wrong data set or forget to update a formula.

A programmed data pipeline is repeatable and consistent.

You can create logic to check for errors, like the wrong data being inserted or dimensional data going missing. So even if you do have an issue, the pipeline can flag it early. It also helps avoid fat-finger issues where someone accidentally types in the wrong number.
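For the missing dimensional data case, here is one possible check, sketched in Python. The product dimension, the sales rows, and the `find_missing_dimensions` helper are all hypothetical.

```python
def find_missing_dimensions(fact_rows, dimension_keys, key_field):
    """Return the fact rows whose key has no matching dimension record."""
    return [row for row in fact_rows if row[key_field] not in dimension_keys]


# Hypothetical dimension and fact data.
product_dimension = {"P-1": "Widget", "P-2": "Gadget"}
sales_facts = [
    {"sale_id": 1, "product_id": "P-1", "quantity": 3},
    {"sale_id": 2, "product_id": "P-9", "quantity": 1},  # unknown product
]

orphans = find_missing_dimensions(sales_facts, product_dimension, "product_id")
if orphans:
    # A real pipeline might fail the run or send an alert here.
    print(f"{len(orphans)} fact row(s) reference missing dimension data: {orphans}")
```

Because the same check runs on every load, it catches the problem the first day it shows up instead of whenever someone happens to notice a number looks off.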

Recoverability

Sometimes, the wrong data enters a data workflow. We want to be able to detect that and then be able to rerun our data processes easily without having to worry about what else could go wrong.

We don’t want to worry about duplicate data.

We don’t want to worry about a small step being missed.

So having the process codified ensures we know exactly what will happen in terms of data tables being populated.
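One common way to get safe reruns is to replace a whole slice of data inside a single transaction. The sketch below uses an in-memory SQLite table and a made-up `daily_sales` layout, so take it as an illustration of the delete-then-insert pattern under those assumptions rather than the only way to do it.

```python
import sqlite3


def reload_day(conn, day, rows):
    """Replace one day's rows atomically so a rerun never duplicates data."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute("DELETE FROM daily_sales WHERE sale_date = ?", (day,))
        conn.executemany(
            "INSERT INTO daily_sales (sale_date, store, total) VALUES (?, ?, ?)",
            [(day, store, total) for store, total in rows],
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (sale_date TEXT, store TEXT, total REAL)")

# First run loads a bad value; the rerun replaces it with corrected data.
reload_day(conn, "2024-01-05", [("north", 100.0), ("south", 999999.0)])
reload_day(conn, "2024-01-05", [("north", 100.0), ("south", 250.0)])

result = conn.execute(
    "SELECT store, total FROM daily_sales ORDER BY store"
).fetchall()
print(result)
```

After the rerun there are still only two rows for the day, and the bad value is gone. No duplicates, no missed step.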

Final Thoughts And What Is Coming

Data pipelines are everywhere in companies. They take many different shapes and forms, but overall, their goal is to do more than just move data from point A to B.

In case you missed my last article, we’ve already covered some of the various data pipelines that exist (I even labeled one the Excel Data Pipeline in the past).

In the next few articles, I’ll be covering several other key data pipeline topics:

Backfills: The Thing Everyone Avoids Until It’s Too Late

Building your first data pipeline, from Excel to Airflow

Incremental vs Full Refresh Pipelines

Daily Tasks With Data Pipelines - Data Quality Checks And The Problem With Noisy Checks

Articles Worth Reading

There are thousands of new articles posted daily all over the web! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

When Did “Rock” Become “Classic Rock”? A Statistical Analysis

I first grasped the strangeness of the term “classic rock” while listening to a pop-punk song. In 2004, Bowling for Soup topped the charts with “1985,” a track about a frustrated housewife nostalgically longing for her Reagan-era youth.

During the song’s bridge, our protagonist laments cultural change: “She hates time, make it stop. When did Mötley Crüe become classic rock?” It was at this moment that I—a teenager—first understood the peculiarity of genre:

Apparently, there was a band called Mötley Crüe.

This motley crew was initially classified as one genre—rock—before being reclassified as “classic rock.”

Contrary to my longstanding belief, “classic rock” was not etched into the Ten Commandments—it was a contemporary radio format, likely devised by someone in public relations.

Snowflake vs Databricks Is the Wrong Debate

Over the last few years, Databricks has been executing a strategy to take over the entire data workflow.

Maybe it never started that way.

Maybe when they first came out, they only ever planned to be a managed Spark solution. But I have a hard time believing that, mostly because I believe their leadership has the vision and capabilities to see far beyond that.

Databricks has always been pretty upfront that they want to be the end-to-end data stack. But they’ve been approaching it piece by piece.

Or should I say role by role?

Obviously, at first, their focus was on the data scientist and ML engineer.

End Of Day 210

Thanks for checking out our community. We put out 4-5 Newsletters a month discussing data, tech, and start-ups.

If you enjoyed it, consider liking, sharing and helping this newsletter grow.

Coding agents completely shifted the way we build software. If your job is to "just move data from point A to point B", you are just a slow and expensive AI agent.

I've been slinging data around for a while and it was never just moving data from A to B.