Using The Cloud As A Data Engineer

Looking Into How You'll Use AWS

Cloud service providers such as AWS and GCP offer hundreds of services, and it can be confusing to figure out which service does what and how data engineers and data scientists might use them.

In this article, I want to discuss some of the services that are useful to know as a data engineer, along with real-world examples of where I have used them. I’ll only be focusing on AWS for now.

So let’s dive into the cloud services you should know as a data engineer.

Lambdas and Serverless Functions

Let’s say you want to automate a task like loading a new file when it lands in S3 or automatically running a script at midnight.

Lambdas and other serverless functions can be a great solution here, especially if you don’t have a complex data pipeline. Instead of setting up Airflow or another orchestrator, you can write a simple script that can then be placed into a Lambda.

For example, as I referenced earlier, you might have a script that is triggered every time a new file lands in S3 or you could set it to run daily using EventBridge.

Take the script below, which pulls YouTube video data through the YouTube Data API and writes it to S3 as a CSV.

    import csv
    from io import StringIO

    import boto3
    from googleapiclient.discovery import build

    api_key = 'REPLACE WITH YOUR API KEY, usually in AWS key service'
    youtube = build('youtube', 'v3', developerKey=api_key)


    def fetch_video_details(video_id):
        # Fetch detailed information about the video
        video_response = youtube.videos().list(
            id=video_id,
            part='snippet,statistics,contentDetails'
        ).execute()

        if video_response['items']:
            video_data = video_response['items'][0]
            details = {
                'Title': video_data['snippet']['title'],
                'Published At': video_data['snippet']['publishedAt'],
                'View Count': video_data['statistics'].get('viewCount', '0'),
                'Like Count': video_data['statistics'].get('likeCount', '0'),
                # Note that dislike counts may not be available due to YouTube API changes
                'Dislike Count': video_data['statistics'].get('dislikeCount', '0'),
                'Comment Count': video_data['statistics'].get('commentCount', '0'),
                'Duration': video_data['contentDetails']['duration'],
                'Video ID': video_id
            }
            return details
        else:
            return None


    def lambda_handler(event, context):
        # The code below pulls out the channel ID from the event
        # channel_id = event['channel_id']
        # You could also hard code it to make sure everything is working
        channel_id = 'REPLACE WITH YOUR CHANNEL ID'

        # Get upload playlist ID
        channel_response = youtube.channels().list(id=channel_id, part='contentDetails').execute()
        playlist_id = channel_response['items'][0]['contentDetails']['relatedPlaylists']['uploads']

        # Fetch videos from the playlist, one page (up to 50 items) at a time
        videos = []
        next_page_token = None
        while True:
            playlist_response = youtube.playlistItems().list(
                playlistId=playlist_id,
                part='snippet',
                maxResults=50,
                pageToken=next_page_token
            ).execute()
            videos += [item['snippet']['resourceId']['videoId'] for item in playlist_response['items']]
            next_page_token = playlist_response.get('nextPageToken')
            if next_page_token is None:
                break

        csv_output = StringIO()
        fieldnames = ['Title', 'Published At', 'View Count', 'Like Count', 'Comment Count', 'Duration', 'Video ID']
        # 'Dislike Count' is not a CSV column, so ignore extra keys instead of raising ValueError
        writer = csv.DictWriter(csv_output, fieldnames=fieldnames, extrasaction='ignore')
        writer.writeheader()

        for video_id in videos:
            video_details = fetch_video_details(video_id)
            if video_details:
                writer.writerow(video_details)
                print(f'Video details fetched for {video_details["Title"]}')

        # Reset the pointer to the start of the string
        csv_output.seek(0)

        # Upload the CSV to S3
        s3_client = boto3.client('s3')
        s3_bucket_name = 'REPLACE WITH YOUR BUCKET NAME'
        s3_file_key = 'youtube_video_details.csv'
        s3_client.put_object(Bucket=s3_bucket_name, Key=s3_file_key, Body=csv_output.getvalue())
        print(f'Successfully uploaded the CSV to s3://{s3_bucket_name}/{s3_file_key}')

        return {
            'statusCode': 200,
            'body': 'CSV uploaded successfully'
        }

Now, once the file above lands in S3, you can set up another event to load said file into a data warehouse. Of course, with every new task, the case for a more sophisticated orchestrator gets stronger.
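The follow-up event described above could be handled by a second Lambda subscribed to the bucket's S3 notifications. The sketch below is a minimal illustration, assuming the standard S3 event notification shape (`Records[].s3.bucket.name` and `Records[].s3.object.key`). `extract_s3_objects` is a hypothetical helper name, and the actual warehouse load is left as a placeholder because it depends on which warehouse you use.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    objects = []
    for record in event.get("Records", []):
        if record.get("eventSource") != "aws:s3":
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    loaded = []
    for bucket, key in extract_s3_objects(event):
        # Placeholder: a COPY/INSERT into your warehouse would go here.
        print(f"Would load s3://{bucket}/{key}")
        loaded.append(f"s3://{bucket}/{key}")
    return {"statusCode": 200, "body": loaded}
```

Keeping the event parsing in its own function makes it easy to test locally with a hand-written event before wiring up the real trigger.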

I have used Lambdas this way multiple times, as they really are simple to set up and, thus far, have been reliable.

S3

Now, in the prior example, I referenced S3, or Simple Storage Service (one of the first services AWS offered).

As the name suggests, it's a place where you can store just about any form of data, such as images, videos, text files, parquet, CSVs, etc. As a data engineer, you’ll likely use it for a few different reasons including:

- Storing raw data to load into your data warehouse
  - You could use a Lambda + EventBridge, as pointed out above, to load into your data warehouse
  - You could also use Snowpipe and AWS SQS (if your DW is Snowflake)
  - And honestly dozens of other solutions
- Serving as the basis for your data lake or data lakehouse
- Setting up a file drop location where external partners might send data (although if data sharing is possible, that’d likely be better)
- Storing semi-structured and unstructured data for data scientists and ML engineers
- And plenty of other use cases
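For the raw-data and data lake use cases, it often helps to agree on a key layout up front. The sketch below shows one possible convention, a Hive-style `dt=` date folder per source. The prefix, source name, and `build_raw_key` helper are all hypothetical; nothing about S3 requires this particular layout.

```python
from datetime import date


def build_raw_key(source, load_date, filename, prefix="raw"):
    """Build a date-partitioned S3 object key (Hive-style `dt=` folder)."""
    return f"{prefix}/{source}/dt={load_date.isoformat()}/{filename}"


print(build_raw_key("youtube", date(2024, 2, 1), "video_details.csv"))
# raw/youtube/dt=2024-02-01/video_details.csv
```

A predictable layout like this makes it easier for downstream loaders and query engines to pick up only the dates they need.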

In the end, the data you’re storing may well be used by the next service on this list.

Amazon Athena

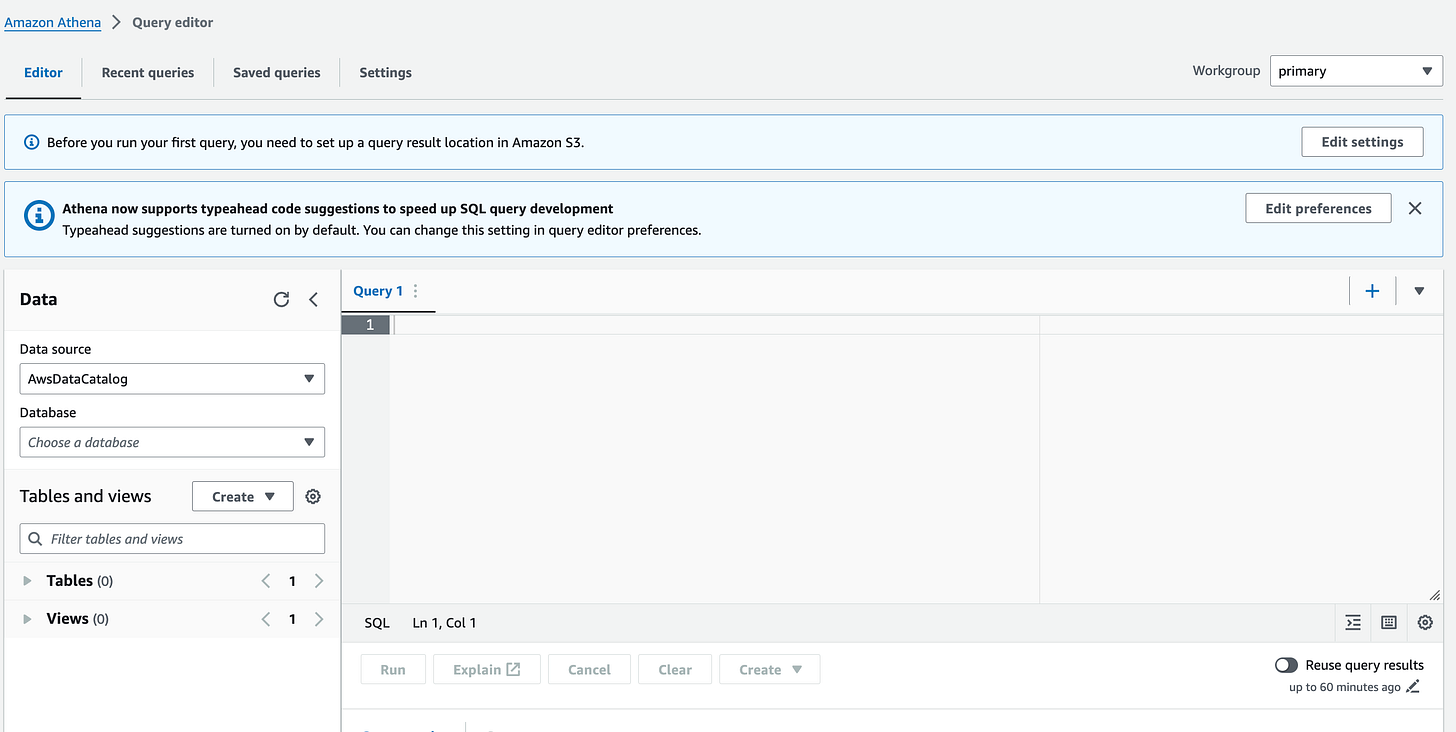

A perhaps less frequently referenced service that AWS offers is Amazon Athena.

It’s built on Trino and Presto and offers a serverless query service that lets the data engineering team analyze data stored in Amazon S3, or in dozens of other data sources, directly with SQL, without needing to set up any servers or a data warehouse.

Here are a few benefits you get when querying data using Athena.

Federated Queries: Athena supports federated queries, which allow you to run SQL queries across data stored in relational, non-relational, object, and custom data sources (such as MongoDB, Apache HBase, and more).

Serverless Querying: There really isn’t any infrastructure or updates to manage. You can simply click “Set Up SQL Editor” and get started.

Cost-Effective Analysis: Since Athena is serverless, the team pays only for the queries they run, based on the amount of data scanned by each query. This pricing model is cost-effective for intermittent or ad-hoc analysis needs. I will point out that there are several data warehouse solutions that also offer similar benefits.
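To make the pay-per-scan model concrete, here is a rough back-of-the-envelope sketch. The rate of 5 USD per TB scanned and the 10 MB per-query minimum are assumptions used for illustration; check the current Athena pricing page for your region before relying on them. `estimate_athena_cost` is a hypothetical helper, not part of any AWS SDK.

```python
TB = 1024 ** 4
MB = 1024 ** 2


def estimate_athena_cost(bytes_scanned, usd_per_tb=5.0, min_bytes=10 * MB):
    """Rough per-query cost from bytes scanned (rates are assumptions)."""
    billable = max(bytes_scanned, min_bytes)
    return billable / TB * usd_per_tb


# Scanning 200 GB of raw logs versus 5 GB after partitioning or compression
print(round(estimate_athena_cost(200 * 1024 ** 3), 4))
print(round(estimate_athena_cost(5 * 1024 ** 3), 4))
```

The takeaway is that cost tracks the data scanned, so partitioning and columnar formats such as Parquet tend to matter more than query complexity.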

As referenced earlier, Athena generally sits on top of S3, which is what I have seen multiple clients do. In one case, a client wanted to analyze their logs (both to detect anomalies and to analyze user behavior), which were loaded into S3 in real time. Since the queries were relatively simple and didn’t need to run daily, Athena was a cheaper option than reloading the data into another solution such as BigQuery.
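A log analysis like the one described could be kicked off from Python roughly as follows. This is a sketch, not the client's actual setup: the `app_logs` table, its columns, the database name, and the results bucket are hypothetical. The function takes an Athena client as a parameter (for example `boto3.client("athena")`) and uses its `start_query_execution` and `get_query_execution` calls.

```python
import time


def run_athena_query(athena, sql, database, output_location, poll_seconds=1.0):
    """Submit a query, wait for it to finish, and return (state, bytes_scanned)."""
    started = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]
    while True:
        execution = athena.get_query_execution(QueryExecutionId=query_id)["QueryExecution"]
        state = execution["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            scanned = execution.get("Statistics", {}).get("DataScannedInBytes", 0)
            return state, scanned
        time.sleep(poll_seconds)


# Hypothetical table and columns, for illustration only.
ERRORS_PER_HOUR = """
SELECT date_trunc('hour', event_time) AS hour, count(*) AS errors
FROM app_logs
WHERE status_code >= 500
GROUP BY 1
ORDER BY 1
"""

# Usage (requires boto3 and AWS credentials):
#   import boto3
#   state, scanned = run_athena_query(
#       boto3.client("athena"), ERRORS_PER_HOUR, "logs_db", "s3://my-athena-results/")
```

Returning the bytes scanned alongside the final state makes it easy to keep an eye on what each ad-hoc query costs.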

MWAA

There have been a lot of shots taken toward Airflow during the past few weeks, but a lot of data engineers rely on it. So whether you like it, are migrating away from it or are indifferent, it’ll likely be around for a while.

Suppose you want to build a more sophisticated set of tasks than what we built in the Lambda section of this newsletter. You have multiple dependencies, and the idea of a DAG (directed acyclic graph) sounds good to you. Well, more than likely you’ll run into Airflow (or one of the many solutions currently trying to replace it).
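If the DAG idea is new, the sketch below illustrates it with Python's standard-library `graphlib` rather than Airflow itself, so it runs without any installation. The task names are hypothetical. An orchestrator such as Airflow does far more (scheduling, retries, logging), but the core idea of resolving dependencies into a valid run order looks like this:

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
# Task names are hypothetical.
dependencies = {
    "extract_youtube": set(),
    "extract_logs": set(),
    "load_to_s3": {"extract_youtube", "extract_logs"},
    "load_warehouse": {"load_to_s3"},
    "build_report": {"load_warehouse"},
}


def execution_order(deps):
    """Return one valid run order; raises graphlib.CycleError if deps contain a cycle."""
    return list(TopologicalSorter(deps).static_order())


print(execution_order(dependencies))
```

The two extract tasks have no dependency on each other, so an orchestrator would be free to run them in parallel before `load_to_s3` starts.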

But just because it’s open source doesn’t mean you have to self-host. If you find yourself spending too much time trying to figure out Airflow’s correct configuration and not enough time actually delivering useful data sets, then MWAA (Amazon Managed Workflows for Apache Airflow) is likely a good choice (if you like using Airflow). I have helped several clients set up MWAA or its GCP counterpart, Cloud Composer, either of which can give you a fully running Airflow instance with a few clicks.

Obviously, you lose some flexibility with a managed version, but thus far, most clients I have moved to managed Airflow have been able to work within the limitations that come from not self-hosting. Of course, for every client I have set up on managed Airflow, there is another I have helped spin up a self-hosted version.

Overall, this is just the tip of the iceberg of useful cloud services that you’ll likely learn as a data engineer.

Now, some people would point out that I didn’t reference Redshift, for example, which is AWS’s data warehouse service. I also didn’t reference EMR, RDS (although you’ll likely just be connecting to said databases), AWS Database Migration Service, AWS Glue, and several more, which I’ve included in the image below. I have used all of these to help clients manage their data flows.

And, of course, this is just AWS; both Azure and GCP offer comparable services such as Cloud Composer, BigQuery, all the IAMs, and Azure Functions, just to mention a few.

The key is not to get overwhelmed. Don’t try to learn all the clouds at once or try to learn every cloud function. Instead, you could take on mini-projects where you just try to load data from your local computer onto S3 and have a Lambda pull that into Redshift or Snowflake.

Even if you don’t end up doing anything else, you’re that much more familiar with it!
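The mini-project mentioned above could start from something like the sketch below. It assumes Redshift as the target and a CSV file with a header row. The function names, table name, and role ARN are hypothetical; `s3_client` is expected to be a boto3 S3 client, whose `upload_file` method does the transfer. Running the generated COPY statement against Redshift (for example from the Lambda) is left out.

```python
def upload_local_file(s3_client, local_path, bucket, key):
    """Upload a local file to S3 and return its s3:// URI."""
    s3_client.upload_file(Filename=local_path, Bucket=bucket, Key=key)
    return f"s3://{bucket}/{key}"


def build_redshift_copy(table, s3_uri, iam_role_arn):
    """Build a Redshift COPY statement for a CSV file with a header row."""
    return (
        f"COPY {table} FROM '{s3_uri}' "
        f"IAM_ROLE '{iam_role_arn}' "
        "FORMAT AS CSV IGNOREHEADER 1;"
    )


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   uri = upload_local_file(boto3.client("s3"), "videos.csv", "my-bucket", "raw/videos.csv")
#   print(build_redshift_copy("youtube_videos", uri, "arn:aws:iam::123456789012:role/my-role"))
```

For Snowflake the loading step would look different (for example Snowpipe or a `COPY INTO` from a stage), but the upload half stays the same.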

With that, I want to say thank you to my readers. See you in the next one!


