Hi, fellow future and current Data Leaders; Ben here 👋

I recently was skimming the data engineering subreddit and came across a post with a title that was hard to ignore. Reading it and the comments reminded me of the importance of due diligence in the data engineering world.

Before doing that, I wanted to let you know that in July, I’ll be hosting several webinars and discussions with data leaders about problems that extend beyond just technology (although we’ll likely touch on some infrastructure topics). So, if you’re interested in growing in data you can find the seven free webinars listed below.

Now, let’s jump into the article!

“I just nuked all our dashboards.”

That’s the title of a heavily discussed post on the data engineering subreddit(Post and link included at the bottom of this section). When I first read it, I assumed the author was going to talk about deleting all their dashboards, no one noticing, and then wondering if their job even mattered.

That wasn’t the case.

Instead, it was a stomach-turning story, summarized perfectly by a commenter:

A data engineer misunderstood their environment, double-checked their logic… with ChatGPT… and accidentally dropped production tables right before the weekend.

It’s the kind of post that makes you queasy, even if you’ve never made the same mistake.

Let me be clear: I’m not here to make fun of them.

If you’ve worked in data you’ve likely done something that wasn’t well thought out where you didn’t think about the consequences.

One Wrong Command Away

When I worked at Facebook, we didn’t have separate environments for testing and production in the data warehouse. All the tables lived side by side. What kept you from dropping something important was usually just a table prefix: TEST_ if you were playing it safe, or in my case, ROGO_TEST.

Every time I dropped a table, I’d read the name out loud like some ritual, hoping it would protect me from accidentally erring and wiping out a production table. Sure, the prod tables could be restored, but I never wanted to find out how.

At other companies, your only guardrail might be the schema name tucked in the top-left of your query editor. That’s it.

The point is that mistakes happen. And they happen even faster in systems that are brittle, complex, and/or poorly documented.

The Importance Of Due Diligence In Data Engineering

One comment on the post really stood out to me: it brought up the idea of due diligence, a term that should be baked into every data engineer’s vocabulary.

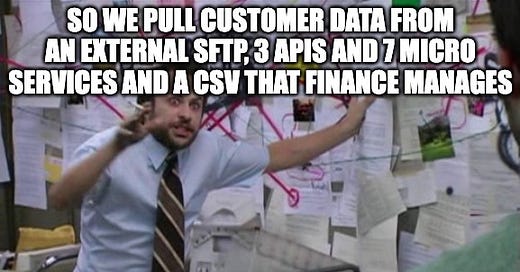

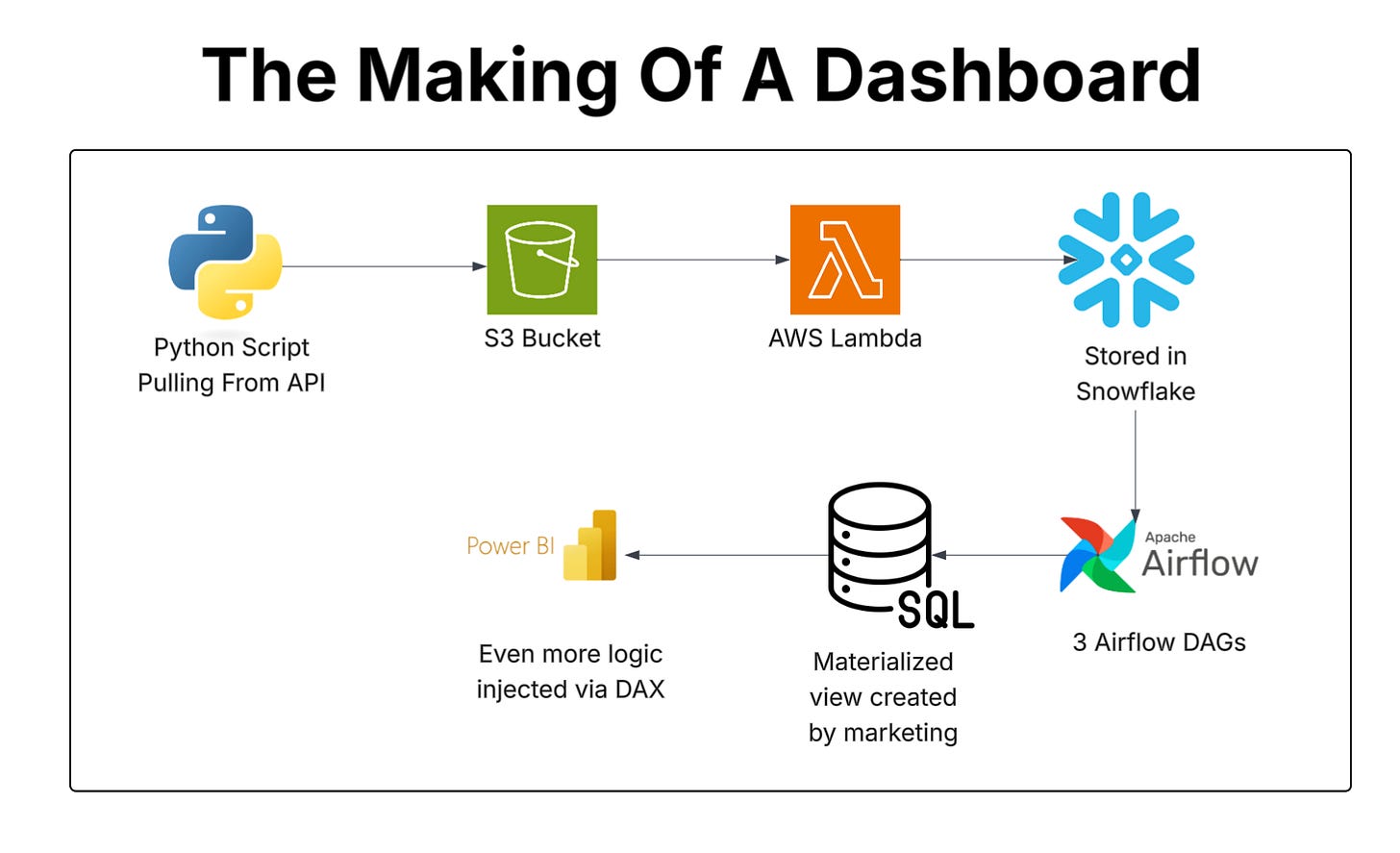

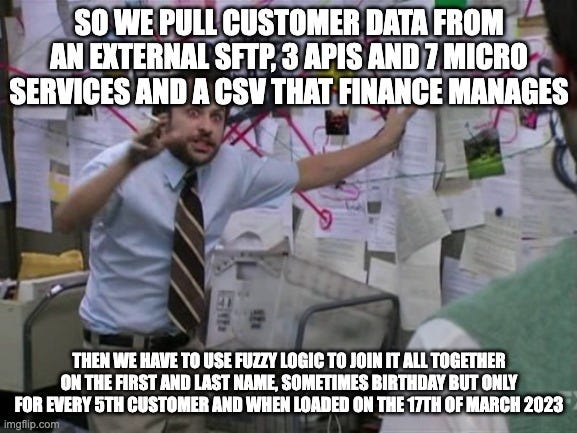

Say you want to tweak a simple field. That change might travel through a pipeline like this:

A Python script hits an API and drops raw JSON into an S3 bucket

An AWS Lambda function ingests it into a staging area

Airflow DAGs push it through five transformation steps

That data joins five other datasets built from similar prior processes in a 5,000-line query

The results then feed a materialized view that marketing processes again

Then it gets surfaced in Power BI, where DAX adds even more layers

And you’d better hope you don’t have to rerun that pipeline, because it takes 12 hours.

Meanwhile, the business is left wondering why even a minor change takes so long to show up on their dashboard.

Should a data stack look like this? Probably not.

Is it the reality for many teams? Absolutely.

That’s why due diligence is crucial, even for a tiny change. As a data consultant, I often spend the first few weeks just tracing how data moves from point A to point B, then C, and somehow all the way to Z.

And even after digging through diagrams and code, there are still gaps! Gaps you can only fill by talking with other teams and engineers.

The Key Person Problem Continues

I’ve written before about the key person problem. So many companies have a single individual who knows exactly how the entire data workflow functions, front to back.

Then they leave. And the new junior data engineer steps in… and accidentally drops all the tables.

This usually isn’t about technical skill per se. You can be well-versed in Airflow, Snowflake, Databricks, Apache Iceberg—you name it.

But there’s all this glue knowledge that exists, another article I’ve been meaning to write about. Glue Knowledge is the stuff we take for granted as engineers: the little tidbits picked up over years of experience. It includes how some obscure data element is created or processed, where a specific bit of data really comes from, and how the spaghetti of data pipelines actually interacts under the hood.

When a senior engineer leaves, a lot of that knowledge goes with them. The business might assume a junior can just pick up the slack, but if you’re losing someone who’s been there for three to five years, you’re also losing a ton of tribal knowledge.

Stuff that probably isn’t captured in the documentation. Stuff an LLM can’t easily learn.

But hey, the business thinks, no big deal—these tools are so easy to use now, right?

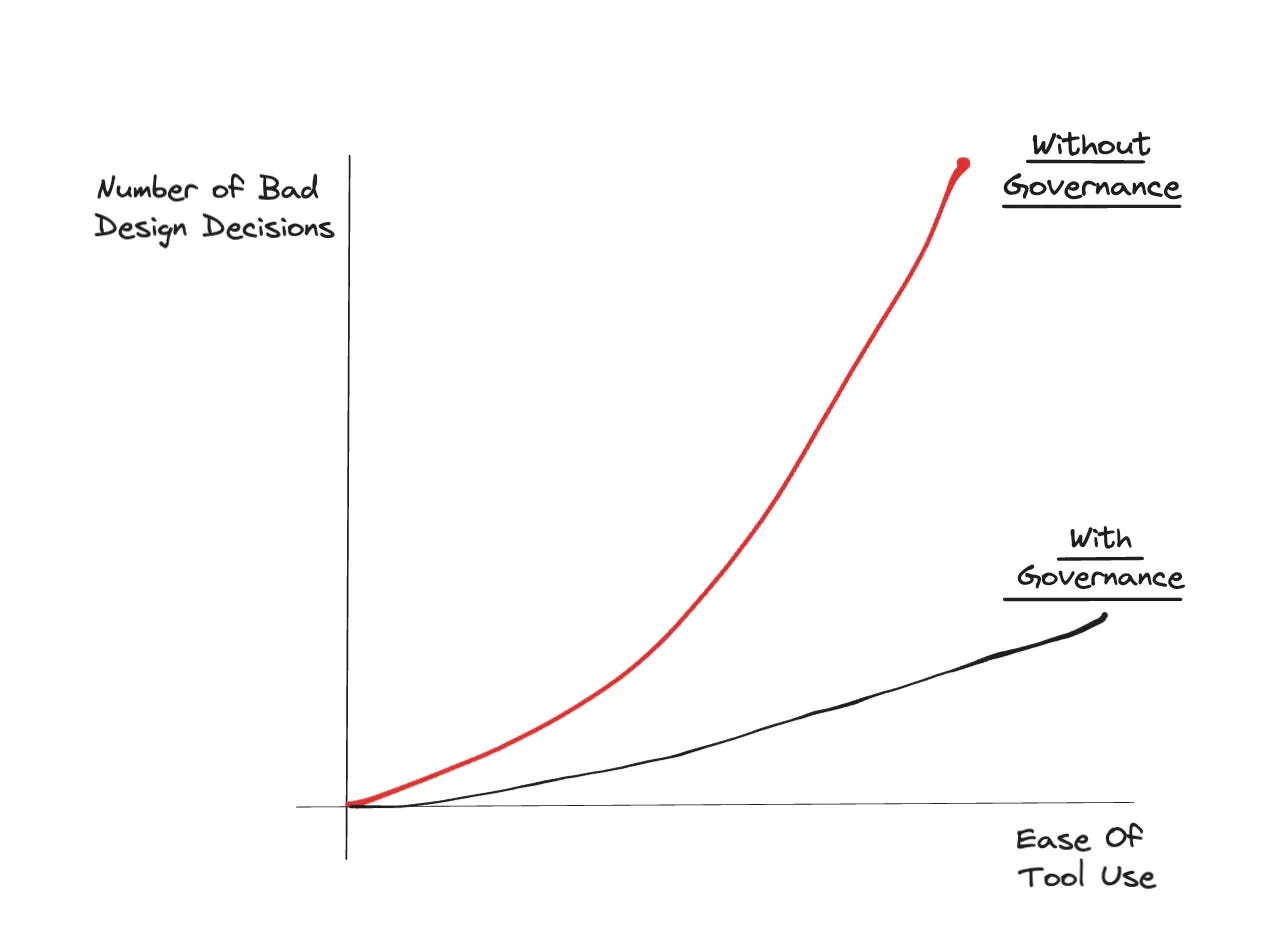

It’s So Easy, It’s Dangerous

One thing that can obscure what data engineers and other knowledge workers actually do is the rise of low-code tools and managed cloud data platforms. Do these solutions remove technical friction?

Yes, they do.

But they don’t remove the need to think. You can try to outsource some of that thinking to ChatGPT, and it might even fill a few gaps. But there’s a real danger in not being able to reason through a workflow yourself.

Low code solutions help you make bad decisions faster.

Just because you can build a data pipeline or a dashboard in ten minutes doesn’t mean you should.

Every new pipeline, every dashboard, every materialized view is a liability:

One more thing that can break

One more place for business logic to get duplicated

One more dependency someone will rely on (and be confused by) when it silently goes stale

These tools reduce the barrier to creation, but they don’t reduce the need for design. They don’t replace careful review. They don’t answer the question:

“What problem are we actually trying to solve here?”

And they certainly won’t save you from making a bad decision—like dropping tables on a Friday without understanding the consequences.

Take Aways

If you're new to data engineering or are just looking for some takeaways, here they are:

Tools can help remove technical friction, but they don't remove the need to think through consequences.

Every new pipeline, dashboard, and bit of code can and will become a liability.

Don't just remove something, like a table or a bit of code, because you can't figure out what it does. Consider Chesterton's Fence. If you can't explain why something exists, it may be a bad idea to remove it, so put a little extra effort into it before going straight to the Scream Test.

A healthy engineering culture with a mix of senior and junior engineers fosters better practices, knowledge sharing, and collective learning. In turn, this can reduce the risk of critical oversights and ensures everyone understands both the "how" and the "why."

Final Thoughts

Maybe someday in the future, we’ll live in a world where data is dumped unstructured into S3 buckets, and you can simply ask your AI agent to scan 100 TBs of random, messy slop and magically get the right answers, aggregating exactly the data points you need.

But for now, and I’d bet for a good while, given the systems I’ve seen, you still have to think through your designs and actions when ingesting and processing data.

The engineer who nuked the dashboards made a mistake.

It was the predictable result of a system that makes it too easy to build, too hard to understand, and too tempting to trust surface-level validations.

We’ve all been one bad assumption or rushed decision away from a Friday night disaster.

As always, thanks for reading!

Don’t Miss Out On These 7 Free Data Leader Webinars Coming Up In July

What Every Data Leader Gets Wrong About Optimization with Paul Stroup

Building Streaming Pipelines for Real-Time Analytics with István Kovács

From Reactive to Proactive: Managing Stakeholders to Unlock Strategic Data Work with Celina Wong

Accelerating Delivery: Augmenting IT Teams with Professional Services with Jody Hesch

Integrating ML Workflows Into Data Pipelines with Jeff Nemecek

Beyond the First Use Case - Building Data Platforms and Processes for Long-Term Adoption with Patrick Taggart

Join My Technical Consultants Community

If you’re a data consultant or considering becoming one then you should join the Technical Freelancer Community! We have over 1700 members!

You’ll find plenty of free resources you can access to expedite your journey as a technical consultant as well as be able to talk to other consultants about questions you may have!

Articles Worth Reading

There are thousands of new articles posted daily all over the web! I have spent a lot of time sifting through some of these articles as well as TechCrunch and companies tech blog and wanted to share some of my favorites!

Apache Iceberg Time Travel Guide

Apache Iceberg is a table format built for large-scale analytics. It brings ACID transactions, schema evolution, and time travel to data lakes. Unlike traditional data lakes, Iceberg tables maintain a complete history of changes, allowing users to query historical versions without restoring backups or creating new tables.

Time travel in Iceberg enables:

Point-in-time queries: Retrieve data as it existed at any past snapshot.

Change tracking: Compare historical data versions to analyze trends.

Rollback and recovery: Revert to an older snapshot to fix errors.

Audit and compliance: Ensure data integrity by querying past states.

This guide explores how to use time travel with Apache Iceberg in PySpark, demonstrating real-world scenarios for data engineers.

Just getting started with Apache Iceberg? Check out our beginner's guide here.

From IC to Data Leader: Key Strategies for Managing and Growing Data Teams

There are plenty of statistics about the speed at which we are creating data in today’s modern world. On the flip side of all that data creation is a need to manage all of that data and thats where data teams come in.

But leading these data teams is challenging and yet many new data leaders get very little training. Instead they are just thrown into the mix.

There is just the expectation that they will do a good job because we were a good individual contributor.

So I wanted to start to put together a list of what you need to know and do to be successful as a leader in the data space.

This ranges from taking even more time to understand the business to being intentional on how you hire and place talent.

So if you’re thinking of leading a data team or perhaps already are this is for you!

End Of Day 185

Thanks for checking out our community. We put out 4-5 Newsletters a month discussing data, tech, and start-ups.

If you enjoyed it, consider liking, sharing and helping this newsletter grow!

I’d just add that the issues you highlight are endemic to all software development, not just to data engineering.

Wow, quoting Chesterton in a data article? Amazing! Nice work.

Now the question - isn't separation of duties the simple governance solution?

Great article, thanks!

ZH