Is everyone’s data a mess?

Recently, I came across a post in the data engineering subreddit asking exactly that question.

The answer is yes, but no.

Having seen data infrastructure at FAANG companies, enterprises, start-ups, and every kind of company in between, I can say that all companies have to make concessions, and those concessions build up and become messy over time.

So let’s discuss some of the causes of data infrastructure becoming messy and how some companies are trying to deal with it.

It All Starts At The Source

Many of the woes that data teams suffer start at the source, and it’s not just that source data can be inaccurate. Inaccurate data is, however, generally the first issue most new data engineers and data scientists notice. Here are some more fleshed-out points on where data sources can go wrong.

Inaccurate Data - There are so many ways bad data can enter systems. It could be at the software level, where the code itself creates inaccurate data, or users could enter the wrong data. I always use the example of state abbreviations because I worked at a company where we found that 1% of our state abbreviations did not exist. They represented neither states nor territories in the US, and the company only operated in the US. Thus, basic data quality checks and systems need to be put in place.
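A basic check for the state-abbreviation problem might look like the minimal Python sketch below. It assumes records arrive as dictionaries with a hypothetical `state` field and treats the 50 states, DC, and the five inhabited US territories as the only valid codes; your own reference list may differ.

```python
# Two-letter codes for the 50 states, DC, and the five inhabited territories
# (AS, GU, MP, PR, VI). Adjust if your business uses a different list.
VALID_STATE_CODES = frozenset("""
AL AK AZ AR CA CO CT DE FL GA HI ID IL IN IA KS KY LA ME MD
MA MI MN MS MO MT NE NV NH NJ NM NY NC ND OH OK OR PA RI SC
SD TN TX UT VT VA WA WV WI WY
DC AS GU MP PR VI
""".split())


def invalid_state_rate(records, field="state"):
    """Return (share of records with an invalid code, list of the bad values).

    `field` is a hypothetical column name. Values are trimmed and upper-cased
    before comparison, so "ny" counts as valid while None or "XX" do not.
    """
    if not records:
        return 0.0, []
    bad = [
        r.get(field)
        for r in records
        if str(r.get(field) or "").strip().upper() not in VALID_STATE_CODES
    ]
    return len(bad) / len(records), bad


rate, bad_values = invalid_state_rate(
    [{"state": "CA"}, {"state": "ny"}, {"state": "XX"}, {"state": None}]
)
```

In a pipeline, you might fail or alert when the returned share exceeds a threshold you choose, and send the offending values back to whoever owns the source system.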

Multiple Data Sources - If you’ve ever worked with a company trying to capture a process its business manages, you’ll quickly find that the process spans multiple sources: project management, customer management, and just about everything else. This means data on a specific user or employee often exists across all of these sources and needs to be stitched together. In a perfect world, it would all be integrated, but that’s not always the case. Instead, you’ll likely have to figure out ways to join these data sources together accurately.
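As a rough illustration of stitching sources together, here is a minimal Python sketch of a full outer join on a normalized email address. The source names (`crm`, `pm`), the `email` key, and the row shapes are all hypothetical; real systems often lack a clean shared key and need fuzzier matching.

```python
def normalize_key(value):
    """Trim and lower-case a join key; missing values become an empty string."""
    return str(value).strip().lower() if value is not None else ""


def stitch_sources(sources, key_field="email"):
    """Full outer join of several sources on a normalized key.

    sources: mapping of source name -> list of dict rows (hypothetical shape).
    Returns (merged, unmatched): merged maps key -> combined record with fields
    prefixed by source name; unmatched counts rows per source with no usable key.
    If one source has several rows for the same key, the last one wins.
    """
    merged = {}
    unmatched = {name: 0 for name in sources}
    for name, rows in sources.items():
        for row in rows:
            key = normalize_key(row.get(key_field))
            if not key:
                unmatched[name] += 1
                continue
            record = merged.setdefault(key, {key_field: key})
            for field, value in row.items():
                if field != key_field:
                    record[f"{name}.{field}"] = value
    return merged, unmatched


crm_rows = [{"email": " Ana@Example.com", "account_id": 1}, {"email": None, "account_id": 2}]
pm_rows = [{"email": "ana@example.com", "open_tasks": 3}, {"email": "bo@example.com", "open_tasks": 0}]
people, missing_keys = stitch_sources({"crm": crm_rows, "pm": pm_rows})
```

Tracking `unmatched` matters as much as the join itself: rows that cannot be stitched are exactly where reports start to disagree.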

Data Source Changes - One of the biggest complaints many data engineers have is that source systems change without them knowing. This is why there are such strong believers in the concept of data contracts. Even a small change, such as a column’s data type, can take down complex machine learning models that perhaps weren’t built with the most robust expectations and assumptions.
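A data contract can be as simple as an agreed-upon list of fields and types that incoming records are checked against before they reach downstream models. The minimal sketch below assumes a hypothetical orders feed and uses exact type matching, so an `amount` that silently changes from a number to a string is caught (note that this also rejects an integer `amount`, which may be stricter than you want).

```python
# Hypothetical contract for an orders feed: field name -> exact Python type.
ORDER_CONTRACT = {"order_id": int, "amount": float, "currency": str}


def contract_violations(record, contract):
    """Return human-readable problems; an empty list means the record conforms."""
    problems = []
    for field, expected in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        # Exact type match on purpose: isinstance() would accept a bool where
        # an int is expected, because bool is a subclass of int.
        elif type(record[field]) is not expected:
            problems.append(
                f"{field}: expected {expected.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    # Fields the producer added without updating the contract.
    for field in sorted(record.keys() - contract.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


problems = contract_violations({"order_id": 7, "amount": "19.99", "coupon": "X"}, ORDER_CONTRACT)
```

Rejecting or quarantining records with violations at ingestion turns a silent model failure into a visible, attributable error.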

Data Models - Everything really starts with the source system data model, not just in terms of how the data is normalized but also in terms of which columns and values exist. Small changes to a data source can make a massive difference. For example, let’s say your data source allows transactions to be changed after they have occurred. If you only have a created_date field, you can’t really tell what has changed. Adding a last_modified_date field lets you know when you should re-pull data. And this doesn’t even cover the business logic that is often buried in the application itself and thus needs to be replicated in the ETL rather than simply pulling the data in as is.
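To make the last_modified_date point concrete, here is a minimal sketch of an incremental pull that keeps a watermark, using an in-memory SQLite database with a hypothetical `transactions` table. Timestamps are stored as ISO-8601 strings so they compare correctly as text.

```python
import sqlite3


def pull_changed_rows(conn, watermark):
    """Return rows modified after `watermark`, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, amount, last_modified_date FROM transactions "
        "WHERE last_modified_date > ? ORDER BY last_modified_date",
        (watermark,),
    ).fetchall()
    # Advance the watermark to the latest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# Demo against a hypothetical transactions table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions "
    "(id INTEGER PRIMARY KEY, amount REAL, created_date TEXT, last_modified_date TEXT)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T09:00:00", "2024-01-01T09:00:00"),
        (2, 25.0, "2024-01-02T09:00:00", "2024-01-02T09:00:00"),
    ],
)
first_batch, watermark = pull_changed_rows(conn, "1970-01-01T00:00:00")

# Transaction 1 is edited after the fact. created_date is unchanged, so only
# last_modified_date reveals that it needs to be re-pulled.
conn.execute(
    "UPDATE transactions SET amount = 12.0, "
    "last_modified_date = '2024-01-05T10:00:00' WHERE id = 1"
)
second_batch, watermark = pull_changed_rows(conn, watermark)
```

A strict `>` comparison can miss rows committed later with exactly the watermark timestamp; one common mitigation is to re-pull a small overlap window and deduplicate on the primary key.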

It all starts with the source. But from there, we data people can do plenty to make the end systems far messier. Truthfully, each problem just builds on the last.

SharePoint Lists, Google Sheets, and CSVs

When I started at Facebook, we could create dynamic lookup tables that were managed through a simple UI and fed into a MySQL table. It was a great way to manage the kind of table you only need to update every so often, and our pipelines could easily pick these tables up. There was no need to write custom code to read from a Google Sheet that anyone could edit, or to worry about someone accidentally inserting data incorrectly. But then, one day, the tool was sunset.

Suddenly, analysts needed another way to update the VLOOKUP-like tables behind their dashboards without manually loading the data. Google Sheets processes started turning up everywhere.

And before Facebook, I was used to SharePoint lists serving the same purpose.

Although this works, it poses a lot of risks. What if the analyst or operations person who manages the sheet leaves? Do you think they’ll remember to tell their replacement to keep updating it?

Also, what's the chance something goes wrong, like someone adding a new column?

There are just so many opportunities for failure. But at the same time, the other choice is to wait three months for a new lookup table to be added.

Thus, in this case, you’ll need to balance the risk vs. reward trade-off. In the short term, perhaps a custom Google Sheet works. But eventually, it might be worth creating a more reliable process.
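If a Google Sheet does stay in the pipeline for a while, a cheap safeguard is to validate its export before loading it. This minimal sketch assumes the sheet has already been exported as CSV text and uses hypothetical column names; it refuses to load when the header changes (for example, when someone adds a column) or when a lookup key is blank or duplicated.

```python
import csv
import io

# Hypothetical columns the lookup sheet is expected to have, in order.
EXPECTED_COLUMNS = ["region_code", "region_name", "sales_manager"]


def load_lookup(csv_text, expected_columns=EXPECTED_COLUMNS):
    """Parse a CSV export of a lookup sheet into {key: row}, or raise ValueError."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    if header != expected_columns:
        raise ValueError(f"unexpected header {header}; expected {expected_columns}")

    key_column = expected_columns[0]
    lookup = {}
    # Data starts on line 2, after the header (assumes no multi-line cells).
    for line_number, row in enumerate(reader, start=2):
        key = (row[key_column] or "").strip()
        if not key:
            raise ValueError(f"blank {key_column} on line {line_number}")
        if key in lookup:
            raise ValueError(f"duplicate {key_column} {key!r} on line {line_number}")
        lookup[key] = row
    return lookup


regions = load_lookup("region_code,region_name,sales_manager\nNE,Northeast,Ana\nW,West,Bo\n")
```

Failing loudly here is the point: a broken load that someone notices is better than a dashboard quietly joining against a sheet whose columns shifted.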

Easy Doesn’t Mean Better

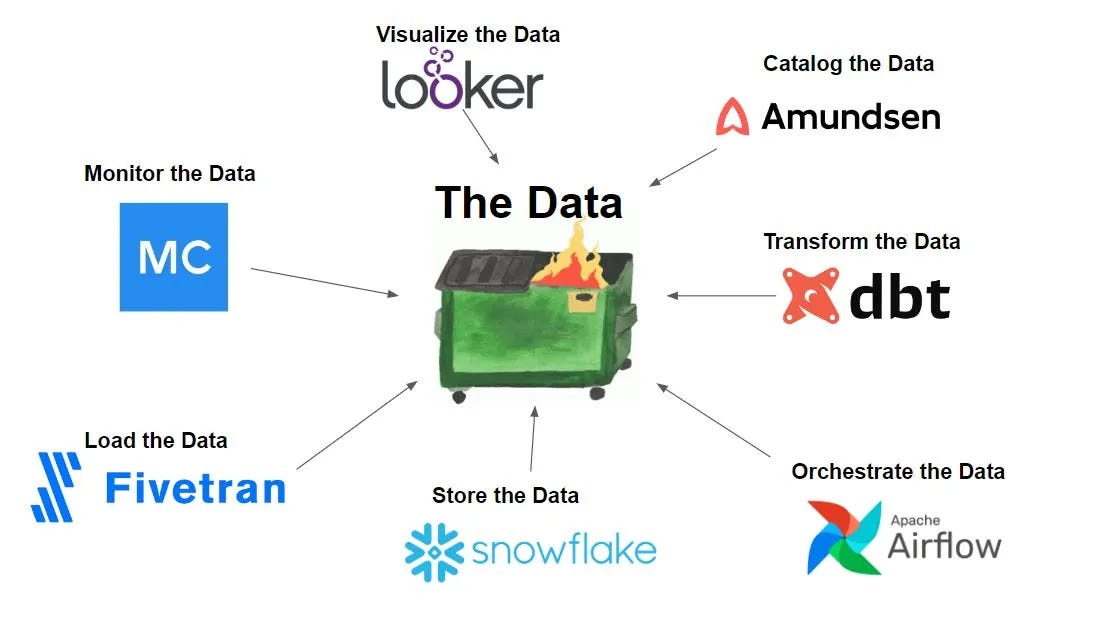

There are a lot of tools that have been developed to make data work easier. It’s part of why I started advising several companies; I see them making data work easier.

BUT.

Easy doesn’t mean better. Building data pipelines faster can lead to insights sooner, but it can also turn your entire data infrastructure into a mess sooner. You’ll start finding spaghetti data pipelines everywhere.

Just because you can spin up data pipelines and workflows quicker doesn’t mean you should, at least not without planning the system as a whole. But that’s often what happens, whether due to poor planning or simply the business’s demand for data. The point is that speed in initial development isn’t the goal for data or technical projects. As software and data engineers, we are trying to develop systems that are both robust and easy enough to change. These are difficult attributes to balance.

I was recently talking with a director of data at a large company whose team has built a system where data engineers manage the core data infrastructure. These core data sets change slowly and are built to be reliable. Data analysts and scientists are then free to build as much on top of them as they want.

Furthermore, the team analyzes query patterns to see whether any other stable data sets should be built based on common queries. In fact, this was a project we discussed taking on at Facebook for the very same reason.
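One simple way to start analyzing query patterns is to count which combinations of tables are queried together. The sketch below assumes you have the query log as a list of SQL strings and uses a deliberately naive regular expression for `FROM` and `JOIN` clauses; it will miss CTEs and subqueries and can be fooled by expressions like `EXTRACT(year FROM ...)`, so treat it as a starting point rather than a SQL parser.

```python
import re
from collections import Counter

# Naive pattern: the identifier (optionally schema-qualified) after FROM or JOIN.
TABLE_PATTERN = re.compile(r"\b(?:from|join)\s+([A-Za-z_][\w.]*)", re.IGNORECASE)


def frequent_table_combinations(queries, min_count=2):
    """Count multi-table combinations across queries, most frequent first."""
    combos = Counter()
    for sql in queries:
        # Sort so "orders JOIN customers" and "customers JOIN orders" match.
        tables = tuple(sorted({t.lower() for t in TABLE_PATTERN.findall(sql)}))
        if len(tables) > 1:  # single-table queries are not join candidates
            combos[tables] += 1
    return [(combo, n) for combo, n in combos.most_common() if n >= min_count]


# Hypothetical query log.
query_log = [
    "SELECT * FROM sales.orders o JOIN sales.customers c ON o.cid = c.id",
    "select count(*) from sales.customers join sales.orders on customers.id = orders.cid",
    "SELECT * FROM sales.orders",
    "SELECT * FROM hr.employees e JOIN hr.teams t ON e.team_id = t.id",
]
candidates = frequent_table_combinations(query_log)
```

Combinations that keep showing up across many analysts’ queries are candidates for a stable, engineer-owned data set.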

So I do believe easy is good, but finding a balance between reliable data sets and speed to insights is important.

More Governance Doesn’t Always Lead to Cleaner Systems

Data governance is everything you do to ensure data is secure, private, accurate, available, and usable. It includes the actions people must take, the processes they must follow, and the technology that supports them throughout the data life cycle. - Google Cloud

The problem with some data governance implementations is that, despite all the good they can do, they can also slow the data and analytics teams down so much that those teams simply build around them.

This is how shadow IT teams form, along with duplicate data warehouses and dashboards whose metrics don’t align with the rest of the business.

And I say this as someone who has seen it happen. I was on the shadow IT team that built processes and dashboards outside the centralized data team. It was my first job out of college, and my director and senior engineer wanted multiple processes and workflows built. So we built them.

A few months later, once the senior engineer left, we had the data warehouse and data governance teams knocking on our door.

Which raises the question: if teams feel they need to go to all the extra trouble of building around data warehouses that were meant to make data easy to access, were the data infrastructure and processes set up correctly from the start? Or did the system itself breed shadow IT and duplicate development?

Overall, all of these systems involve a massive amount of human intervention, which is likely the main driver of the mess on all sides.

Trying To Manage Chaos

There are a lot of reasons why data infrastructure is a mess everywhere. However, I believe solid data teams are constantly balancing what gets the job done with what is maintainable. Part of the challenge is that so much changes: even once you have built a robust set of data pipelines and tables, they may already need updating.

And yet data teams make things happen. A solid data team builds systems designed to manage change, along with processes and workflows that carry that change through, not only to downstream technical assets but also to business stakeholders.

Thanks for reading!

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

What Most People Don't Get About Machine Learning Engineering

When I first entered the data field, there was no such thing as machine learning engineering. The roles available were data engineering, data science, and data analysis. Since then, the machine learning engineer role has rapidly progressed and will only continue to grow in popularity, especially with large language models. However, this popularity has also highlighted, at least for me, the disconnect between what the industry needs and the traditional definition of the role.

Building A Million Dollar Data Analytics Service

Some companies focus 100% on selling data insights. I’m not talking about vendors that promise you self-service analytics without telling you how much work it will take to get there. I mean companies that take raw data and provide metrics, machine learning APIs, and insights on the other side. Hundreds of these companies exist, and they span every industry.

End Of Day 91

Thanks for checking out our community. We put out 3-4 newsletters a week discussing data, tech, and start-ups.
