Companies continue to look for methods to gain near-real-time access to their data for analytics. Honestly, this has been ongoing since I got into the data industry a decade ago…and well before that.

One possible choice is a method called change data capture, also known as CDC. I have seen companies employ multiple ways to use CDC or CDC-like approaches to pull data from databases like Postgres or MongoDB.

This can range from using triggers to reading logs.

Of course, this focuses on the analytical component as many companies use CDC to replace or supplement traditional ETL/ELT.

But CDC can also be a great way to understand your database and its structure. Databases abstract much of what they do to manage and process large volumes of data quickly.

Before going down that rabbit hole, let’s dive into what CDC is and why companies use it.

What and Why CDC

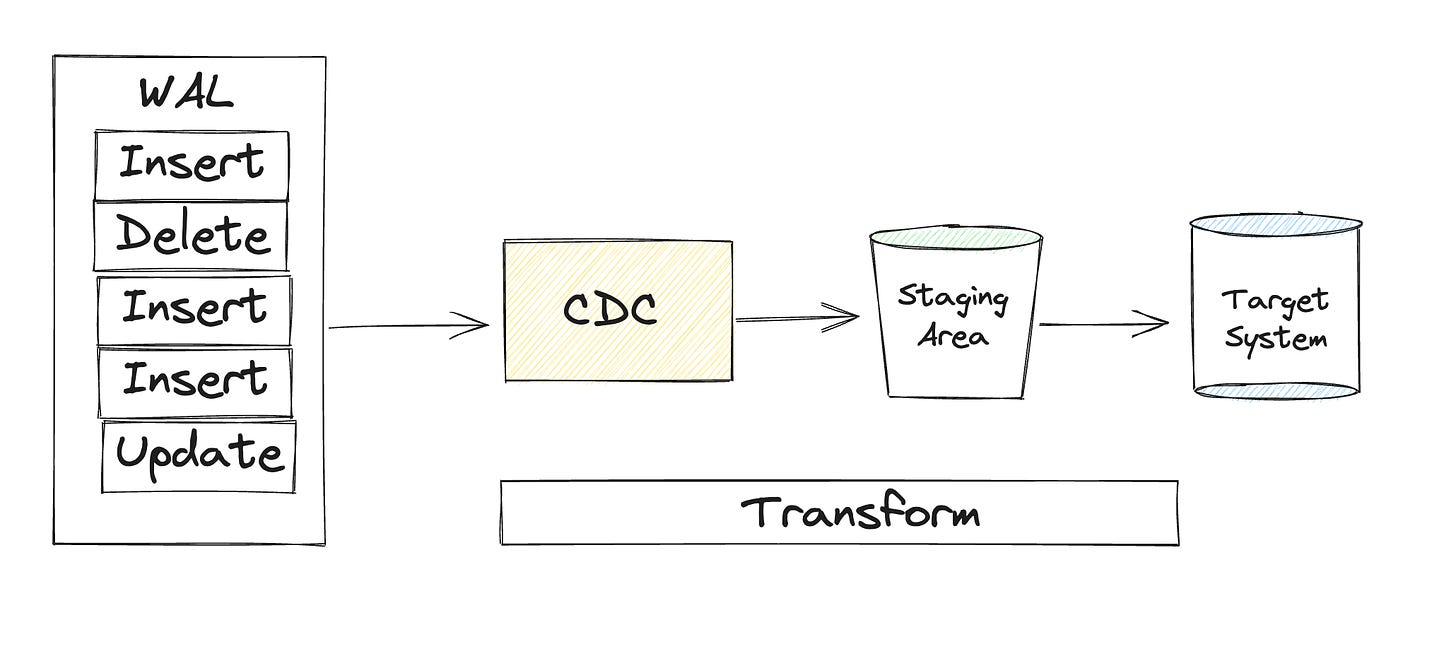

CDC’s goal is to capture the inserts, deletes, or updates occurring in a database.

In many databases, these changes are written to the write-ahead log. When these changes occur, there then needs to be a system that either pushes or pulls the data into your target analytical storage system.

That’s it.

You can stop reading this article.

Ok, in all seriousness.

Let’s go over a few ways CDC might be implemented.

Log-Based CDC

In my experience, I have seen several CDC implementations. The first is using the WAL; in this approach, the CDC tool (or service) monitors the transaction or redo logs generated by the source database.

These logs contain a sequential record of all changes made to the data, including the specific modified data and the type of operation performed (insert, update, delete). The CDC tool continuously reads and analyzes these logs, identifying and capturing the changes as individual events.

Once it captures these changes, the CDC tool applies the necessary transformations and formatting and then propagates the changes to the target system or systems. This replication process can occur in real-time or near real-time, ensuring that the target systems are updated with the latest data changes from the source.

Trigger-Based CDC

Another common approach to implementing CDC is using a trigger. This is pretty straightforward to understand if you’ve worked with databases. In this method, you use database triggers, which are like stored procedures that are executed automatically in response to specific data modification events.

When a data change occurs in the source database the corresponding trigger is fired.

Boom.

The data is now in your data warehouse or data lake.

Of course, both of these methods assume that you have some system that can also ensure that all the data that is processed from your source makes it to the destination while also scaling.

That's easier said than done.

So besides the what, the more important question is, why.

Why Use CDC

Throughout the history of data engineering, batch data processing was the way to go.

It’s easy to implement, easy to understand, and honestly answers most of the data questions and problems that are thrown our way.

But there are plenty of reasons data teams may look to CDC to try and either replace or supplement their current data processes.

Here are just a few:

Real-time operations - CDC enables real-time streaming of data changes. Instead of relying on batch processing or periodic updates, CDC captures and propagates individual data changes as they occur. This real-time data can then be implemented into real-time operations. I will provide a few examples below. But commonly this involves managing live operations more effectively or automating decision-making.

Historical data preservation - Many ETLs simply take a snapshot of the current dataset at a specific time, usually midnight. This means that if there were any changes to a row outside of that time period, there is some chance the data might get lost. CDC, on the other hand, maintains a historical record of data changes.

Reduced impact on system resources - The key principle of CDC is that it transfers data in tiny increments rather than bulk loads. This allows it to work in real time and can significantly reduce the impact on system resources that would otherwise be over-consumed during bulk loading. There is always a trade-off here like any other engineering decision. So this is somewhat dependent on how you’ve set up your databases.

Some Terms And Tech You’ll Need To Know

Before diving into a few solutions I have used I wanted to cover write ahead logs and Debezium as you’ll hear both of these terms thrown around a lot when referring to CDC.

Write Ahead Logs - WAL

The main functionality of a write-ahead log can be summarized as:

Allow the page cache to buffer updates to disk-resident pages while ensuring durability semantics in the larger context of a database system.

Persist all operations on disk until the cached copies of pages affected by these operations are synchronized on disk. Every operation that modifies the database state has to be logged on disk before the contents on the associated pages can be modified

Allow lost in-memory changes to be reconstructed from the operation log in case of a crash.

Source: Database Internals : a deep dive into how distributed data systems work

A write-ahead log (WAL) is a crucial component of many database systems that ensures that the data inside of a database is ACID (Atomicity, Consistency, Isolation, Durability).

The main idea of the WAL is that changes to data are first recorded in this log before they are applied to the actual database.

Why?

In case of a database crash or a power failure, the system can use the WAL to "replay" the recorded transactions upon restart, thereby recovering the database to a consistent state.

It's important to note that the specifics of how the write-ahead log works can depend on the particular database system. For example, in PostgreSQL, the WAL is a set of log files containing information about all changes made to the database's data files. This can include changes to table data and to the database's schema.

One of the reasons I do enjoy the concept of CDC is that it can be a great gateway into helping new engineers gain an understanding of how some databases actually work.

Debezium

Debezium is a powerful, open-source distributed platform that facilitates the creation of robust streaming CDC pipelines. This system is typically used in conjunction with the Apache Kafka streaming framework, although its use is not strictly mandatory. Debezium streamlines the process of connecting database changes, such as Postgres, to Kafka by significantly reducing the need for extensive manual coding.

These changes, also known as events, are then transported into Debezium, propelling them into Kafka in real-time. One of Debezium’s key strengths is its ability to maintain the exact sequence of events as they were generated. This is crucial in applications where the order of transactions matters, such as financial or inventory management systems.

Utilizing Debezium for CDC can be difficult since it often requires Kafka, Kafka Connect, and ZooKeeper to be running. In turn, if you’re a data engineering or analytics engineering team, you may need help from your DevOps team or a third party to spin it up successfully.

Solutions I have Implemented For Clients

Debezium - One of my earlier projects involved streaming logs for a CI/CD platform to be processed for analytics. In order to keep up with the sheer volume of data we used Debezium to connect to the Postgres instance. Actually this article does a good job of covering the basics for those that want to learn more.

Estuary - I recently implemented Estuary for a telecom client as it was both easy to use and affordable. Unlike many other solutions, there really isn’t any set-up so it’s very much a point and click solution(although they do have a free self-managed option). They also had no problem processing the amount of data we needed.

Streamsets - I came across Streamsets two years ago and helped a client implement. They needed to have real-time information about what was happening in their multiple manufacturing sites and had been trying another open-source solution out but it was too slow.

Despite all these fancy terms and solutions, don't let CDC scare you. It can feel like a complicated process (which it can be), but overall, between new tools coming out attempting to simplify the process and the fact that not every workflow needs to be near-real time, you may not need to spend too much time on it.

Capturing Change

The term change data capture can occasionally come off a little “market-y.” But it also does a good job of describing what is occurring in the process. If data changes, CDC processes will capture it.

How companies implement CDC may vary, but the end goal is often similar. They’d like to be making some form of near-real-time analytics. This generally fits best with companies that are either making live operations decisions or have automated the decision-making process.

Of course, there are other reasons, such as needing to improve general performance. Overall, change data capture will likely continue to grow in popularity as new solutions and open source projects make it more approachable.

What Is Data Engineering - Why Is Data Engineering Important?

Articles Worth Reading

There are 20,000 new articles posted on Medium daily and that’s just Medium! I have spent a lot of time sifting through some of these articles as well as TechCrunch and companies tech blog and wanted to share some of my favorites!

The Scoop #47: Datadog’s $65M/year customer mystery solved

Datadog’s mysterious $65M customer. In an earnings call, the observability company shared that a very large customer has reduced its spending – a whopping $65M contract. The internet has been speculating ever since about whether that customer is Coinbase. I talked with engineers at the crypto business to find the truth.

7 Data Engineering Projects To Put On Your Resume

Starting new data engineering projects can be challenging. Data engineers can get stuck on finding the right data for their data engineering project or picking the right tools.

And many of my Youtube followers agree as they confirmed in a recent poll that starting a new data engineering project was difficult.

Here were the key challenges they called out.

Identifying suitable data sets for the project.

Selecting the appropriate tools to employ.

Determining the subsequent steps once the data is acquired.

So to help inspire you I have collected several examples of data engineering projects that should help drive you forward.

End Of Day 83

Thanks for checking out our community. We put out 3-4 Newsletters a week discussing data, tech, and start-ups.

Great article. Any thoughts on using AWS DMS? I'm working through an implementation using it now.