Will Snowflake Win In The Cloud Data Warehouse Race?

And Resume Reviews By A FAANG Engineer

Scrolling through LinkedIn, I continue to find other data engineers who echo my own sentiment when it comes to cloud data warehouses.

That is to say that Snowflake and BigQuery are dominating the market.

But here is the thing: according to Slintel, Amazon Redshift is still dominating the market with a 26.36% share. (Of course, it doesn’t take much searching to find completely different numbers and rankings on different sites for the same few products.)

So perhaps that point is moot.

What isn’t moot is the undercurrent among data professionals who have had a chance to use Snowflake.

Many seem to enjoy it.

The question is:

Why?

What makes Snowflake unique compared to all the other data warehouse options? I find that Snowflake always seems to be ahead in terms of features. In addition, Snowflake works the way I think a data warehouse should work. Most cloud data warehouses have many nuances, and developers need to be pretty comfortable with them to really take advantage of their performance-improving attributes.

What Is Snowflake?

Snowflake is a cloud data platform. To be more specific, it’s the first cloud-built data platform. Its architecture allows data specialists to create not only data warehouses but also cloud data lake-houses, because it can manage both structured and unstructured data easily.

Because Snowflake is based in the cloud, developers can take advantage of elasticity and scalability without worrying about things like high up-front costs, performance, or the complexity of managing the system.

One Snowflake Feature I Really Enjoy - Tasks

An old-fashioned way to create ETL jobs was to write a stored procedure and then set up some form of external code, a cron job, or SQL Server Agent to run said procedure.

But why?

Why couldn’t we just schedule the stored procedure?

Well, Snowflake has the answer with tasks. Snowflake tasks allow data engineers to run queries or stored procedures at specific times using a cron-like schedule, without external code or technologies.
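To make that concrete, here is a minimal sketch of what scheduling a stored procedure with a task can look like. It is a small Python helper that only builds the SQL text; the task name, warehouse name, and procedure (nightly_sales_load, etl_wh, load_sales) are hypothetical placeholders I made up for illustration, and you would run the resulting statements through whatever Snowflake client you already use.

```python
from typing import List


def build_task_sql(
    task_name: str,
    warehouse: str,
    cron: str,
    procedure_call: str,
    timezone: str = "UTC",
) -> List[str]:
    """Return SQL statements that create and start a scheduled Snowflake task.

    All argument values are hypothetical placeholders supplied by the caller.
    """
    create_stmt = (
        f"CREATE OR REPLACE TASK {task_name}\n"
        f"  WAREHOUSE = {warehouse}\n"
        f"  SCHEDULE = 'USING CRON {cron} {timezone}'\n"
        "AS\n"
        f"  CALL {procedure_call};"
    )
    # New tasks start out suspended, so a second statement turns the schedule on.
    resume_stmt = f"ALTER TASK {task_name} RESUME;"
    return [create_stmt, resume_stmt]


if __name__ == "__main__":
    # Hypothetical example: call load_sales() every day at 06:00 UTC.
    for stmt in build_task_sql("nightly_sales_load", "etl_wh", "0 6 * * *", "load_sales()"):
        print(stmt)
        print()
```

The cron expression uses the usual five fields (minute, hour, day of month, month, day of week) followed by a time zone, so the schedule lives next to the procedure instead of in a separate scheduler.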

So Why Use Snowflake?

Cloud Agnostic

Snowflake is not limited to one specific cloud provider. Instead, companies can seamlessly scale their data warehouse across Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

This means companies don’t need to spend time setting up hybrid cloud systems.

Performance and Speed

Snowflake is built for performance, starting with the underlying infrastructure, which is built to run analytical queries. On top of that, the elastic nature of the cloud means that if you want to load data faster or run a high volume of queries, you can scale up your virtual warehouse to take advantage of extra compute resources.
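As a rough illustration of scaling up a virtual warehouse, the sketch below builds the ALTER WAREHOUSE statement for a resize. The warehouse name etl_wh is a hypothetical placeholder, and the list of sizes is deliberately limited to a handful of the smaller ones rather than everything Snowflake offers.

```python
# A small, illustrative subset of warehouse sizes; larger ones exist.
ALLOWED_SIZES = ("XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE")


def build_resize_sql(warehouse: str, size: str) -> str:
    """Return the SQL statement that resizes a Snowflake virtual warehouse."""
    normalized = size.upper()
    if normalized not in ALLOWED_SIZES:
        raise ValueError(f"Unsupported size in this sketch: {size!r}")
    return f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = '{normalized}';"


if __name__ == "__main__":
    # Hypothetical pattern: scale up before a heavy load, scale back down after.
    print(build_resize_sql("etl_wh", "large"))
    print(build_resize_sql("etl_wh", "small"))
```

Scaling back down after the heavy work is done matters just as much as scaling up, since a larger warehouse consumes credits faster for as long as it runs.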

User-friendly UI/UX

Snowflake’s UI/UX is easy to use and also very feature-rich. It’s easy to find old queries you have run, or even other users’ queries that are running (depending on your access level).

There is also a whole host of other features that are a button click away rather than hidden behind settings and configuration drop-downs that you may not even know exist. This makes Snowflake very user-friendly, and I often find that analysts pick it up very easily.

Reduced Administration Overhead

Cloud services often require less administration overhead because companies don’t need to manage hardware and can often take advantage of scaling. In addition, Snowflake is a SaaS product, which means set-up, updates, and general management are mostly handled by Snowflake and not your company.

Much of this management and scaling can be done by anyone with the proper rights, without much knowledge of servers, command lines, or coding.

This isn’t to say that you should just quickly click around and switch server sizes. You may find yourself suddenly paying a much higher bill.
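To see how quickly that bill can grow, here is a back-of-the-envelope sketch. It assumes the commonly published pattern where each step up in warehouse size doubles the credits consumed per hour (starting at 1 credit per hour for the smallest size), and it uses a made-up price of $3.00 per credit. Both are assumptions for illustration, so check your own contract and Snowflake's current pricing before relying on the numbers.

```python
# Assumed credits consumed per hour of running time, by warehouse size.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def estimate_compute_cost(size: str, hours_running: float, price_per_credit: float) -> float:
    """Estimate compute cost as credits per hour * hours * price per credit."""
    return CREDITS_PER_HOUR[size] * hours_running * price_per_credit


if __name__ == "__main__":
    hours = 8 * 30   # hypothetical: warehouse runs 8 hours a day for 30 days
    price = 3.00     # hypothetical price per credit in dollars
    for size in ("MEDIUM", "XLARGE"):
        print(size, estimate_compute_cost(size, hours, price))
```

Under these assumptions, going from MEDIUM to XLARGE for the same running time multiplies the compute cost by four, which is why a casual click on a bigger size deserves a second thought.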

On-Demand Pricing

Snowflake offers on-demand pricing, meaning that you pay only for the amount of data you store and the compute hours/minutes you use. Unlike a traditional data warehouse, Snowflake also gives you the flexibility to easily set an idle timeout so you don’t pay while the warehouse is inactive.
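The idle-time setting is controlled per warehouse through its auto-suspend and auto-resume properties. Here is a minimal sketch that builds the statement; the warehouse name reporting_wh and the 60-second timeout are hypothetical values, and the right timeout depends on your own query patterns.

```python
def build_auto_suspend_sql(warehouse: str, idle_seconds: int) -> str:
    """Return SQL that suspends a warehouse after idle_seconds of inactivity
    and lets it resume automatically when the next query arrives."""
    if idle_seconds < 0:
        raise ValueError("idle_seconds must not be negative")
    return (
        f"ALTER WAREHOUSE {warehouse} SET "
        f"AUTO_SUSPEND = {idle_seconds} AUTO_RESUME = TRUE;"
    )


if __name__ == "__main__":
    # Hypothetical example: suspend after one minute without queries.
    print(build_auto_suspend_sql("reporting_wh", 60))
```

A short timeout saves credits on bursty workloads, while a longer one can make sense if queries arrive steadily and you want to avoid frequent suspend and resume cycles.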

Support For A Variety Of File Formats

Data doesn’t just come in CSV, XML, pipe-delimited, and TSV files anymore. Accordingly, Snowflake supports structured data as well as a wide variety of semi-structured formats, including Parquet, Avro, and JSON.

Snowflake Continues To Be A Game Changer

Snowflake gives companies the advantage of using a cloud data warehouse that was developed for the cloud, meaning much of its infrastructure and design is optimized for cloud operations.

Whether it be its natural data lake-house capabilities or its ability to scale easily depending on the query, Snowflake continues to be a game-changer.

Snowflake, I think, just has that “it factor”. It’s got a great UI/UX, making it easy to use, and just keeps implementing features that data engineers and architects want.

Ask A Data Consultant - Office Hours

With every newsletter, I open up a day or two with a few slots for office hours where readers can sign up and ask me questions. I have gotten to answer a lot of great questions so far, and hopefully the answers provided a lot of insight for those who signed up.

Sign Up Below:

Next Open Office Hours

Sign Up For My Next Office Hours on August 5th, 8 AM - 10 AM PT or 5 PM - 7 PM PT

Thanks To The SDG Community

I started writing this weekly update more seriously about 6-7 weeks ago. Since then I have gained hundreds of new subscribers as well as 8 supporters!

And all I can say is, Thank You!

You guys are keeping me motivated.

Every read, comment, like, and financial subscription is amazing, and I really appreciate all of you.

If you want to help support this community, consider clicking the link below.

Video Of The Week: Reviewing Your Resumes. We Are At Well Over 6,000 Subs!

Many of you have asked me to review your resumes.

As a thank you for helping me blow past 5k and for being active members of the community, I wanted to look through them and give some comments. I received multiple resumes, and I do think I reviewed every one that I got (I apologize if I missed any).

Again, I don't think I can say thank you enough.

Honestly, back in March I just wanted to hit 2k and now we are over 6k! So let's keep it up. I have so many videos I want to make and share with you guys!

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

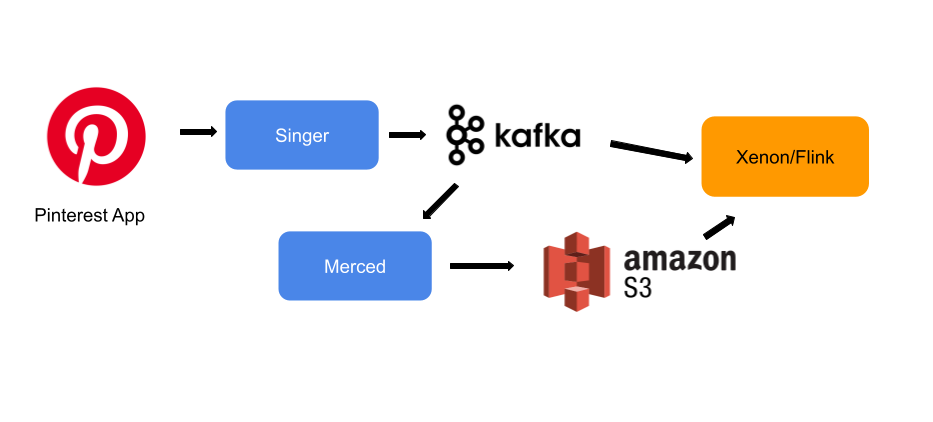

Unified Flink Source at Pinterest: Streaming Data Processing

To best serve Pinners, creators, and advertisers, Pinterest leverages Flink as its stream processing engine. Flink is a data processing engine for stateful computation over data streams. It provides rich streaming APIs, exactly-once support, and state checkpointing, which are essential to build stable and scalable streaming applications. Nowadays Flink is widely used in companies like Alibaba, Netflix, and Uber in mission-critical use cases.

Xenon is the Flink-based stream processing platform at Pinterest. The mission is to build a reliable, scalable, easy-to-use, and efficient stream platform to enable data-driven products and timely decision making. This system includes:

Reliable and up-to-date Flink compute engine

Improved Pinterest dev velocity on stream application development

Platform reliability and efficiency

Security & Compliance

Documentation, User Education, and Community

Query Snowflake using Athena Federated Query and join with data in your Amazon S3 data lake

If you use data lakes in Amazon Simple Storage Service (Amazon S3) and use Snowflake as your data warehouse solution, you may need to join your data in your data lake with Snowflake. For example, you may want to build a dashboard by joining historical data in your Amazon S3 data lake and the latest data in your Snowflake data warehouse or create consolidated reporting.

In such use cases, Amazon Athena Federated Query allows you to seamlessly access the data from Snowflake without building ETL pipelines to copy or unload the data to the S3 data lake or Snowflake. This removes the overhead of creating additional extract, transform, and load (ETL) processes and shortens the development cycle.

In this post, we will walk you through a step-by-step configuration to set up Athena Federated Query using AWS Lambda to access data in a Snowflake data warehouse.

For this post, we are using the Snowflake connector for Amazon Athena developed by Trianz.

Feature Engineering at Scale - Databricks

Feature engineering is one of the most important and time-consuming steps of the machine learning process. Data scientists and analysts often find themselves spending a lot of time experimenting with different combinations of features to improve their models and to generate BI reports that drive business insights. The larger, more complex datasets with which data scientists find themselves wrangling exacerbate ongoing challenges, such as how to:

Define features in a simple and consistent way

Find and reuse existing features

Build upon existing features

Maintain and track versions of features and models

Manage the lifecycle of feature definitions

Maintain efficiency across feature calculations and storage

Calculate and persist wide tables (>1000 columns) efficiently

Recreate features that created a model that resulted in a decision that must be later defended (i.e. audit / interpretability)

3 Heads Of Data And Founders Perspective On Where Data Is Going

2021 is almost halfway over and it seems like hundreds of millions of dollars have gone into investing in data, data start-ups, and machine learning.

In particular, funding has also shifted heavily from just focusing on the data science and machine learning space to the data engineering and data management space.

Of course, if you’re an AI-based data management company, then I am sure you will be rolling in funding.

But, let’s look to see what other data experts have to say.

We asked people from various parts of the data world to provide their insights into what they see for the rest of 2021 and the quickly approaching 2022, whether that be new start-ups, technologies, or best practices.

Let’s see what they had to say.

End Of Day 13th

Picking the right cloud data warehouse is an important decision.

There are so many options, and it does seem like Snowflake is becoming a more popular option, and a great one.

After consulting on many projects, I continue to enjoy working with the product. That’s not to say that it’s perfect or that there aren’t reasons to use other data warehouse solutions, only that I have thoroughly enjoyed the experience.