Which Managed Version Of Airflow Should You Use?

Google Cloud Composer Vs. MWAA Vs. Astronomer - Community Update #27

Over the last three months, I have taken on two migrations that moved companies from manually managing Airflow on VMs to Cloud Composer and MWAA (Managed Workflows for Apache Airflow).

These are two great options when it comes to starting your first Airflow project. They help reduce a lot of issues such as scaling workloads across workers and managing all the other various components required to run Airflow at scale.

Of course, both Cloud Composer and MWAA are services provided by two of the larger cloud providers. But there is a third option called Astronomer.

In this article, I want to discuss some of the benefits and limitations of Cloud Composer and MWAA as well as discuss why Astronomer might be a better fit for many companies.

Why Use A Managed Instance Of Airflow?

Maintenance and management of services and databases often hold many data teams back. We already deal with daily pipeline failures due to the engineering team updating that one column or removing a table altogether without telling us.

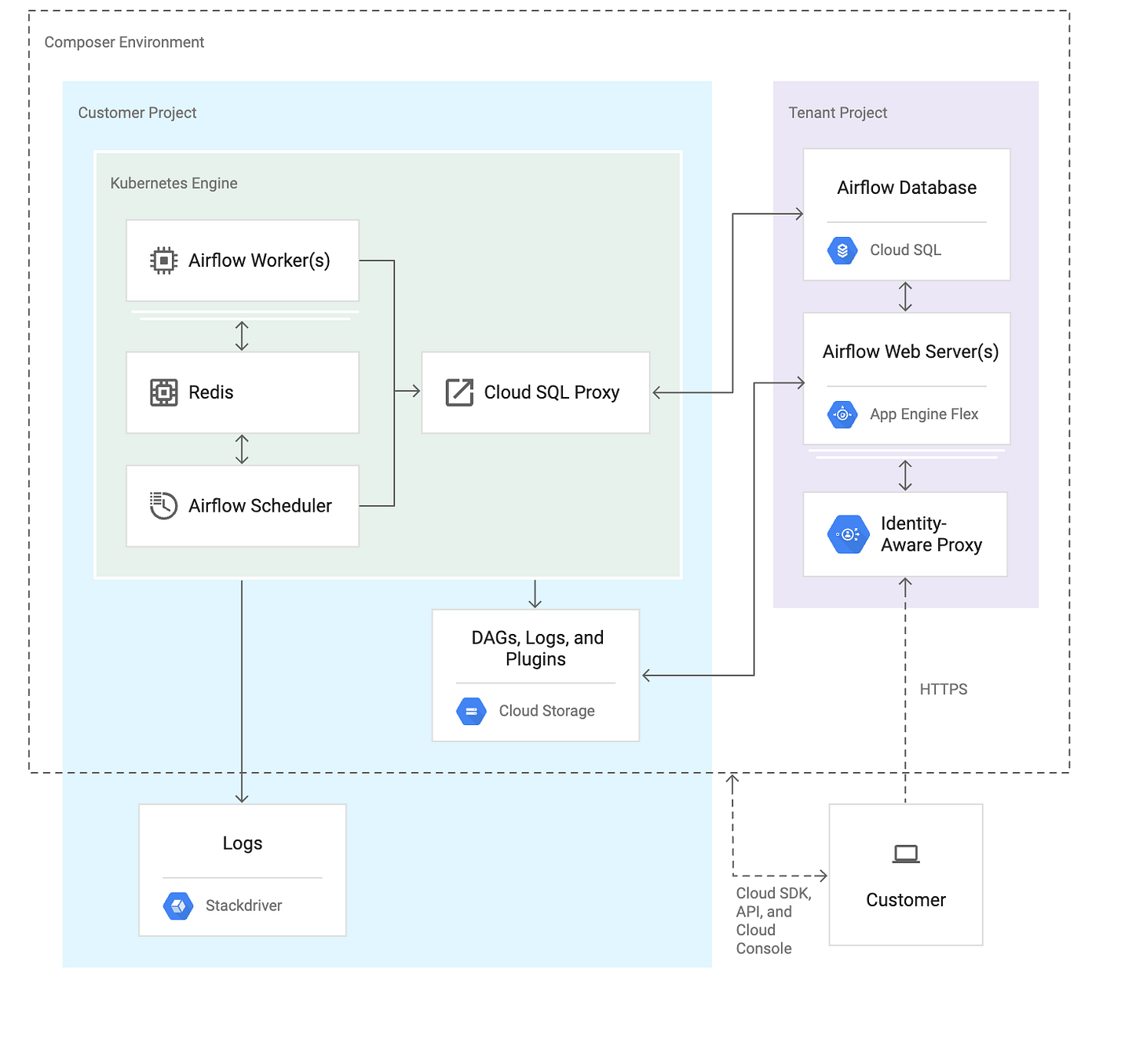

On top of that, managing your own Airflow instance generally means you need a metadata database like Postgres, a message broker like Redis, a logging location like S3, and several other components to manage your workers as they scale.

Each of these components increases technical complexity and the opportunity for failures. If your team is small or just needs to prioritize other work, then managed versions of Airflow are often a good choice.
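
To make that list of components a little more concrete, here is a minimal sketch of the settings a self-managed, Celery-based deployment typically has to supply. The hostnames, password, and bucket name are placeholders made up for illustration. The variable names follow Airflow's `AIRFLOW__SECTION__KEY` convention, but the section that holds the metadata database connection differs between Airflow versions, so treat this as an assumption to check against the configuration reference for the version you run.

```python
from typing import Dict


def self_managed_airflow_env(
    db_host: str = "postgres.internal",   # hypothetical metadata database host
    redis_host: str = "redis.internal",   # hypothetical Celery broker host
    log_bucket: str = "my-airflow-logs",  # hypothetical S3 bucket for task logs
) -> Dict[str, str]:
    """Return the environment variables a self-managed deployment would need."""
    db_path = f"airflow:CHANGE_ME@{db_host}:5432/airflow"
    return {
        # Which executor distributes tasks to workers.
        "AIRFLOW__CORE__EXECUTOR": "CeleryExecutor",
        # Metadata database. Newer 2.x releases read this from [database];
        # older releases use AIRFLOW__CORE__SQL_ALCHEMY_CONN instead.
        "AIRFLOW__DATABASE__SQL_ALCHEMY_CONN": f"postgresql+psycopg2://{db_path}",
        # Message broker and result store used by the Celery workers.
        "AIRFLOW__CELERY__BROKER_URL": f"redis://{redis_host}:6379/0",
        "AIRFLOW__CELERY__RESULT_BACKEND": f"db+postgresql://{db_path}",
        # Ship task logs to object storage instead of local worker disks.
        "AIRFLOW__LOGGING__REMOTE_LOGGING": "True",
        "AIRFLOW__LOGGING__REMOTE_BASE_LOG_FOLDER": f"s3://{log_bucket}/logs",
    }


if __name__ == "__main__":
    for key, value in self_managed_airflow_env().items():
        print(f"{key}={value}")
```

Every entry points at something you have to provision, secure, and keep running yourself. A managed service hides most of this list from you.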

But which managed Airflow service works best for you?

Comparing Composer And MWAA to Astronomer

I want to start by acknowledging that this article may come across as very positive towards one specific product. The truth is, if you’re just getting started, Cloud Composer and MWAA are great.

They can help set up a POC as well as an MVP without needing to set up too many external logistical components or agreements. Just click create an environment.

Where you will notice Astronomer shines is as you set up more complex jobs and need more flexibility. So let’s dive into some of the benefits and challenges you will face with all of these solutions.

Ease Of Set-Up

Without a doubt, setting up MWAA or Cloud Composer is easier than setting up Astronomer. Really.

It’s just a few clicks and you have a fully managed Airflow instance that is easy to scale at your fingertips. You do have to wait about 20-30 minutes for the entire system to spin up.

Astronomer takes a little more effort. But in my experience, it’s still far easier than trying to set up and scale your own VMs.

Overall, what you gain in ease of set-up you will lose in customizability.

What You Lose In Ease Of Set-Up You Gain In Customizability

Solutions are all about trade-offs. What Cloud Composer and MWAA give you in ease of set-up, they take away in flexibility: it is difficult to change much beyond your Python libraries.

I found this out firsthand as I migrated a project over to Cloud Composer. My first question was: how do I update my Docker image?

Of course, there were other issues, such as adding drivers for older databases and other plugins that were less straightforward. What might seem like an easy-to-solve problem, like installing a driver, can become very taxing and require complex workarounds.

At the end of the day your team will need to assess their overall goals in order to know whether they need a lot of flexibility or need to deploy DAGs fast.

Docker Image Limitations

One of the biggest challenges you will run into when working with Cloud Composer or MWAA is that you can’t go into the Docker image that is being created and edit it.

This is fine if your DAGs are very simple and don’t require complex drivers or external dependencies. However, as soon as your DAGs require complex technical dependencies you will likely have to perform a lot of workarounds.

For example, I saw a StackOverflow answer where a developer had to go to the cluster running the VM and manually force certain components to run on it. That kind of defeats the purpose of Docker, since you now have a manual step to remember in future deployments.

Now let’s compare that to Astronomer.

How would you customize your Docker image?

Easy, here is an article on the topic. Yes, Astronomer has a steeper learning curve, but the trade-off is that you can integrate your Airflow instance with other systems in much more complex ways, like integrating with dbt or including an IBM driver file.

All easy to do.
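
As a rough illustration of what owning the image buys you, the sketch below renders a Dockerfile that layers OS packages and a vendor driver file on top of an Airflow base image. The base image tag, package names, driver path, and runtime user are all hypothetical placeholders, not Astronomer's actual defaults, and it assumes a Debian-based image with `apt-get` available.

```python
from typing import Dict, List, Optional


def render_dockerfile(
    base_image: str,
    os_packages: List[str],
    driver_files: Dict[str, str],
    runtime_user: Optional[str] = None,
) -> str:
    """Build Dockerfile text that adds OS packages and driver files to a base image."""
    lines = [f"FROM {base_image}"]
    if os_packages:
        # Installing system packages needs root; assumes a Debian-based image.
        lines.append("USER root")
        packages = " ".join(sorted(os_packages))
        lines.append(
            "RUN apt-get update && apt-get install -y --no-install-recommends "
            f"{packages} && rm -rf /var/lib/apt/lists/*"
        )
    # Copy each driver file from the project directory into the image.
    for source, destination in driver_files.items():
        lines.append(f"COPY {source} {destination}")
    if runtime_user:
        # Drop back to the unprivileged user the base image normally runs as.
        lines.append(f"USER {runtime_user}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(
        render_dockerfile(
            base_image="example.com/airflow-base:tag",  # hypothetical image
            os_packages=["unixodbc", "libaio1"],        # hypothetical packages
            driver_files={"drivers/db2.jar": "/opt/drivers/db2.jar"},
            runtime_user="astro",                       # hypothetical user name
        )
    )
```

The point is only that when you control the image, adding a driver becomes a couple of declarative lines that live in version control, rather than a manual step on a running cluster.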

Executor Limitations

When it comes to how you run your Airflow instance, Executors are a key component in determining how jobs are managed. Choosing the wrong Executor means your system may fail to scale and it won’t be able to run your jobs fast enough.

The problem is that in some cases you might want to use a very specific Executor. Astronomer lets you use the Local, Celery, and Kubernetes executors. In comparison, MWAA only supports the Celery Executor, and Cloud Composer also has many blocked configurations. This limitation has become a major pain point for some companies, which have to create massive workarounds in order to run their jobs the way they need to with their managed service.

In the end, this can be very limiting depending on how you want to deploy your DAGs.
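
If you already know which Executor you need, the comparison above can be turned into a quick lookup. This is a minimal sketch that encodes only what this article states; it is not an authoritative support matrix. Cloud Composer is left unspecified because all I have said about it is that it blocks many configurations.

```python
from typing import Dict, List, Optional, Set

# Executor support as described in this article, not an official matrix.
# None means "not specified here" rather than "supports nothing".
SUPPORTED_EXECUTORS: Dict[str, Optional[Set[str]]] = {
    "Astronomer": {"LocalExecutor", "CeleryExecutor", "KubernetesExecutor"},
    "MWAA": {"CeleryExecutor"},
    "Cloud Composer": None,
}


def services_supporting(executor: str) -> List[str]:
    """Return the services listed above that are known to support the executor."""
    return sorted(
        name
        for name, executors in SUPPORTED_EXECUTORS.items()
        if executors is not None and executor in executors
    )


if __name__ == "__main__":
    print(services_supporting("CeleryExecutor"))      # ['Astronomer', 'MWAA']
    print(services_supporting("KubernetesExecutor"))  # ['Astronomer']
```

Running a check like this before you pick a service is a lot cheaper than discovering the limitation in the middle of a migration.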

Astronomer Works Closely With Airflow

Another benefit of Astronomer is that they focus on Airflow a lot. They contribute heavily to the Airflow code base and work closely with the Airflow team in general.

This leads to many of their employees being experts in Airflow. They work hard to understand the current needs of their end-users and truly have a great understanding of best practices when it comes to Airflow. The difference is that Astronomer is Airflow only.

They aren’t looking to sell you on S3 or Cloud Storage. Instead, they want to figure out how to best deliver Airflow as a service.

In contrast, AWS and GCP focus on everything, meaning their Airflow products will always lag. For example, MWAA is still back on 2.0.1 while Airflow has been updated to 2.2.0, which Astronomer can support.

If you call an AWS solutions architect for advice on how to better set up your MWAA environment, you might just get generalized advice. It’s hard to be a master of every tool and solution AWS has to offer. Compare that to Astronomer, which focuses only on Airflow.

Astronomer stays in lockstep with Airflow updates and is usually ready to support the most recent version of Airflow almost immediately.

When To Use What

MWAA and Cloud Composer are great solutions when the DAGs you want to build are pretty simple. You don’t have to involve too many extra components, and it’s honestly a great place to start your Airflow journey. If you’re trying to build a use case for Airflow or just trying to prove that it works, then go ahead, build it on Composer or MWAA.

Of course, more than likely, as your company grows and you have more complex needs, you might find yourself needing to migrate to Astronomer. Also, if you work in an industry that has tighter regulations, requirements around private PyPI setups, or if it's important to have more fine-grained access and controls then Astronomer shines here as well.

I am a little surprised that AWS and GCP have made it so difficult to update your Docker image or customize much about how your Airflow instance launches. Perhaps they just want to make sure the tools are as optimized as possible.

Also, it is just easier to launch.

Finding the right version of managed Airflow doesn’t have to be hard. Start by looking at what use cases you have now, and what the future holds. You should be able to easily start with a tool like Cloud Composer and then eventually switch to Astronomer. You will lose out on some of the best practices. But on the flip side, you will already have some of your use cases set up and then can work with their team to improve your DAGs as you go.

Video Of The Week - Things I Wish I Knew When I Started As A Data Engineer

Data isn't perfect.

That's just one of many things I wish I knew before I started working as a data engineer.

The same could be said about being an analyst or data scientist.

Articles Worth Reading

There are 20,000 new articles posted on Medium daily and that’s just Medium! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

5 Difficult Skills That Pay Off Exponentially in Programming

If you are a good-grasper and a productive coder, great! You can impress people in no time. Your career graph will be surely remarkable.

But age-old wisdom says that there are things in life that must be taken at a slow pace. They might be difficult to learn.

But when you master them, you command a position no one can take away. When done right, such things create drastically positive changes in your life.

How to Perform Speech Recognition with Python

Speech Recognition, which is also known as Automatic Speech Recognition or Speech-to-Text, is a field that lies in the intersection of Computer Science and Computational Linguistics that develops certain techniques enabling computer systems to process human speech and convert it into textual format. In other words, speech recognition methodologies and tools are used to translate speech from verbal format into text.

The best performing algorithms used in the setting of Speech Recognition utilise techniques and concepts from the fields of Artificial Intelligence and Machine Learning. Most of these algorithms improve over time as they are capable of enhancing their capabilities and performance through the interactions.

Machine Learning Experiment Tracking

At first glance, building and deploying machine learning models looks a lot like writing code. But there are some key differences that make machine learning harder:

Machine Learning projects have far more branching and experimentation than a typical software project.

Machine Learning code generally doesn’t throw errors, it just underperforms, making debugging extra difficult and time consuming.

A single small change in training data, training code or hyperparameters can wildly change a model’s performance, so reproducing earlier work often requires exactly matching the prior setup.

Running machine learning experiments can be time consuming and just the compute costs can get expensive.

End Of Day 27

Thanks for checking out our community. We put out 4 Newsletters a week discussing data, tech, and start-ups.

If you want to learn more, then sign up today. Feel free to sign up for no cost to keep getting these newsletters.

I made a straw poll in the Locally Optimistic Airflow channel about these options. The results were:

A - How is Docker deployed (n=24)

Using Managed Airflow Services 46%

Using Production Docker Images 29%

Using 3rd-party images, charts, deployments 17%

Using Official Airflow Helm Chart 4%

Using PyPI 4%

B - Which managed services used (n=17)

GCP Cloud Composer 47%

Astronomer 35%

AWS MWAA 18%

I was surprised so many are not using managed services!

We're running Airflow inside our Kubernetes cluster, and I tell you, it's not so hard to manage and scale. So we never made a decision to move to Composer.