What Is Databricks Unity Catalog

And Why You'll Be Forced To Migrate

Today we have a guest post from Daniel Beach. What I enjoy about Daniel’s articles is that he’ll go deep into topics.

Whether it’s Polars or Delta Lake.

Or, in the case of this article, Unity Catalog.

With Databricks going all in on Unity Catalog (and forcing everyone else to follow), along with their dominance in the AI and Machine Learning space, it’s probably time you got up to speed on Unity Catalog before you’re left in the corner sorting rocks for the rest of your life.

When it comes to fast-moving technology, it’s easy to get left in the proverbial dust wondering what happened, and Unity Catalog from Databricks is one of those fast-moving tools.

It’s evolving quickly, with new features added almost monthly, and it’s at the core of Databricks. Add their announcement that the Standard Tier is being retired, and it’s all in on Unity Catalog or nothing.

So, today we want to keep you out of the rock-shuffling house, and give you a crash course on …

What is Unity Catalog in the Databricks context?

General features etc.

How to “migrate” workloads to Unity Catalog.

Let’s dive in head first.

Databricks Unity Catalog

What is it???

“Databricks Unity Catalog offers a unified governance layer for data and AI within the Databricks Data Intelligence Platform” - Databricks

As someone who’s used and poked at Unity Catalog, I would say this definition gets at part of what Unity Catalog is but doesn’t capture the full picture.

How so? Unity Catalog has a plethora of other features and concepts besides governance (which is important).

What else can we add to the list?

Governance of data and “objects” (we can get into this later).

End-to-end Machine Learning lifecycle features and products (think MLflow, Model Endpoints, Feature Stores, etc.).

Collaboration tool for all this data (sharing, etc.).

Monitoring and Observability.

And probably more to come, since it’s being developed and extended quickly.

Today, in Part 1, we will mostly try to introduce the concepts of Governance and Permissions.

I think it’s important to think about Unity Catalog as a whole, in the context of Databricks over the years. What made Databricks a big deal in the first place?

I would argue it did what EMR on AWS didn’t do.

Made Spark easy and approachable.

Collaboration and Development made easy for non-engineers (think Notebooks)

Simplicity of infrastructure and development.

Clearly, Unity Catalog was the next logical progression for Databricks as they continued to push their platform and offering forward. It’s no surprise that “Unity Catalog” (an amalgamation of the best Databricks has to offer) is now the new standard.

Just in case you are unfamiliar with Databricks and some of the offerings we’ve been mentioning, below are links to some of these Unity Catalog features and tools for you to read through at your leisure.

Migrating to Unity Catalog

Surprisingly, if you are new to Unity Catalog, probably one of the hardest parts of migrating to it is the Governance part of the job … aka the permissions.

Why is setting up Permissions and Controls the hardest part of Unity Catalog, you ask? Because it requires a lot of high-level design and discussion before you dive into migrating data and setting up the environment.

Think about it like this: in Unity Catalog, object control and governance are the core of what it is and does. This means that before you set up the environment, you need to know exactly what your plan is for how people will access AND interact with the ENTIRE environment.

Making it clearer.

I know this may not make sense to you yet, but let's list some of the core components of a Unity Catalog setup, and you will see what I mean.

Workspace

Catalog

Schemas

Objects (like tables)

Groups

Users

Service Accounts

Permissions (GRANT … who can do what)

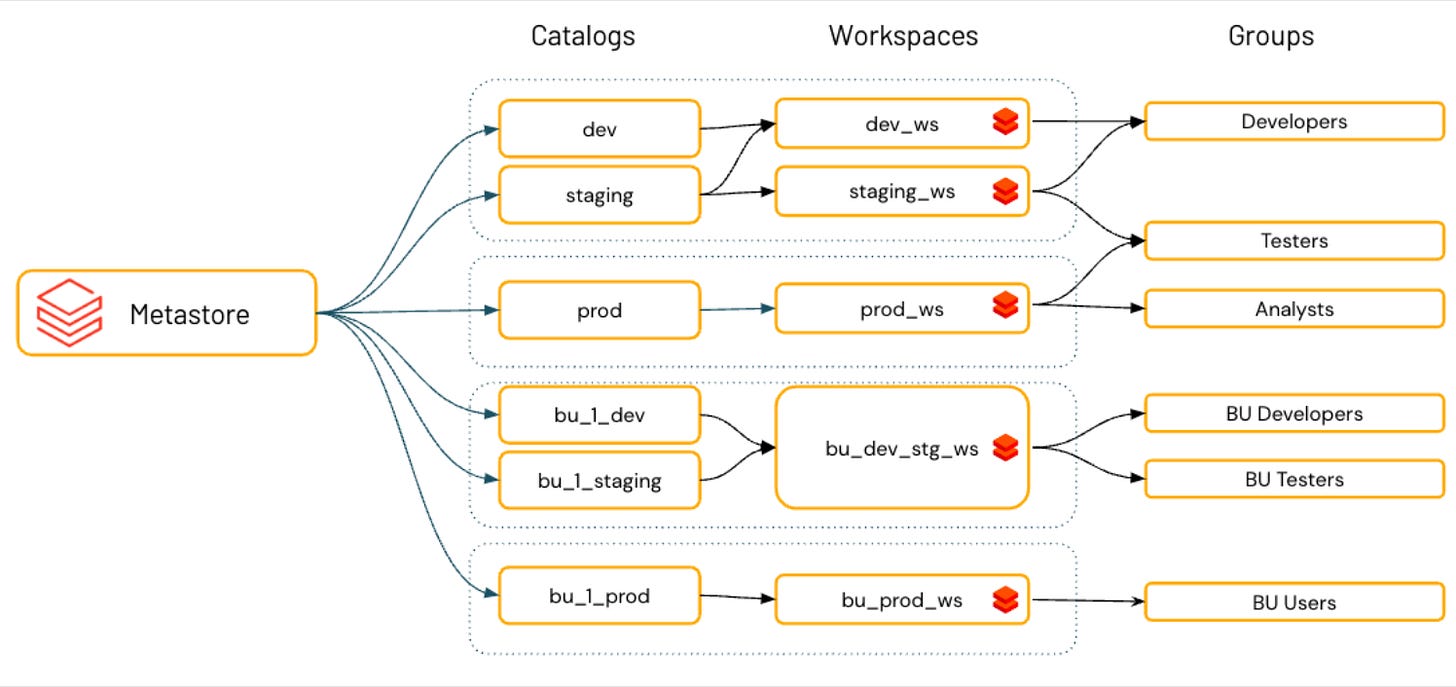

Below is a very simplified view of one possible setup for all the components above sitting inside an actual Unity Catalog implementation.

Of course, this is a made-up view, and you can change up the implementation and relationships of the components to meet your needs.

Note the relationships between some of the concepts we listed above.
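The relationship at the heart of that view is containment: Catalogs hold Schemas, and Schemas hold tables, and Unity Catalog addresses a table by its three-level name, `catalog.schema.table`. Below is a minimal Python sketch of that nesting, purely as a mental model and not a Databricks API; the `production` catalog and `sales` schema are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Schema:
    name: str
    tables: set[str] = field(default_factory=set)  # table names inside this schema


@dataclass
class Catalog:
    name: str
    schemas: dict[str, Schema] = field(default_factory=dict)

    def add_table(self, schema_name: str, table_name: str) -> str:
        """Create the schema if needed, add the table, and return its three-level name."""
        schema = self.schemas.setdefault(schema_name, Schema(schema_name))
        schema.tables.add(table_name)
        return f"{self.name}.{schema_name}.{table_name}"

    def full_names(self) -> list[str]:
        """Every table in this catalog, as sorted catalog.schema.table names."""
        return sorted(
            f"{self.name}.{schema.name}.{table}"
            for schema in self.schemas.values()
            for table in schema.tables
        )


# Hypothetical production catalog with a single "sales" schema
prod = Catalog("production")
orders = prod.add_table("sales", "orders")
prod.add_table("sales", "customers")
print(orders)             # production.sales.orders
print(prod.full_names())  # ['production.sales.customers', 'production.sales.orders']
```

Workspaces, Groups, and permissions then sit around this tree and decide who can see and touch which branch of it.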

What does that all mean? It means you will actually have to spend an inordinate amount of time UPFRONT laying out your desired system and reviewing each component and piece with stakeholders and others to ensure you’re going down the correct path.

Do you have enough Workspaces?

Who should be in what Workspaces?

How many Catalogs do you need?

What is contained in those catalogs?

What kind of Groups do you need to set up?

Who’s in those groups?

What permissions do certain Workspaces or Groups have?

Do you have service accounts?

What do the service accounts use and need access to do?

Who can do what or see what?

It’s critical to answer these questions up front and design a system that works for your company and use case!
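One way to force those answers out of people’s heads is to write the design down as data and sanity-check it before touching Databricks at all. The sketch below is plain Python, not a Databricks tool; every workspace, catalog, group, and member name is hypothetical, and the checks are just examples drawn from the questions in the list above.

```python
# Hypothetical design plan answering the questions above; all names are illustrative.
PLAN = {
    # Which catalogs each Workspace can reach
    "workspaces": {"eng-ws": ["dev", "prod"], "analytics-ws": ["prod"]},
    # Who is in each Group (users and service accounts alike)
    "groups": {"engineering": ["alice", "etl-service"], "analysts": ["bob"]},
    # Who can do what: (group, catalog, privilege)
    "grants": [
        ("engineering", "dev", "ALL PRIVILEGES"),
        ("engineering", "prod", "USE CATALOG"),
        ("analysts", "prod", "USE CATALOG"),
        ("marketing", "prod", "USE CATALOG"),  # deliberately refers to an undefined group
    ],
}


def find_plan_problems(plan: dict) -> list[str]:
    """Return human-readable gaps in a permissions design plan."""
    problems = []
    reachable = {c for catalogs in plan["workspaces"].values() for c in catalogs}
    for group, catalog, privilege in plan["grants"]:
        if group not in plan["groups"]:
            problems.append(f"{privilege} on {catalog} is granted to undefined group {group!r}")
        if catalog not in reachable:
            problems.append(f"catalog {catalog!r} is not reachable from any workspace")
    for group, members in plan["groups"].items():
        if not members:
            problems.append(f"group {group!r} has no members")
    return problems


problems = find_plan_problems(PLAN)
for problem in problems:
    print(problem)  # USE CATALOG on prod is granted to undefined group 'marketing'
```

The point is less the code than the habit: if you can’t fill in a plan like this, you aren’t ready to start migrating.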

Reviewing each component.

It would probably help at this point to connect the dots and give a brief “academic” description of the major components we showed you above.

Workspace

“A workspace is an environment for accessing all of your Databricks assets. A workspace organizes objects (notebooks, libraries, dashboards, and experiments) into folders and provides access to data objects and computational resources.”

In other words, you “log in” to a given Workspace and see whatever that Workspace has access to.

Catalog

“A catalog contains schemas (databases)…”

You can … “limit catalog access to specific workspaces…”
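By default, Databricks makes a catalog available to every workspace attached to the same metastore; binding the catalog to specific workspaces narrows that down. The toy model below (plain Python, hypothetical workspace and catalog names) captures only that visibility rule. It says nothing about GRANTs, which are still checked separately.

```python
from typing import Optional

# Hypothetical bindings: None = open to every workspace on the metastore,
# a set = bound to only those workspaces.
CATALOG_BINDINGS: dict[str, Optional[set[str]]] = {
    "dev": {"eng-ws"},
    "prod": {"eng-ws", "analytics-ws"},
    "sandbox": None,
}


def visible_catalogs(workspace: str, bindings: dict[str, Optional[set[str]]]) -> list[str]:
    """Catalogs reachable from `workspace` before any GRANTs are evaluated."""
    return sorted(
        name
        for name, bound_to in bindings.items()
        if bound_to is None or workspace in bound_to
    )


analytics_view = visible_catalogs("analytics-ws", CATALOG_BINDINGS)
print(analytics_view)  # ['prod', 'sandbox']
```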

Schemas

Main holding spot for tables. “Schemas (databases), are groupings of data (tables and views), non-tabular data (volumes), functions, and ML models.”

Read more here. (You can also control permissions at this level.)

Objects (like tables)

The things that actually live inside schemas: tables, views, volumes, functions, and ML models.

Groups

Groups of Users and other accounts.
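Permissions granted to a Group apply to its members, and Databricks account-level groups can themselves contain other groups, so it helps to know everything a given user belongs to. A minimal sketch, assuming a hypothetical membership map with users, one service account, and one nested group:

```python
# Hypothetical membership map: group -> direct members (users, service accounts, or groups)
MEMBERS = {
    "data-platform": {"engineering", "etl-service"},
    "engineering": {"alice", "carol"},
    "analysts": {"bob"},
}


def groups_of(principal: str, members: dict[str, set[str]]) -> set[str]:
    """Every group `principal` belongs to, directly or through nested groups."""
    found: set[str] = set()
    to_visit = [principal]
    while to_visit:
        current = to_visit.pop()
        for group, direct_members in members.items():
            if current in direct_members and group not in found:
                found.add(group)
                to_visit.append(group)  # the group may itself sit inside another group
    return found


alice_groups = sorted(groups_of("alice", MEMBERS))
print(alice_groups)  # ['data-platform', 'engineering']
```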

Users

The lowest common denominator: individual people, who can belong to one or more Groups.

Service Accounts

Called service principals in Databricks; these non-human identities typically run things like automated Jobs.

Permissions (GRANT … who can do what)

The actual granular level of controlling permissions.

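For example, the general form, followed by a concrete grant:

-- General form: which principal can do what (privilege) on which securable object
GRANT privilege_type ON securable_object TO principal;

-- Let the engineering_team group use the production catalog
GRANT USE CATALOG ON CATALOG production TO engineering_team;

What it boils down to.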

What it all comes down to is permissions and object control. You really have to decide what your system will look like … and it starts with high-level decisions about Catalogs and Workspaces, and how they will act as the overarching controls on how people access the system and its objects.

Do you want to know the bad part?

We haven’t even really peeled back the layers of Unity Catalog to talk about all the Objects inside that can have, and will need, permissions applied to them.

Each one of those objects can have permissions applied to it, all in the context of your …

Workspaces

Catalogs

Groups
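Here is why the layering matters. In Unity Catalog, reading a table requires USE CATALOG on its catalog, USE SCHEMA on its schema, and SELECT on the table, and privileges granted on a catalog or schema are inherited by the objects inside it. The Python sketch below models only that rule; it deliberately ignores ownership, admins, ALL PRIVILEGES, and everything else, and the grants are hypothetical.

```python
# Hypothetical grants: (principal, securable) -> privileges.
# Securables are named "catalog", "catalog.schema", or "catalog.schema.table".
GRANTS = {
    ("analysts", "prod"): {"USE CATALOG"},
    ("analysts", "prod.sales"): {"USE SCHEMA", "SELECT"},
    ("engineering", "dev"): {"USE CATALOG", "USE SCHEMA", "SELECT", "MODIFY"},
}


def privileges_on(principals: set[str], securable: str, grants: dict) -> set[str]:
    """Privileges on `securable`, including ones inherited from its parent objects."""
    parts = securable.split(".")
    held: set[str] = set()
    for depth in range(1, len(parts) + 1):
        ancestor = ".".join(parts[:depth])  # "prod", then "prod.sales", then the table
        for principal in principals:
            held |= grants.get((principal, ancestor), set())
    return held


def can_select(principals: set[str], table: str, grants: dict) -> bool:
    """Simplified read check: USE CATALOG + USE SCHEMA + SELECT, each possibly inherited."""
    catalog, schema, _ = table.split(".")
    return (
        "USE CATALOG" in privileges_on(principals, catalog, grants)
        and "USE SCHEMA" in privileges_on(principals, f"{catalog}.{schema}", grants)
        and "SELECT" in privileges_on(principals, table, grants)
    )


print(can_select({"analysts"}, "prod.sales.orders", GRANTS))    # True: SELECT inherited from prod.sales
print(can_select({"analysts"}, "prod.finance.ledger", GRANTS))  # False: no USE SCHEMA on prod.finance
print(can_select({"engineering"}, "dev.raw.events", GRANTS))    # True: everything inherited from dev
```

The `principals` argument is meant to be a user plus every group they belong to, which is exactly why the Workspace, Catalog, and Group decisions have to be made together.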

How should I set up my Unity Catalog?

This is a good question, and one I struggled with myself in the beginning. Although Databricks lists some Best Practices, they are mostly vague and general.

Below are a few screenshots from Databricks that give a sense of what they generally recommend. Again, they don’t offer much in the way of exact setups, as those vary greatly based on each company’s needs.

Here are some ideas you will find referenced in the Databricks documentation, and that I’ve implemented myself in Unity Catalog.

Catalogs are a great way to keep the separation between environments (like Dev and Prod).

You can tie a different physical storage location to each catalog (for example, Dev and Prod use different S3 buckets).

Workspaces are closely related to Catalogs and are the “View” of what a Group (or Groups) of people can see and access.

Groups are the best way to think about and manage the permissions of actual users.
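The first two ideas above (a Catalog per environment, each with its own storage location) are easy to script. A minimal sketch, assuming hypothetical S3 bucket paths: it only renders Databricks SQL `CREATE CATALOG ... MANAGED LOCATION` statements as strings. Actually running them also requires an external location covering each path that you have rights on, which isn’t shown here.

```python
# Hypothetical environments and bucket paths; substitute your own.
ENVIRONMENTS = {
    "dev": "s3://example-company-dev/unity-catalog",
    "prod": "s3://example-company-prod/unity-catalog",
}


def create_catalog_sql(name: str, location: str) -> str:
    """Render a CREATE CATALOG statement that pins the catalog's managed storage."""
    # Simple guards so a typo does not turn into malformed SQL
    if not name.isidentifier():
        raise ValueError(f"unexpected catalog name: {name!r}")
    if "'" in location:
        raise ValueError(f"location must not contain quotes: {location!r}")
    return f"CREATE CATALOG IF NOT EXISTS {name} MANAGED LOCATION '{location}';"


statements = [create_catalog_sql(env, path) for env, path in ENVIRONMENTS.items()]
for statement in statements:
    print(statement)
```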

Again, so much of the initial setup and design of a Unity Catalog is totally dependent on the size and needs of the company. But you can generally assume the following:

You will probably have different Catalogs for Development, Production, etc.

You will probably have different Workspaces to control, at the highest level, which Group(s) of people can see and access which objects.

You will probably have Groups like Engineering with elevated privileges on schemas, and User Groups with very limited (SELECT-only) access to data.
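That last assumption, an Engineering group with elevated schema privileges and a user group with read-only access, boils down to a handful of GRANT statements. The sketch below renders them from a small role plan; the group names, the `prod.sales` schema, and the exact privilege sets are hypothetical, so treat it as a starting point for the design conversation rather than a recommended policy. Principals are wrapped in backticks because Databricks SQL needs that for names containing characters such as `-`, `@`, or spaces.

```python
# Hypothetical role plan: group -> privileges at catalog level and schema level
ROLE_PLAN = {
    "engineering": {
        "catalog": ["USE CATALOG"],
        "schema": ["USE SCHEMA", "SELECT", "MODIFY", "CREATE TABLE"],
    },
    "business-users": {
        "catalog": ["USE CATALOG"],
        "schema": ["USE SCHEMA", "SELECT"],
    },
}


def grant_sql(privileges: list[str], securable_type: str, securable: str, principal: str) -> str:
    """Render one GRANT; backticks allow principals containing '-', '@', or spaces."""
    return f"GRANT {', '.join(privileges)} ON {securable_type} {securable} TO `{principal}`;"


def grants_for(catalog: str, schema: str, plan: dict) -> list[str]:
    """Catalog-level and schema-level GRANTs for every group in the plan."""
    statements = []
    for group, levels in plan.items():
        statements.append(grant_sql(levels["catalog"], "CATALOG", catalog, group))
        statements.append(grant_sql(levels["schema"], "SCHEMA", f"{catalog}.{schema}", group))
    return statements


grant_statements = grants_for("prod", "sales", ROLE_PLAN)
for statement in grant_statements:
    print(statement)
```

Granting SELECT at the schema level means every current and future table in `prod.sales` becomes readable by that group, which is usually what you want for a read-only user group, but it is worth deciding on deliberately.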

While this might seem “straightforward” on the surface … remember that we are giving the 10,000-foot view. Most real-world implementations include many different types of data, schemas, storage locations, groups, needs, Cluster permissions, etc.

It will absolutely get complicated fast, and you don’t want to just “dive in” and figure it out. Spend the time to “map” and “draw” out the high-level structure first, as we’ve done above a few times, before going down the road of implementation in Unity Catalog, because once made, these decisions can be hard to back out of.

Thanks For Reading!

Join My Data Engineering And Data Science Discord

If you’re looking to talk more about data engineering, data science, breaking into your first job, and finding other like-minded data specialists, then you should join the Seattle Data Guy Discord! We are close to passing 6000 members!

Join My Data Consultants Community

If you’re a data consultant or considering becoming one then you should join the Technical Freelancer Community! I recently opened up a few sections to non-paying members so you can learn more about how to land clients, different types of projects you can run, and more!

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

Tools to reduce tech debt in your project

By Bhavana

Once upon a time, our team inherited a horrible mess of a legacy project - let’s call it Project Mammoth. It had all the elements of a project with a tech debt problem - spaghetti code, no automated testing, tightly coupled components that were leaking abstractions like sieves, and the list goes on. There was a plan in place to replace the application, but Mammoth needed to stay alive and functional for at least a couple of years until its replacement got built and gradually rolled out.

Why Low-Code/No-Code Tools Accelerate Risk

Creating systems and automated workflows has often been viewed as an engineering team’s responsibility. However, I continue to run into companies that have more and more teams creating integrations and workflows with low code and no code tools. Some may act as if Low-Code/No-Code is some new invention, but it's far from it. We’ve had solutions like Excel…

End Of Day 127

Thanks for checking out our community. We put out 3-4 Newsletters a week discussing data, tech, and start-ups.
