Learning data modeling from the basic star schema examples you find on the internet is like learning data science using the Iris data set.

It works great as a toy example.

But it doesn’t match real life at all.

Data modeling in real life requires you to fully understand the data sources and your business use cases, which can be difficult to replicate as each business might have its data sources set up differently.

For example, one company might have a simple hierarchy table that can be pulled from Netsuite or its internal application. But then another one might have it strewn across four systems, with data gaps, all of which need to be fixed to report accurately. So you’ll never really understand the challenges you will face when data modeling until you have to do it. Only then will you see how you should model your data and the various trade-offs you’ll have to make.

In this article, I wanted to discuss those challenges and help future data engineers and architects face them head-on!

So, let’s dive in.

What is Data Modeling?

There are plenty of definitions of data modeling out there. For example:

Data modeling is the process of creating a simplified diagram of a software system and the data elements it contains, using text and symbols to represent the data and how it flows. Data models provide a blueprint for designing a new database or reengineering a legacy application. – TechTarget

Another great example came from my discussion with Joe Reis (co-author of Fundamentals Of Data Engineering), where he defined data modeling as:

Organizing and standardizing data to facilitate believable and useful information and knowledge for humans and machines.

Now, I’d say Joe’s focus is more on the overall goal, whereas TechTarget describes the process. However, both together should provide a good picture of what data modeling is.

Challenges You Might Face in Data Modeling

Data modeling is an evolving practice with diverse challenges.

Here are some of the most common challenges in current data modeling projects.

Companies Use Diverse Data Modeling Techniques

In the past, most companies used similar data modeling strategies, often relying on a few tested models such as the Star or Snowflake schemas for analytics. There were, of course, dogmatic, almost religious debates over whether one should use Kimball (bottom-up) or Inmon (top-down). But overall, data modeling initially had to satisfy both the end-user and the limitations of physical servers. Thus, many data models were forced to adopt specific techniques due to a lack of either storage space or compute.

Today, you encounter a wide range of data models, each functioning differently.

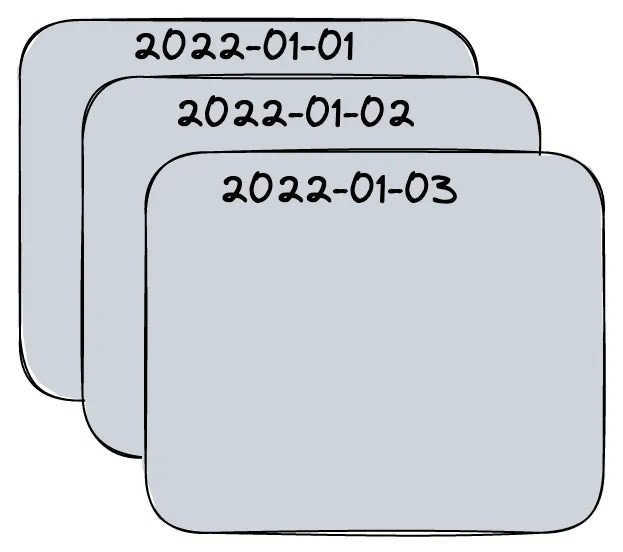

Some are dictated by the systems they are built on top of. For example, when I worked at Facebook, we relied on an HDFS-like storage layer, which didn’t allow us to run updates on our tables. Thus, in order to track changes in data, we’d just store each date as a snapshot. In other words, to see what occurred on a specific date, you had to filter by said date.
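To make the snapshot idea concrete, here is a minimal sketch in plain Python. It is not how any particular company's pipeline works; the `snapshots` list, the `ds` date column, and the `user_id` and `plan` fields are all assumptions made up for illustration. The point is only that rows are appended, never updated, and history is recovered by filtering on the snapshot date.

```python
from datetime import date

# Hypothetical append-only store: every load writes a full copy of the
# table tagged with its snapshot date ("ds"). Existing rows are never updated.
snapshots = []

def load_snapshot(snapshot_date, rows):
    """Append a full copy of the source table for one date."""
    for row in rows:
        snapshots.append({"ds": snapshot_date, **row})

def as_of(snapshot_date):
    """Return the table as it looked on a given date by filtering on ds."""
    return [r for r in snapshots if r["ds"] == snapshot_date]

def changed_between(earlier, later, key="user_id"):
    """Compare two snapshots and return the keys whose rows differ."""
    def strip_ds(rows):
        return {r[key]: {k: v for k, v in r.items() if k != "ds"} for r in rows}
    before, after = strip_ds(as_of(earlier)), strip_ds(as_of(later))
    return sorted(k for k in after if before.get(k) != after[k])

# Two daily loads of the same (made-up) table.
load_snapshot(date(2023, 8, 1), [{"user_id": 1, "plan": "free"},
                                 {"user_id": 2, "plan": "pro"}])
load_snapshot(date(2023, 8, 2), [{"user_id": 1, "plan": "pro"},
                                 {"user_id": 2, "plan": "pro"}])

changed = changed_between(date(2023, 8, 1), date(2023, 8, 2))
```

The trade-off is storage: every date holds a full copy of the table, in exchange for never needing an update statement.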

There are also discussions about One Big Table (OBT) vs. Kimball (although I often see both in use at the same time). Perhaps a more humorous example of this is Query Driven Data Modeling.

But each of these has pros and cons, and they must be considered before saying one solution is best over another.
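As a rough illustration of how OBT and a Kimball-style star schema can coexist, the sketch below builds a tiny star schema in an in-memory SQLite database and then derives a pre-joined wide table from it. The table and column names (`dim_customer`, `fact_orders`, `obt_orders`) and the sample rows are assumptions invented for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Kimball-style star schema: one fact table, one dimension (made-up data).
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE fact_orders (order_id INTEGER PRIMARY KEY, customer_key INTEGER, amount REAL);
INSERT INTO dim_customer VALUES (1, 'Acme', 'US'), (2, 'Globex', 'EU');
INSERT INTO fact_orders VALUES (100, 1, 250.0), (101, 1, 100.0), (102, 2, 75.0);

-- One Big Table: the same facts with the dimension attributes pre-joined.
CREATE TABLE obt_orders AS
SELECT f.order_id, f.amount, c.name AS customer_name, c.region AS customer_region
FROM fact_orders f JOIN dim_customer c USING (customer_key);
""")

# The star schema needs a join at query time...
star_totals = conn.execute("""
    SELECT c.region, SUM(f.amount)
    FROM fact_orders f JOIN dim_customer c USING (customer_key)
    GROUP BY c.region ORDER BY c.region
""").fetchall()

# ...while the OBT answers the same question from a single table.
obt_totals = conn.execute("""
    SELECT customer_region, SUM(amount)
    FROM obt_orders
    GROUP BY customer_region ORDER BY customer_region
""").fetchall()
```

Both queries return the same totals. The difference is where the cost lands: the star schema keeps customer attributes in one place but joins on every query, while the wide table is simpler to query but must be rebuilt when a customer attribute changes.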

That’s part of the job of a data engineer. Not just to model the data, but to create a layer of data that is useful to their end-users (and as Joe Reis has put it, machines as well).

Integrating Data

Data comes from diverse sources, making integration a significant challenge. At first glance, integrating data may seem straightforward as you create diagrams that show relationships between entities like Orders, Customers, Products, and Stores. However, the challenge lies in the fact that data can have multiple sources.

Take Customers, for instance. While you might assume all customer data comes from the same source, modern sales processes rarely function that way. You may need to integrate data from platforms targeting customers in different geographic locations. Additionally, platforms used for American sales may have different data sources and formats from those used for European sales.

Omnichannel sales strategies further complicate data modeling.

A company might sell products on its e-commerce website, Amazon, and retail store sites. These sources could provide different types of data about buyers. Even if they collect the same data parameters, the formatting may not align perfectly. One system might place the customer’s order in the second field, while another might put the customer’s address there. Without reliable integration, these conflicting fields can cause problems within your dataset.
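One common way to handle misaligned fields is to declare each source's layout explicitly and map every record into a single canonical shape before anything downstream touches it. The sketch below assumes two hypothetical sources (`web_store` and `marketplace`) whose second and third fields are swapped; the source names, field names, and sample values are invented for illustration.

```python
# Hypothetical positional layouts: the same slot means different things
# depending on which system produced the record.
SOURCE_LAYOUTS = {
    "web_store": ["customer_id", "order_id", "address"],
    "marketplace": ["customer_id", "address", "order_id"],
}

# The single shape every downstream model can rely on.
CANONICAL_FIELDS = ("customer_id", "order_id", "address")

def to_canonical(source, record):
    """Map a positional record from a known source into the canonical shape."""
    layout = SOURCE_LAYOUTS[source]
    if len(record) != len(layout):
        # Fail loudly rather than silently shifting fields.
        raise ValueError(f"{source}: expected {len(layout)} fields, got {len(record)}")
    named = dict(zip(layout, record))
    return {"source": source, **{field: named[field] for field in CANONICAL_FIELDS}}

web_row = to_canonical("web_store", ["C-1", "O-7", "12 Main St"])
market_row = to_canonical("marketplace", ["C-1", "12 Main St", "O-9"])
```

After mapping, `order_id` and `address` mean the same thing in both rows, and each row still records which source it came from.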

It can also cause issues when it comes to reporting. This is why many data people joke about the question, “What is a customer?” The answer can differ by company and even by department.

Integrating Data Challenge Example

Another example I constantly use comes from my first role, where I was asked to integrate project management data with hourly tracking of contracts. The two data sets came from different sources. At first, when the data setup was explained to me, I assumed the problem would be simple.

But it wasn’t.

Both systems were supposed to have an ID that made it easy to join data sets. However, one of the systems allowed the ID to be a free text field. I don’t think I need to say more. Basically, there was no real way to join the data in its current state.
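For a sense of what a free-text ID does to a join, here is a hedged sketch. It is not what was done in the project described above; the `PRJ-0042` ID format, the field names, and the sample entries are all hypothetical. It normalizes whatever it can and, just as importantly, returns the rows it cannot match so they can go back to the business for cleanup.

```python
import re

def normalize_id(raw):
    """Best-effort cleanup of a free-text ID.

    Assumes (hypothetically) that real IDs look like PRJ-0042 and that the
    first run of digits in the free text is the project number.
    Returns None when no digits can be recovered.
    """
    match = re.search(r"\d+", raw or "")
    return f"PRJ-{int(match.group()):04d}" if match else None

def join_on_project(projects, time_entries):
    """Join time entries to projects; return (matched, unmatched)."""
    by_id = {p["project_id"]: p for p in projects}
    matched, unmatched = [], []
    for entry in time_entries:
        key = normalize_id(entry["project_ref"])
        if key in by_id:
            matched.append({**entry, "project_name": by_id[key]["name"]})
        else:
            unmatched.append(entry)  # needs a human or a process fix
    return matched, unmatched

projects = [{"project_id": "PRJ-0042", "name": "Warehouse migration"}]
time_entries = [
    {"project_ref": "prj 42", "hours": 6},
    {"project_ref": " PRJ-0042", "hours": 2},
    {"project_ref": "ask Dana", "hours": 3},
]

matched, unmatched = join_on_project(projects, time_entries)
```

A heuristic like this only recovers the easy cases. The entries left in `unmatched` are the reason the real fix usually involves changing how the ID is entered in the first place.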

In the end, it’s up to data teams and their business counterparts to integrate data not only from a technical perspective but also from a business process perspective.

Translating Human Requirements Into a Data Model

Data models do not exist in isolation; they serve a purpose for the company and its users. However, translating human requirements into a data model presents a significant challenge.

This aspect of data modeling is especially difficult because it requires effectively communicating with people to understand their requirements and then translating them into a flexible data model that can evolve as needs change.

The challenge intensifies when company leadership pushes for a specific concept. For example, they may request consolidating all data into one giant table, which may not align with your data engineering knowledge. In such cases, you must find a way to appease leadership or convince them to use a model that better serves their needs. Neither option is easy to accomplish.

Getting Better at Translating Human Requirements Into Data Models

Translating human requirements into data models requires experience. Ideally, you can find a job that allows you to build on your basic data science skills. Building a good model involves asking the right questions and seeking answers. For example, “Must this model include a slowly changing dimension (SCD), or is it included out of habit or something an industry leader suggested?”
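If the SCD question is unfamiliar, the sketch below shows one common variant, usually called Type 2, where a change closes the current row and opens a new one so history is preserved. It is a simplified illustration in plain Python; the `valid_from` and `valid_to` column names, the `C-1` key, and the `region` attribute are assumptions for this example.

```python
from datetime import date

def apply_scd2(history, key, new_attrs, effective):
    """Type 2 update: close the open row if attributes changed, then add a new one."""
    current = next(
        (r for r in history if r["key"] == key and r["valid_to"] is None), None
    )
    if current is not None:
        if current["attrs"] == new_attrs:
            return history  # nothing changed, keep the open row as-is
        current["valid_to"] = effective  # close out the old version
    history.append(
        {"key": key, "attrs": dict(new_attrs), "valid_from": effective, "valid_to": None}
    )
    return history

def attrs_as_of(history, key, when):
    """Look up the attributes that were valid for a key on a given date."""
    for r in history:
        if r["key"] == key and r["valid_from"] <= when and (
            r["valid_to"] is None or when < r["valid_to"]
        ):
            return r["attrs"]
    return None

history = []
apply_scd2(history, "C-1", {"region": "US"}, date(2023, 1, 1))
apply_scd2(history, "C-1", {"region": "EU"}, date(2023, 6, 1))
apply_scd2(history, "C-1", {"region": "EU"}, date(2023, 7, 1))  # no change, no new row
```

Whether this extra bookkeeping is worth it depends on the requirement: if nobody needs to report on what a customer's region was last quarter, overwriting the value is simpler.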

Seek opportunities to work with highly experienced data professionals who can provide deeper insights into the questions you should ask before and during the data modeling process. The more you practice and receive feedback, the easier it will become. Still, expect to invest significant time in developing this skill; it only improves with practice.

Modeling Data Now and Into the Future

Change is inevitable, so anyone interested in data science must continuously learn new skills. What works well today may fall short tomorrow. Therefore, combine technical knowledge with creativity to find solutions to the data modeling challenges.

However, this is no easy feat.

Many companies rely on data consultants to help them develop models that work well in the present while providing flexibility for future needs. An experienced data modeling consultant can handle the most challenging aspects, making it easier for your team and company to thrive.

If you need help with your data model or data strategy, then feel free to set up some time with me and my team!

Thanks for reading!

Low-Code Data Engineering On Databricks For Dummies

Modern data architectures like the lakehouse still pose challenges in Spark and SQL coding, data quality, and complex data transformations.

However, there's a solution. Prophecy.io’s latest ebook, "Low-Code Data Engineering on Databricks For Dummies," offers an insight into the potential of low-code data engineering. Learn how both technical and business users can efficiently create top-notch data products that drive ROI.

How the lakehouse architecture has transformed the modern data stack

Why Spark and SQL expertise does not have to be a blocker to data engineering success

How Prophecy’s visual, low-code data solution democratizes data engineering

Real-world use cases where Prophecy has unlocked the potential of the lakehouse

And much, much more

Thank you Prophecy.io for sponsoring this week’s newsletter!

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and companies’ tech blogs, and wanted to share some of my favorites!

Json -> Parquet. Python vs. Rust.

We know all good developers love a battle. A fight to the death. We love our files. We love our languages, and even when we know what the answer will be, it’s still fun to do it.

While this topic of JSON → Parquet may seem like a strange one, in reality, it was one of the first projects I worked on as a newly minted Data Engineer. Converting millions of JSON files stored in s3 to parquet files for analytics, so maybe it isn’t so far-fetched after all.

When dealing with massive datasets, the storage format can significantly impact performance. While JSON is an excellent medium for data interchange, Parquet, a columnar storage format, is more suitable for big data analytics due to its efficiency and speed.

Approaching Go-to-Market as a Data Engineer

Hubspot defines a GTM strategy as “a step-by-step plan designed to bring a new product to market and drive demand. It helps identify a target audience, outline marketing and sales strategies, and align key stakeholders. While each product and market will be different, a well-crafted GTM strategy should identify a market problem and position the product as a solution.” While most startups start from scratch, Chad and I have a unique set of advantages:

We collectively have an audience of over 100K followers.

We have years of data tracking our content engagement and conversions.

Chad manually kept a spreadsheet of every meeting he had and who converted to design partners for the product.

We have the technical skills to analyze this data and build data products with it.

How To Set Up Your Data Analytics Team For Success

Success in the data world hinges on team setup.

I’ve delved into onboarding and standards in previous articles, but never into the structure of data teams. Typically, there are three configurations: Centralized, Decentralized, and Federated. Most companies I’ve seen use a mix of these.

While the newest tech breakthroughs grab headlines, team organization is the unsung hero. A well-structured team boosts business impact, streamlines communication, and enhances information sharing among internal data units.

So, let’s dive into these setups and their real-world implications.

End Of Day 95

Thanks for checking out our community. We put out 3-4 Newsletters a week discussing data, tech, and start-ups.