How To Reduce Your Data Team's Costs

It’s easy to let data team costs run a little wild. Over the last decade, many companies have charged ahead in pursuit of data insights while costs took a back seat. This has led many companies to spend hundreds of thousands, if not millions, of dollars on data initiatives, even when those initiatives fail.

Over the past year, our team has worked with several companies to get a better grip on their biggest cost pain points. These costs are often driven by complex systems, inefficient data workflows, and a lack of clear processes.

All of these add up to a lot of wasted time and resources.

So where should your team look to reduce its costs, especially in a market that is increasingly focused on healthier balance sheets?

Let’s start with infrastructure.

Quick Pause: We need your help! Our team is putting together a survey to better understand the state of data infrastructure, and we will share the results in this newsletter. If you work in data (whether you're an analyst or a VP of engineering), we would love to hear from you!

Simplify Data Infrastructure

The modern data stack has provided companies of all sizes with easy access to their data.

But along with the term came heavy marketing from start-ups trying to justify their valuations and grow ARR. Some of these tools have helped simplify processes, while others have added more complications.

All of these licenses and consumption-based pricing models start to add up, not only on the bill but also in the human hours required to manage so many different solutions. Every solution unavoidably adds some overhead for the team that owns it.

To some extent, this is a natural part of building anything. In V1, your data infrastructure might be more complex than it needs to be because you’re just trying to get to the point where you can answer questions. So having complex data systems at first isn’t the end of the world.

However, taking a Build and Simplify approach can reduce costs in terms of both licensing and overhead and maintenance. Over the long term, it pays for teams to continually look for ways to simplify their overall systems. By simplifying your data infrastructure (when needed), your team can reduce both its current overhead and its future maintenance costs.

Overall, most data setups evolve iteratively, both in their infrastructure and in their general data workflows.

Improve Data Workflows

Snowflake has given many companies access, for as little as five figures, to a data platform that would usually require a six- to seven-figure contract. However, this pay-for-consumption model can quickly start to bite as teams build more and more complex workflows.

This is especially true if your tables are configured incorrectly or you’re constantly running live dashboards that could instead rely on pre-aggregated data. The problem is not unique to Snowflake: costs can skyrocket in most cloud data warehouses when they are poorly set up.
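To make the pre-aggregation idea concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for a cloud warehouse. The `events` and `daily_revenue` tables and their columns are hypothetical. The point is that the aggregation runs once (for example, in a scheduled job), and the dashboard then reads a small summary table instead of re-scanning raw events on every refresh.

```python
import sqlite3

# sqlite3 stands in for a cloud warehouse; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_date TEXT, user_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("2022-05-01", 1, 10.0),
        ("2022-05-01", 2, 5.0),
        ("2022-05-02", 1, 7.5),
        ("2022-05-02", 3, 2.5),
        ("2022-05-02", 3, 1.0),
    ],
)

# Build the rollup once (e.g. in a scheduled job) rather than on every dashboard load.
conn.execute(
    """
    CREATE TABLE daily_revenue AS
    SELECT event_date,
           COUNT(*)                AS event_count,
           COUNT(DISTINCT user_id) AS active_users,
           SUM(amount)             AS revenue
    FROM events
    GROUP BY event_date
    """
)

# The dashboard now reads one small row per day instead of scanning raw events.
rows = conn.execute(
    "SELECT event_date, event_count, active_users, revenue "
    "FROM daily_revenue ORDER BY event_date"
).fetchall()
for row in rows:
    print(row)
```

In a real warehouse, the same pattern might be a scheduled transformation or a materialized view; the trade-off is that the dashboard is only as fresh as the last rollup run.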

In the era of the DBA, we cared about the number of bytes in a column. Now we need to care about the gigabytes, terabytes, and petabytes being processed, because they feed directly into inflated cloud bills.
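A back-of-the-envelope calculation shows why bytes processed matter. The sketch below assumes a scan-priced model, where you pay per terabyte read; the price constant is a placeholder rather than any vendor's actual rate, and the dashboard sizes and refresh frequency are hypothetical. Warehouses billed on compute time, such as Snowflake's credit model, work differently, although scanning less data generally means less compute time too.

```python
# Placeholder rate, NOT a real vendor price: substitute your provider's current pricing.
HYPOTHETICAL_PRICE_PER_TB = 5.00  # dollars per terabyte scanned
GB_PER_TB = 1000  # decimal units, for simplicity


def monthly_scan_cost(gb_per_run: float, runs_per_day: int,
                      price_per_tb: float = HYPOTHETICAL_PRICE_PER_TB,
                      days: int = 30) -> float:
    """Estimate the monthly cost of a query that scans gb_per_run on every run."""
    tb_per_month = gb_per_run * runs_per_day * days / GB_PER_TB
    return tb_per_month * price_per_tb


# Hypothetical dashboard that refreshes every 15 minutes (96 times a day).
live_cost = monthly_scan_cost(gb_per_run=200, runs_per_day=96)  # scans a raw table
rollup_cost = monthly_scan_cost(gb_per_run=2, runs_per_day=96)  # reads a pre-aggregated table

print(f"Live dashboard: ${live_cost:,.2f}/month")
print(f"Pre-aggregated: ${rollup_cost:,.2f}/month")
```

This ignores the cost of building the rollup itself, but that is typically one scan per day rather than one per refresh, so the comparison still holds in spirit.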

I have personally helped cut multiple companies' data warehouse costs by improving their underlying data structures, and I have talked with others who have done the same.

To be clear, it’s not just me. Multiple consultants and newly minted tech leads have told me about similar situations (some of which led to promotions), often achieved simply by improving a few minor details in a company’s overall data workflow.

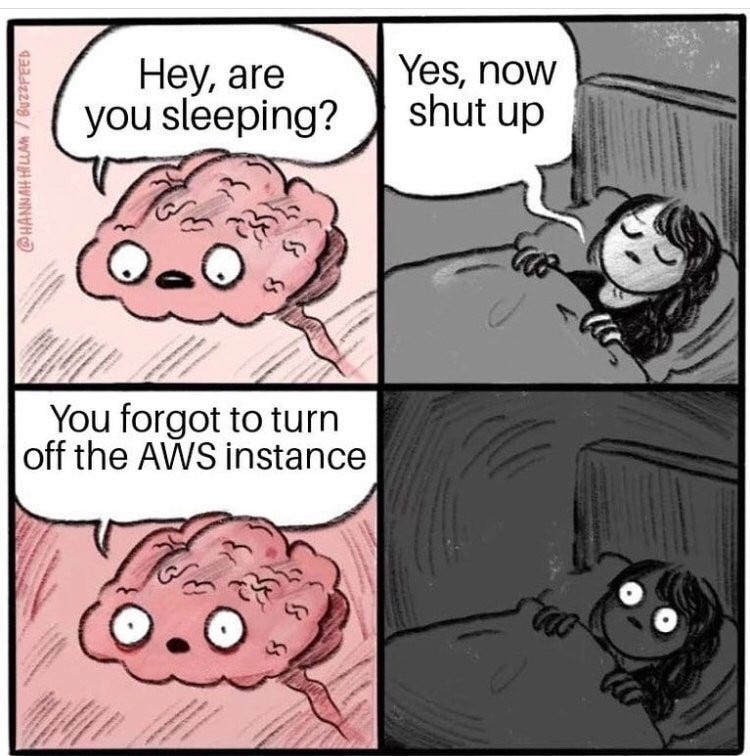

Make Sure You Don’t Have Random Services Running

Another common issue many teams run into is leaving services running in cloud providers, such as Cloud Composer on GCP, that run up costs even when they have no jobs in them.

Just recently, I found a GCP instance that was costing a client $2,000 a month because of how it was configured, even though zero jobs were running on it. The client was spending $2,000 a month for zero value.

Forgotten services can be running anywhere. It could be an EC2 instance that no one is using or Airflow jobs running queries for reports that no one looks at. These services add up quickly and can cost companies an inordinate amount.

An occasional audit can help clear out unused services and infrastructure while reducing future tech debt. Truthfully, I am always surprised by how often services keep running behind the scenes without anyone asking questions.
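An audit does not need to be elaborate. As a minimal sketch, assuming you can export an inventory of resources with their monthly cost and last recorded activity (from a billing export or scheduler logs, for example), the hypothetical `find_idle` function below flags anything that still costs money but has not been used within a cutoff window. The `Resource` fields and the sample inventory are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Resource:
    # Hypothetical inventory record; real data would come from billing or usage exports.
    name: str
    kind: str
    monthly_cost: float
    last_used: Optional[date]  # None means no recorded activity at all


def find_idle(resources: List[Resource], today: date, idle_days: int = 30) -> List[Resource]:
    """Return resources that still cost money but were not used within idle_days,
    most expensive first."""
    cutoff = today - timedelta(days=idle_days)
    idle = [
        r for r in resources
        if r.monthly_cost > 0 and (r.last_used is None or r.last_used < cutoff)
    ]
    return sorted(idle, key=lambda r: r.monthly_cost, reverse=True)


# Made-up sample inventory.
inventory = [
    Resource("composer-env-prod", "Cloud Composer", 2000.0, None),
    Resource("etl-worker-1", "EC2", 150.0, date(2022, 5, 20)),
    Resource("legacy-report-dag", "Airflow DAG", 40.0, date(2022, 1, 3)),
]

for r in find_idle(inventory, today=date(2022, 5, 31)):
    print(f"{r.name} ({r.kind}): ${r.monthly_cost:,.0f}/month, last used {r.last_used or 'never'}")
```

The same filter works for dashboards: treat the date of the last view as `last_used`, and anything nobody has opened in months becomes a candidate for retirement.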

Set Up Clear Processes For People

Less obvious costs revolve around onboarding, maintenance, and development processes. These costs aren’t always easy to calculate because there is no AWS bill or annual license renewal fee attached to them.

Instead, these costs are often hidden by time, business cycles, and employee attrition. For example, a major reason data strategies fail is the key person problem: one key person sets up the baseline data infrastructure for a company and then leaves. Perhaps they left solid documentation, perhaps they didn’t.

Even with solid documentation, some time is unavoidably lost while the new hire gets their bearings: what is running what, and which data is being processed where.

This lack of clear processes and documentation adds up to future costs that, again, aren’t always easy to track. Clear frameworks that a future developer can easily fit into, along with solid documentation, often provide an easier path forward.

Truthfully, the key person problem is probably the most common reason I get called into projects.

How Is Your Company Looking To Reduce Costs In 2022?

Data remains an important piece of many companies' strategies. It helps companies make better decisions and provides insight into what exactly is going on under the hood of their operations.

But it’s very easy for costs to go wild with all the various solutions, data roles, and left-behind services. So take time every quarter or so to make sure that the budget allocated to cloud costs, Snowflake consumption, and other solutions makes sense.

Find the dashboards no one looks at and the pipelines that no longer provide value, and consider getting rid of them. And of course, make sure your processes are scalable and easy to hand off.

All in all, good luck out there, data peeps.

Video Of The Week: How I Got My First Data Engineering Job

Getting your first job is hard.

Especially in a field like data engineering, where it can feel like every company wants 5 years of experience for entry-level positions.

So the question becomes: how do you get your first data engineering job? In this video, I discuss exactly that!

What would you do with a $20k payday?

Join the Starburst Space Quest League (SQL), a gamified sweepstakes for data professionals, for a chance to win amazing prizes. SQL runs four sweepstakes over the course of the year. Once you enter, you’ll have access to a number of quests to test your data skills. The more quests you participate in, the more stars (tokens) you earn, and the higher your chance of winning the current grand prize of $20,000! Do you think you have what it takes? The winner will be announced on July 1st, 2022. Check out the Starburst website to learn more and enter.

A very special thank you to Starburst for sponsoring the newsletter this week.

Join My Data Engineering And Data Science Discord

Recently, my YouTube channel grew from 1.8k to 30k subscribers, and my email newsletter has grown from 2k to well over 8k.

Hopefully we will see even more growth this year. In the meantime, I have finally put together a Discord server. For now, this is mostly a soft opening.

I want to see what people end up using this server for. How it is used will, in turn, shape which channels, categories, and support we create in the future.

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

Modernity != Value

Adopting a particular tool doesn’t mean it’s used in the intended way. You can use Census/Hightouch to write raw data into a Google Sheet or to some other Postgres database for stakeholders to manipulate themselves (read: not a great approach). Having multiple dbt repos across a 1000+ person organization will likely result in duplicate models even though there are 3 separate teams of 10 analytics engineers being paid to model data. Oh and metrics—just because a metrics layer exists doesn’t mean the metric definitions are agreed upon and communicated across the entire company.

My Journey from Data Analyst to Senior Data Engineer

My newsfeed these days is chock-full of “how to break into Data Engineering”. It’s made me a bit nostalgic, to say the least. I’ve been dreaming about those days gone by when I started out in the data world. I would say my experience was not so much “breaking in”, but more of a “weasel my way into” Data Engineering.

I didn’t get a Computer Science degree, not even close. I think there are many ways to get into Data Engineering, it’s probably easier than it ever has been in the past. We will fulfill our destiny in different ways, and that journey gives us a unique perspective and makes us “good” at certain things. This is my story.

Scaling Airflow – Astronomer Vs Cloud Composer Vs Managed Workflows For Apache Airflow

Cloud Composer and MWAA (Managed Workflows for Apache Airflow) are two great options when it comes to starting your first Airflow project. They take care of a lot of issues, such as scaling workloads across workers and managing all the other components required to run Airflow at scale.

Of course, both Cloud Composer and MWAA are services provided by two of the larger cloud providers. But there is a third option called Astronomer.

In this article, I want to discuss some of the benefits and limitations of Cloud Composer and MWAA as well as discuss why Astronomer might be a better fit for many companies.

End Of Day 44

Thanks for checking out our community. We put out 3-4 newsletters a week discussing data, the modern data stack, tech, and start-ups.

If you want to learn more, sign up today. Signing up is free and keeps these newsletters coming.