Elevating Your Data Team: From Service Providers to Strategic Partners

It’s that time of year again.

CEOs, directors, and data teams are starting to plan out the following year.

It’s time to start putting together your data roadmap.

But where do you start?

How do you create a data strategy roadmap that focuses on what your team should be doing?

Especially if your data team has found itself stuck in the data service trap: simply providing data and dashboards when asked and never really becoming a strategic partner.

Now, for some companies, this is all that is needed: a service arm that provides some reports and numbers when asked. But if your team is either planning its current strategic data roadmap or wants to become a strategic partner, then now is the time to plan.

Over the past few weeks, I have been discussing the topic with several data leaders, both on my YouTube Lives and in private, and much of what they said has reinforced the methods I have picked up over the past decade.

Thus, I wanted to provide some tips to help those either in leadership positions or who want to break into these positions plan out their data roadmap for 2024.

Talk To People - Figure Out What They Need

In order to understand what is important to your end-users and business partners, you need to talk to people. I know, it's not everyone’s favorite thing to do in the tech world.

But when I talked with Ethan Aaron from Portable, he discussed how there wasn't a central data team when the company he had worked for got acquired. So suddenly, his new role was Head of BI. As he went out and talked to teams to figure out what needed to be done, the first thing he found was that marketing already had its own data tools, as did sales and operations. But the departments rarely shared data with each other, missing out on massive opportunities.

So one of the first major goals he identified was centralizing the data. That would give users access to multiple data sets, reduce duplicate costs and duplicate work on dashboards and reporting, and better align the data with the business.

All of which started with talking.

When I worked at Facebook, we’d spend time at the end of every half talking with our stakeholders to understand a few points:

What problems they were dealing with

What projects they were taking on

What projects they’d be interested in our team taking on

But we didn’t just ask questions; we also would put together a deck to cover some of our past projects as well as what we had already thought about in terms of projects we could take on.

This provided a tool for everyone to review and be inspired by. You shouldn’t just go asking for work, but also, sometimes, your stakeholders might need some help crystallizing the work they should be taking on.

In the end, this whole process should get you a clear set of pain points and projects that teams want solved.

Find The Pain Points

Once you have had these conversations, you can start figuring out which pain points and areas your team will focus on.

One thing I liked about what Ethan pointed out is that he’d recommend a data team only support a fixed number of metrics, and if the company wants to add metrics beyond that number, then an equal number of existing metrics needs to go.

Now, I am sure this isn’t a hard and fast rule, but I agree that it’s a good idea to push the business side to actually be diligent about the metrics they choose. Yes, you want to measure everything, but you can’t respond to everything. There are only so many employees on the business side that can actually execute on the findings from your dashboards and analysis.

So, as you converse and figure out the types of projects your team should work on, also figure out if you need to stop supporting other projects. I believe this was also brought up by Bethany Lyons on the Tech Bros Podcast (the idea of limiting what you deliver).

So create a top 10-20 hit list of projects, compare it to what you’re currently supporting, and figure out what actually makes sense to deliver.

At Facebook, we actually kept a running list of the projects people asked us to do, why they wanted it, the ROI, urgency, etc.

But we’d always do our best to rationally assign everyone a reasonable amount of work. Because everyone wants you to take their project on but not every project can be taken on.
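To make the running list a little more concrete, here is a minimal sketch of how such a list could be scored and cut down to what fits. Everything in it is an assumption for illustration: the field names, the 1-5 scales for ROI and urgency, the (ROI x urgency) / effort score, and the example projects. It is not how we tracked things at Facebook, just one simple way to force the trade-off conversation.

```python
from dataclasses import dataclass


@dataclass
class ProjectRequest:
    """One row in a running list of stakeholder requests (illustrative fields)."""
    name: str
    requester: str
    reason: str
    roi: int             # assumed 1-5 scale, higher means more value
    urgency: int         # assumed 1-5 scale, higher means more urgent
    effort_weeks: float  # rough estimate of team effort


def shortlist(requests, capacity_weeks):
    """Rank requests by (roi * urgency) / effort and accept what fits in capacity."""
    ranked = sorted(
        requests,
        key=lambda r: (r.roi * r.urgency) / r.effort_weeks,
        reverse=True,
    )
    accepted, deferred, used = [], [], 0.0
    for request in ranked:
        if used + request.effort_weeks <= capacity_weeks:
            accepted.append(request)
            used += request.effort_weeks
        else:
            deferred.append(request)
    return accepted, deferred


# Hypothetical backlog; the numbers are made up for the example.
backlog = [
    ProjectRequest("Churn dashboard", "Marketing", "Track retention", 4, 2, 6),
    ProjectRequest("Sales pipeline model", "Sales", "Forecast bookings", 5, 4, 10),
    ProjectRequest("Ops cost report", "Operations", "Cut duplicate spend", 3, 5, 3),
]

accepted, deferred = shortlist(backlog, capacity_weeks=14)
for request in accepted:
    print(f"Taking on: {request.name} (for {request.requester})")
for request in deferred:
    print(f"Deferring: {request.name} (for {request.requester})")
```

The score itself matters less than having the list written down. Once every request has a reason, a rough value, and a rough cost next to it, it is much easier to explain to a stakeholder why their project was deferred.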

Review Your Plan And Find A Champion

Another step we’d take at Facebook was to go through the full list of projects and confirm that each one had a clear champion on the other side who understood which projects we planned to take on and why.

This helps ensure that both the business and the champion are bought in. You shouldn’t just decide what work you believe is best to do and never review it with your stakeholders. Having them involved in the process improves trust and can help them further engage with the project.

Otherwise, you risk building a data strategy roadmap that only serves the data team. Sure, you’ll be working on fun projects, but you won’t actually be driving value.

That’s why it’s important to constantly communicate.

Communicate Communicate Communicate

You’ll often hear about the importance of soft skills in the tech world, and you’ll often hear that communication is one of those necessary soft skills.

But what in the world does that even mean?

Be good at communication?

Here is one place where it doesn’t have to be complicated.

As your team is going through the project, make sure you provide regular updates. You can provide weekly or bi-weekly (once every two weeks) updates via an email, meeting, or post.

At Facebook, this was easy because we basically had groups (the same way the actual Facebook app does), where I’d post my updates.

I’d post a few key points.

A quick reminder of what the project was and why we were doing it

The current status, on time, any delays, etc.

What tasks had been completed

What we were blocked on

Special thanks to those supporting the project and highlighting others’ work

This doesn’t have to be a long post. It is aimed at your main stakeholders (with whom you will likely also review progress in person) and ensures that the rest of your company (or at least the relevant teams) stays informed.

Although it might be nice to think that simply doing a good job will get noticed, it generally won’t in a company of thousands of employees. That makes communicating your project’s status and its eventual impact an important step.

But let’s take a step away from running the project and go back to planning out next year.

Realize That You Still Need To Maintain

As you’re doing all this planning and talking about new projects and value you want to deliver, consider this:

You still need to allocate some of your team's time to maintenance, one-off requests, and regular data team daily tasks. At Facebook, we found that about 20% of our time fell in these buckets.

Just because it’s exciting to build new things to polish up your resume doesn’t mean you won’t get the occasional broken Airflow DAG or ad hoc request.

Yes, your goal might not be to become a service organization. However, it’s nearly impossible to fend off every one-off data request. You, as the data team, are generally expected to deliver some level of support that might not always be available in the current system. (A recent chat with Abby from CrowdStrike brought up the point that having a Customer Success component to your analytics team is a must.) There will also be the occasional small project that needs to get done. Your team should be prepared for that.

Of course, it might just give you more reason to ask for headcount (if that’s required).
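To see what that maintenance share does to a roadmap, here is a rough back-of-the-envelope sketch. The 20% default comes from the Facebook figure above; the function name, the team size, and the number of working weeks are hypothetical values chosen only for the arithmetic.

```python
def plan_capacity(team_size, weeks, maintenance_pct=20):
    """Split total person-weeks into maintenance time and roadmap time."""
    total = team_size * weeks
    maintenance = total * maintenance_pct / 100
    return {
        "total": total,
        "maintenance": maintenance,
        "roadmap": total - maintenance,
    }


# Hypothetical team: 5 people, 48 working weeks in the year.
capacity = plan_capacity(team_size=5, weeks=48)
print(capacity)
```

With these made-up inputs, 48 of the team's 240 person-weeks go to maintenance and one-off requests, leaving 192 for roadmap projects. If your planned projects add up to more than that, something has to be cut or the headcount conversation has to happen.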

Planning Out 2024

It’ll be next year before you know it, and if your data team has become a service organization and you want to shift it to a strategic partner, now is the time.

You can start to talk to your stakeholders about their crucial pain points.

You can start to highlight your team's past projects and what you believe have been its biggest wins.

You can start to get buy-in for what you think your team can do to really drive value.

Now, I do want to add that sometimes, certain teams won’t ever be a strategic partner. Regardless of what the business says about wanting to become data-driven or data-focused, or whatever term gets spun up in the next few years, that’s just how it is.

Some companies can operate to an extent with minimal data. Maybe all they need is 3-4 key reports, and that's it. Nothing wrong with that.

But if you work for an organization trying to make a shift, this should be a great place to start.

Data Engineering And Machine Learning Summit 2023!

The Seattle Data Guy and Data Engineering Things are coming together to host the first Data Engineering And Machine Learning Summit on October 25th and 26th.

The purpose of this summit is to focus on the data practitioners who are actually doing the work at companies. Solving real problems with real solutions.

For example, here are just a few of the talks we have planned!

Sudhir Mallem - Centralized ETL framework for Decentralized teams at Uber

Jessica Iriarte - Optimizing oilfield operations using time-series sensor data

Kasia Rachuta - Overcoming challenges and pitfalls of A/B testing

Siegfried Eckstedt - Test-Driven Development for Your Data, Models and Pipelines

👩🏻💻 Mikiko Bazeley 👘 - MLOps Beyond LLMs

Joe Reis 🤓 - WTF Is Data Modeling

You’re definitely not going to want to miss this event!

Also, I want to give a very special thanks to our sponsors Decube and Onehouse! Their support is ensuring we can make this conference even bigger and better.

The Product Development Life-Cycle For Dashboards - With Abby Kai Liu

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

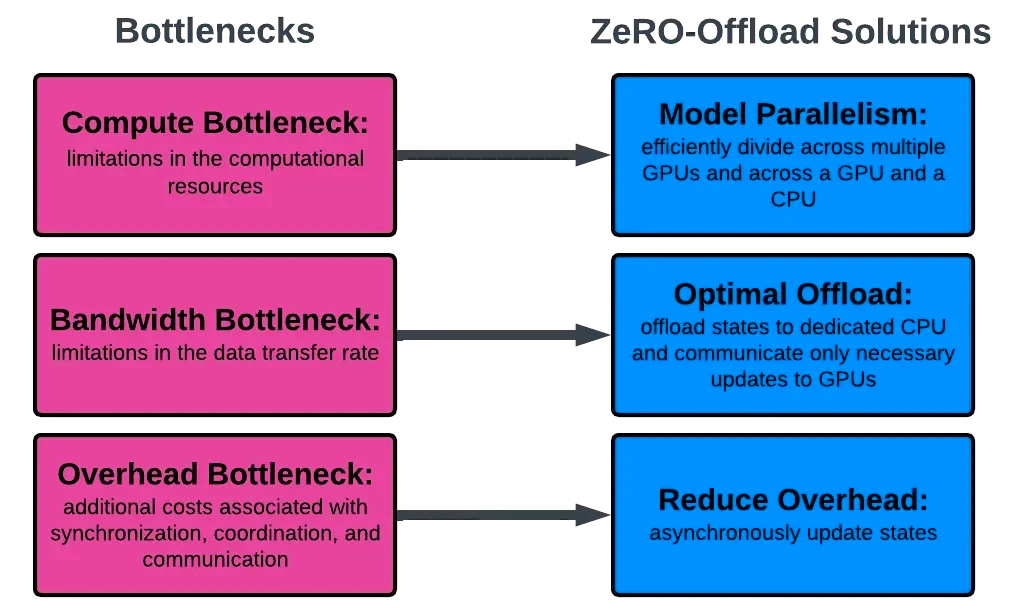

Performance bottlenecks in deploying LLMs—a primer for ML researchers

With the rise of Large Language Models (LLMs) come new challenges that must be addressed, especially when it comes to deploying these models at scale. Unlike traditional convolutional neural networks (CNNs) or recurrent neural networks (RNNs), LLMs require an enormous amount of computational power, memory, and data to train effectively. Learning to reason about systems-level design choices to accelerate the speed and efficiency of training is therefore critical for an ML researcher entering this space.

In this post, we explore problems involved in LLM deployment, from GPU shortages to bottlenecks in model performance. These problems have inspired recent developments in distributed training frameworks commonly used to train LLMs, notably ZeRO-Offload. Here we give an overview of ZeRO-Offload, and in future posts we describe its benefits in depth.

Mistakes I Have Seen When Data Teams Deploy Airflow

Airflow remains a popular choice when it comes to open-source orchestration tools. When I surveyed people about a year ago now, it was the most popular open-source solution, and still to this day, my video on “Should You Use Airflow” drives a lot of prospect conversations.

End Of Day 97

Thanks for checking out our community. We put out 3-4 newsletters a week discussing data, tech, and start-ups.

I empathize with the data team that’s spending 30% of their time in 2024 migrating to Python3