Working for data teams unavoidably creates horror stories that crystallize in the minds of those who lived through them.

Dropping production tables.

Terrible bosses who don’t understand anything about data.

Fragile infrastructure that is just asking for something to break.

Even the people I talked to who have only been working in the space for a few years have run into their fair share of challenging situations in the data field. Over the last few weeks, I have asked people to share their horror stories: times when data went wrong, or when simply working in the data industry was difficult enough to make them want to quit. I have kept most of them anonymous, as this article isn't meant to call anyone out.

In this article, you will read several of these data horror stories. Hopefully they don't bring back too many flashbacks.

Migration Led To Different Data Sets And The New Data Set Was Right?

Migrating data systems and infrastructure is a standard project many data engineers face. As teams switch from one system to another, it is also standard practice to run comparisons to make sure the data from both systems matches.

Usually, this surfaces some discrepancies in the new data set that require tweaking its logic until it matches the old data set. After all, the old data set should be right... right?
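A minimal sketch of such a comparison, assuming both systems can export rows keyed by a shared primary key. The `reconcile` function, the `id` key, and the sample rows are hypothetical. It reports keys missing from either side and, for shared keys, every column whose value differs. Note that a mismatch only tells you the two systems disagree, not which one is right.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]

def reconcile(old_rows: List[Row], new_rows: List[Row], key: str = "id") -> Dict[str, list]:
    """Compare two exports of the same table by primary key (hypothetical schema)."""
    old = {r[key]: r for r in old_rows}
    new = {r[key]: r for r in new_rows}
    only_old = sorted(old.keys() - new.keys())  # rows the migration dropped
    only_new = sorted(new.keys() - old.keys())  # rows that appeared only after migrating
    mismatched = []
    for k in sorted(old.keys() & new.keys()):
        # Collect every column whose value differs between the two systems.
        diffs = {
            col: (old[k].get(col), new[k].get(col))
            for col in old[k].keys() | new[k].keys()
            if old[k].get(col) != new[k].get(col)
        }
        if diffs:
            mismatched.append((k, diffs))
    return {"only_old": only_old, "only_new": only_new, "mismatched": mismatched}

# Hypothetical sample exports from the old and new systems.
old_export = [{"id": 1, "amount": 100}, {"id": 2, "amount": 250}]
new_export = [{"id": 1, "amount": 100}, {"id": 2, "amount": 205}, {"id": 3, "amount": 40}]
report = reconcile(old_export, new_export)
print(report)  # {'only_old': [], 'only_new': [3], 'mismatched': [(2, {'amount': (250, 205)})]}
```

The report deliberately stays neutral about which side is correct; deciding that still requires going back to the source data.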

In this case, this valiant data engineer rebuilt the backend of a government system where the data was provided by a third party. So far so good.

As they put it:

Turned it around quickly (Dremio was immense), and months down the line data validity was questioned after it was compared to the old system and the original text. - Anonymous LinkedIn user

The data didn’t match.

But upon deeper analysis, they discovered that the old data was never accurate to begin with (and the new data was). Now that's scary. They didn't tell me what the data was, but I do hope no major decisions were made based on it.

Their final point:

If ever a project demonstrated a need for data validation inline, using SodaSQL for example, this was it. - Anonymous LinkedIn user
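SodaSQL has its own configuration format, and the sketch below does not use it. It is a plain-Python illustration of the same idea: run a few checks on each batch before it is loaded, and stop the pipeline rather than load data that fails them. The `payments`-style columns (`amount`, `paid_at`), the rules, and the helper names are all hypothetical.

```python
from datetime import date
from typing import Any, Callable, Dict, List

class DataValidationError(Exception):
    """Raised when a batch fails inline checks, so the load step never runs."""

def validate_batch(rows: List[Dict[str, Any]], min_rows: int = 1) -> List[str]:
    """Return human-readable failures for a hypothetical payments batch."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for i, row in enumerate(rows):
        if row.get("amount") is None:
            failures.append(f"row {i}: amount is null")
        elif row["amount"] < 0:
            failures.append(f"row {i}: negative amount {row['amount']}")
        if row.get("paid_at") is not None and row["paid_at"] > date.today():
            failures.append(f"row {i}: paid_at {row['paid_at']} is in the future")
    return failures

def load_if_valid(rows: List[Dict[str, Any]], load_fn: Callable[[List[Dict[str, Any]]], None]) -> None:
    """Validate first; only call the loader when every check passes."""
    failures = validate_batch(rows)
    if failures:
        raise DataValidationError("; ".join(failures))
    load_fn(rows)

loaded: List[Dict[str, Any]] = []
good = [{"amount": 10.0, "paid_at": date(2022, 1, 3)}]
bad = [{"amount": None, "paid_at": date(2022, 1, 3)}, {"amount": -5, "paid_at": None}]

load_if_valid(good, loaded.extend)  # passes, so the rows are loaded
try:
    load_if_valid(bad, loaded.extend)  # fails, so nothing is loaded
except DataValidationError as exc:
    print("batch rejected:", exc)
```

Failing loudly before the load is the point: a rejected batch is an annoyance, while a silently loaded bad batch is what the story above describes.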

Data Works Even When It’s Wrong

Successful engineering is all about understanding how things break or fail.

Henry Petroski

The scary thing about data pipelines is that they can function perfectly fine even when the data coming out of them is no longer accurate. Sometimes the data seems to behave normally at first. Then, days or months later, a problem arises that you did not foresee (I have seen some interesting failures on leap years).
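As a hypothetical illustration of a leap-year failure (not one of the specific failures mentioned above): computing "the same day last year" with Python's `date.replace(year=...)` works on every other date and then raises `ValueError` on February 29, because the prior year has no such day. The function names are made up for this sketch, and falling back to February 28 is an assumed business rule; some teams would choose March 1 instead.

```python
from datetime import date

def same_day_last_year_naive(d: date) -> date:
    # Raises ValueError when d is Feb 29, because the prior year has no Feb 29.
    return d.replace(year=d.year - 1)

def same_day_last_year(d: date) -> date:
    try:
        return d.replace(year=d.year - 1)
    except ValueError:
        # For any year after 1, only Feb 29 can fail here.
        # Fall back to Feb 28 (an assumed business rule).
        return d.replace(year=d.year - 1, day=28)

print(same_day_last_year(date(2023, 3, 15)))  # 2022-03-15
print(same_day_last_year(date(2024, 2, 29)))  # 2023-02-28

try:
    same_day_last_year_naive(date(2024, 2, 29))
except ValueError as exc:
    print("naive version failed:", exc)
```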

Gleb, an ex-Lyft data engineer and the founder of Datafold, commented on my post with exactly that situation: he fixed one error and, in doing so, caused a new silent data issue.

He assumed he had fixed the problem because, hey, the code ran and the logic seemed to make sense. It doesn't help that many data pipeline systems don't include data quality checks in their CI/CD process and only check that the pipeline pushes data through. Airflow said the pipeline was successful, so it was successful, right?

Suddenly you start getting pinged by managers and downstream users saying your data looks weird. Off to the war room, and hopefully you can find the one-off error quickly!

You’re stressed, you’re unsure what went wrong and you’re praying you can fix the problem fast.

In the end, you have so much trauma from the event that you build an entire company around it.
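A green run in an orchestrator only tells you the code executed. One lightweight check that could run as a final step after a load is comparing the row count against recent runs. The sketch below is a hypothetical helper, not an Airflow feature; the 50% tolerance and the sample counts are assumptions chosen for illustration.

```python
from statistics import mean
from typing import List

def volume_looks_normal(history: List[int], today: int, tolerance: float = 0.5) -> bool:
    """Return False when today's row count strays more than `tolerance`
    (as a fraction of the baseline) from the mean of recent runs."""
    if not history:
        return True  # nothing to compare against yet
    baseline = mean(history)
    if baseline == 0:
        return today == 0
    return abs(today - baseline) / baseline <= tolerance

recent_counts = [10_120, 9_980, 10_240, 10_050]  # hypothetical prior runs
print(volume_looks_normal(recent_counts, 10_100))  # True
print(volume_looks_normal(recent_counts, 3_200))   # False: the job ran, but most rows are missing
```

A volume check like this catches only one class of silent failure (rows disappearing or duplicating); a fix that changes values without changing counts would still slip through.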

Not Great Bosses

If you have worked in any job, data or not, you’ve probably had bosses that weren’t great.

This can take many forms. It can be a boss who steals your ideas or one with unrealistic requests. I mean, when I worked at one restaurant, a chef asked why they were even paying me, the day after they had finally started paying me.

But there is another kind of difficult situation to be in.

One example is having a head of data who doesn't know how to drive initiatives forward or stand up for good data practices. Instead, many of the more granular data infrastructure decisions are being made by the CTO. For one engineer, this has led to a system that relies almost entirely on Google Sheets for data storage, no staging or raw layers (making it difficult for anyone to know how data went from raw to transformed), and other decisions that make it hard for them to do their job.

This would likely make anyone want to leave.

Finally, no collection of horror stories is complete without the classic: pushing changes to production.

Dropping A Production Table On Friday

Need I say more than the header? It's common practice to avoid touching production systems on Fridays. In fact, at Facebook, if you tried to push code past around 2 PM on a Friday, a cute little image of a fox would show up in an ominous red box with text that read something like:

“Are you sure you want to push code right now? Will you be around on Saturday if this blows up?”

Well, there is a good reason for this, as one solutions architect found out when they accidentally wiped out a calculated payments table in the production environment.

On Friday after 5 PM. At a bank.

It took 4-5 engineers and DBAs the rest of the weekend to figure out how to restore the data. They had to go through backups and attempt to recalculate the table, and each attempt took several hours.

All while the bank's production payment table did not exist.

We have all made honest mistakes, and I have heard plenty of similar stories where someone ran a drop script in production when they thought they were in the test environment.
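One lightweight safeguard against the "I thought I was in test" mistake, sketched here as a hypothetical helper rather than a feature of any particular database or tool, is to make destructive statements refuse to run against production unless the caller explicitly states they know they are in production. The `check_statement` name, the environment labels, and the regex are assumptions; the pattern is deliberately simple and will miss, for example, statements preceded by SQL comments.

```python
import re
from typing import Optional

# Matches statements that destroy data; a deliberately simple, hypothetical pattern.
DESTRUCTIVE = re.compile(r"^\s*(drop|truncate|delete)\b", re.IGNORECASE)

class UnsafeStatementError(Exception):
    """Raised instead of running destructive SQL against an unconfirmed prod connection."""

def check_statement(sql: str, connected_env: str, confirmed_env: Optional[str] = None) -> None:
    """Raise unless a destructive statement on prod was explicitly confirmed as prod."""
    if not DESTRUCTIVE.match(sql):
        return  # reads and inserts pass through untouched
    if connected_env == "prod" and confirmed_env != "prod":
        raise UnsafeStatementError(
            f"Refusing to run destructive SQL against {connected_env!r}; "
            f"caller believed it was {confirmed_env!r}"
        )

check_statement("SELECT * FROM payments", "prod")            # allowed: not destructive
check_statement("DROP TABLE payments_scratch", "test")       # allowed: not prod
try:
    # The operator thinks they are in test, but the connection is prod.
    check_statement("DROP TABLE payments", "prod", confirmed_env="test")
except UnsafeStatementError as exc:
    print(exc)
```

A guard like this only adds friction; backups that have actually been test-restored are still what gets a table back.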

In some cases, the data was never recovered.

The Trauma Continues

There are a lot of challenges and horror stories people will go through as they progress on their data journey. Even if you work at a place with great data infrastructure, perhaps you have a boss who doesn't support your work, or maybe the data quality has always been bad and everyone just deals with it.

That shouldn't scare anyone off. There are plenty of great data teams and smart people out there.

So good luck and feel free to share some of your own horror stories in the comments below!

Video Of The Week: Why You Shouldn't Become A Data Engineer - Picking The Right Data Career

Join My Data Engineering And Data Science Discord

Recently, my YouTube channel grew from 1.8k to 37k subscribers, and my email newsletter has grown from 2k to well over 15k.

Hopefully we will see even more growth this year. In the meantime, I have finally put together a Discord server. For now, this is mostly a soft opening.

I want to see what people end up using this server for. How it is used will in turn shape which channels, categories, and support are created in the future.

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that's just Medium! I have spent a lot of time sifting through these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

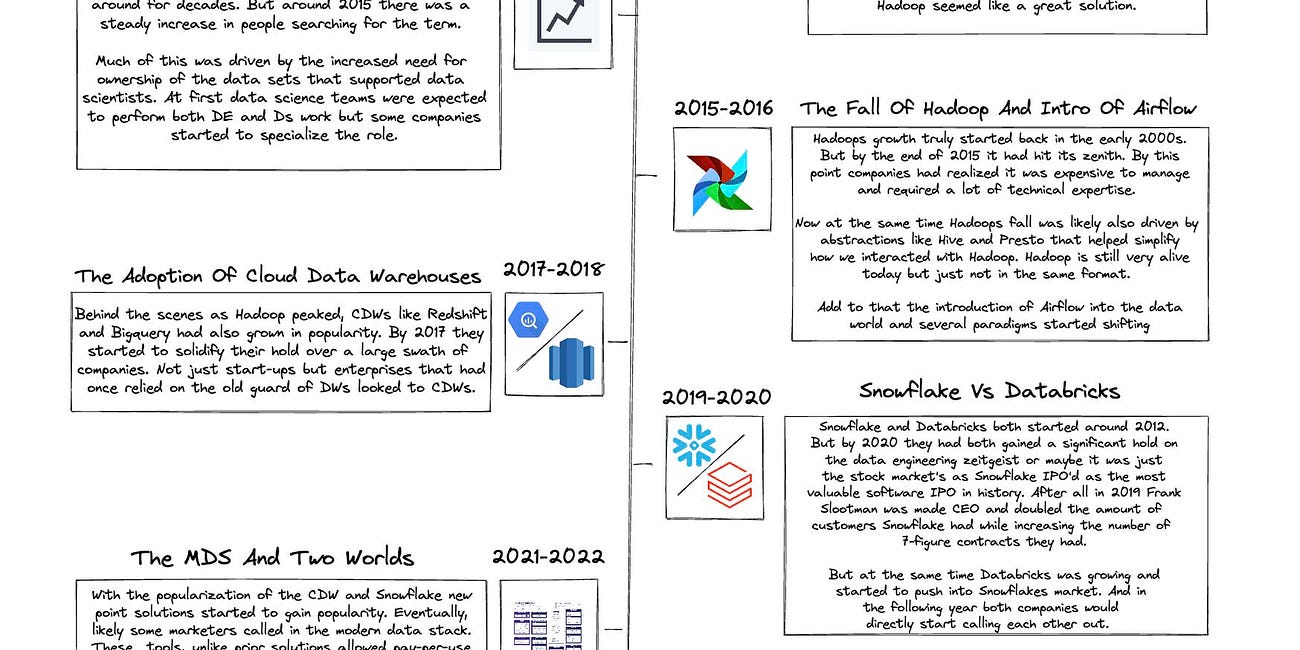

A Decade In Data Engineering - What Has Changed?

The concepts and skills data engineers need to know have been around for decades. However, the role itself has only really existed for a little over 10 years, with companies like Facebook, Netflix, and Google leading the charge. Throughout those ten years, there were significant breakthroughs, and tools that, through hype and general acceptance, became standard (even if only for a few years). Each of these tools and practices shifted how we as data engineers operate.

Data Mesh — A Data Movement and Processing Platform @ Netflix

Realtime processing technologies (A.K.A stream processing) is one of the key factors that enable Netflix to maintain its leading position in the competition of entertaining our users. Our previous generation of streaming pipeline solution Keystone has a proven track record of serving multiple of our key business needs. However, as we expand our offerings and try out new ideas, there’s a growing need to unlock other emerging use cases that were not yet covered by Keystone. After evaluating the options, the team has decided to create Data Mesh as our next generation data pipeline solution.

Last year we wrote a blog post about how Data Mesh helped our Studio team enable data movement use cases. A year has passed, Data Mesh has reached its first major milestone and its scope keeps increasing. As a growing number of use cases on board to it, we have a lot more to share. We will deliver a series of articles that cover different aspects of Data Mesh and what we have learned from our journey. This article gives an overview of the system. The following ones will dive deeper into different aspects of it.

Visualizing Baseball Statistics with Apache Pinot and Redash

Analytics databases and data visualization are like hand in glove, adding tremendous value when used together. SQL queries return numbers, whereas visualizations make those numbers easy to digest at first glance, confirming the proverbial picture speaks a thousand words.

Apache Pinot, a real-time OLAP database, works with numerous BI tools to produce beautiful visualizations, including Apache Superset and Tableau. This article explores how Pinot can be integrated with Redash, another popular open-source tool for data visualization and BI.

3 Tips for Implementing a Future-Ready Data Security Platform

As data storage and analysis continue to migrate from on-premises to the cloud, the market for cloud data security platforms has expanded in response. In fact, the global cloud security market is projected to grow from $29.3 billion in 2021 to $106 billion by 2029. This highlights a north star for the future of successful data use: adequately protecting your organization’s sensitive data is imperative.

The number of pertinent data security platforms is growing, and it’s important that your organization’s data team evaluates which solution will be best for its needs. In this blog, we examine the insights from Gartner’s Innovation Insight for Data Security Platforms report and take a look at some best practices for choosing the right DSP for your organization.

End Of Day 49

Thanks for checking out our community. We put out 3-4 newsletters a week discussing data, modern data infrastructure, tech, and start-ups.

If you want to learn more, sign up today. It's free, and you'll keep getting these newsletters.

I help co-organize an event where people share their data horror stories, and I think you might like it. Check out datamishapsnight.com :)