Becoming a Data Engineering Force Multiplier

By Daniel Beach, author of Data Engineering Central

As the landscape becomes increasingly data-driven, fueled by the rise of ML and AI, the importance of data engineering as a function continues to climb steadily year after year. Organizations now rely heavily on data to make every decision; data has become a requirement across every business unit, with the desire for more never stopping.

However, collecting, processing, and analyzing data is never clean, clear, or easy. That's where data engineering comes in. Data engineers have become the backbone for building and maintaining the infrastructure and tools necessary to collect, store, and process the increasing size and velocity of data being produced every second. There has never been a better time to become a data engineer or take your data engineering career to the next level.

In this blog post, we'll explore how you can become a data engineering force multiplier and significantly impact your organization's data-driven initiatives.

We will start by listing the skills that make a data engineering force multiplier, and then unpack each one. The list is split into two sub-lists: technical and non-technical.

Technical

Architecture and technology understanding

Above-average programming skills

Good understanding of CI/CD and automation

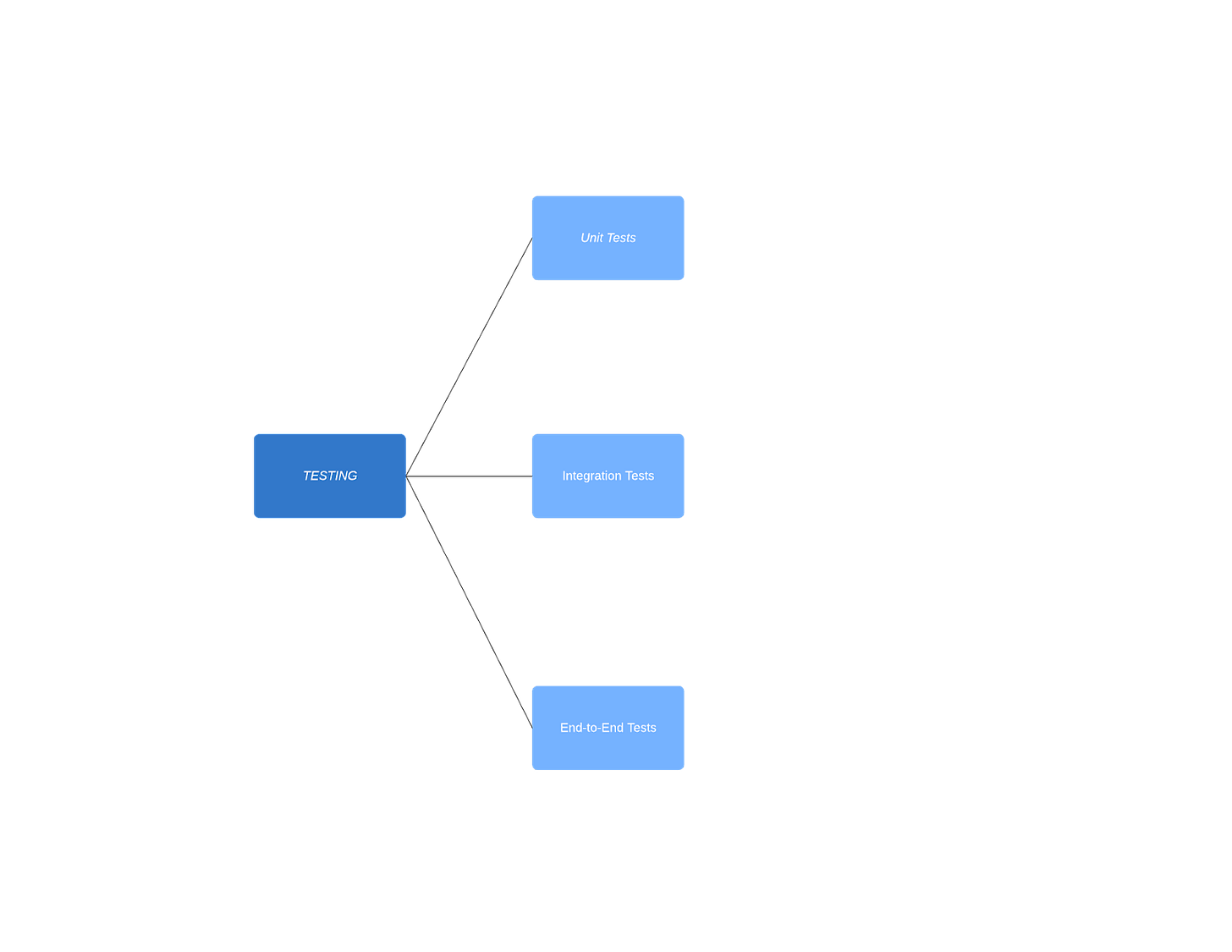

Good understanding of testing (unit and integration)

Non-Technical

Able to teach and upskill others

Long-term project planning and execution

Communication skills inside/outside engineering

Technical Engineering Skills - Force Multipliers

Let’s first dive into the technical aspects of becoming a data engineering force multiplier. Although we can all agree that it takes a lot more than good technical skills to become a senior engineer, it’s pretty obvious that we must be able to perform at high levels and show competence in multiple areas:

Architecture

Data Modeling

Coding/Programming

Testing

CI/CD

Architecture

A well-known topic close to the heart of data teams is architecture. With the size and scale of data today, being able to have data systems that can handle large data sets is critical to the usability and reliability of data pipelines. Often, this skill sets apart a data engineering force multiplier from a more junior engineer for the following reasons:

Good architecture requires context and knowledge about constraints, data types and volumes, and other technology currently in use.

Understanding what combination of tools and features can implement robust and scalable data processing systems.

How data architecture can support the organization's data needs and ensure data quality and security.

Understanding bottlenecks, tradeoffs, and breakpoints at a high level, and being able to verbalize and foresee problem areas.

For a data engineering force multiplier, architecture is about the bigger picture: being able to lift your head above the weeds and your favorite tools, and ask the right questions. The ability to question everything, dive in, and do research, yet still pull back and see how the details fit into the big picture, is a rare skill that takes time to cultivate.

Looking for some next steps on how to become a better architect in the Data Engineering space? Here are some resources:

Fundamentals of Data Engineering: Plan and Build Robust Data Systems book by Joe Reis and Matt Housley.

Designing Data-Intensive Applications book by Martin Kleppmann.

Never underestimate the skill of being a good architect as a data engineer. Being able to design data systems and understand real-world tradeoffs is a valuable and unique skill.

Data Modeling

Data modeling is one of those popular topics that everyone likes to talk about, but it also has a myriad of approaches that depend a lot on the technical context. The process of creating a conceptual representation of the data structure and relationships between data entities in a system is a core technical skill for any data engineer. Being a great data modeler is another must-have skill to be a data engineering force multiplier.

Why?

Because a data model can make or break a data stack: an incorrect or inefficient model will sink an otherwise good stack, while a well-designed model can lift a mediocre one.

Data modeling is essential for designing efficient data storage and retrieval systems, but it also relies heavily on the context of the underlying technology; for example, modeling for Delta Lake differs from modeling for Postgres. A data engineer must be skilled in data modeling techniques to design a schema that supports the data requirements and business rules of the organization.

Data modeling requires fundamental knowledge of data structures and tradeoffs.

Data modeling requires knowledge of data access patterns, which requires research.

Knowing the difference a data model makes for analytics vs. transactional needs is core.

Data modeling is half science and half art; experience plays a big role.
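To make the analytics-versus-transactional point a little more concrete, here is a minimal sketch of a star-schema style model using Python's built-in sqlite3 module. The table names (dim_product, fact_sales), the columns, and the sample rows are all hypothetical and chosen only for illustration; a real model depends on your own access patterns and storage technology.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with one dimension and one fact table.

    All table names, columns, and rows are made up for illustration.
    """
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_product (
            product_id INTEGER PRIMARY KEY,
            category   TEXT NOT NULL
        );
        CREATE TABLE fact_sales (
            sale_id    INTEGER PRIMARY KEY,
            product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
            sale_month TEXT NOT NULL,   -- e.g. '2023-01'
            amount     REAL NOT NULL
        );
        """
    )
    conn.executemany(
        "INSERT INTO dim_product VALUES (?, ?)",
        [(1, "books"), (2, "games")],
    )
    conn.executemany(
        "INSERT INTO fact_sales (product_id, sale_month, amount) VALUES (?, ?, ?)",
        [(1, "2023-01", 10.0), (1, "2023-01", 15.0),
         (2, "2023-01", 40.0), (2, "2023-02", 5.0)],
    )
    return conn


def monthly_sales_by_category(conn: sqlite3.Connection) -> list:
    """Typical analytics access pattern: join the fact to a dimension and aggregate."""
    return conn.execute(
        """
        SELECT d.category, f.sale_month, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_id = f.product_id
        GROUP BY d.category, f.sale_month
        ORDER BY d.category, f.sale_month
        """
    ).fetchall()
```

The fact table stays narrow and append-friendly while descriptive attributes live in the dimension, which is one common way to serve aggregate queries. A transactional workload with many single-row updates might call for a different shape entirely.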

Interested in getting a greater understanding of data modeling? There is a critical lack of modern material on data modeling, but here are some resources to consider:

The Data Warehouse Toolkit by Ralph Kimball.

Grokking Algorithms by Aditya Bhargava.

To be a data engineering force multiplier, a good data engineer must be able to understand data access patterns, use cases, requirements, and technology. Putting all this knowledge together and producing an efficient and usable data model that can scale is what can set someone apart.

Coding/Programming

Although this can be a sensitive topic in the era of LeetCode interviews and backlash, it’s still true that being a data engineering force multiplier would be difficult without being above average at writing code.

Even in the No-Code and Low-Code marketing age, it’s clear there is still a major place for efficient programming skills in data engineering.

Data engineers must be proficient in languages such as Python, Java, Go, Rust, and SQL. So many data transformations rely on custom code that knowledge of best practices and good programming skills can lift an entire team.

Writing clean, simple, and performant code is the sign of a senior engineer.

Understanding basic OOP, functional, imperative, and other approaches, their tradeoffs, and their uses is an important skill.

Showing other engineers the value of good code, and how to write it, is a game changer that makes everyone’s life easier.

Data engineers use coding daily to build ETL (Extract, Transform, Load) pipelines, integrate various data sources, perform data cleaning and transformation, and build custom data solutions for the organization. Understanding basic SWE concepts and applying them to a data stack can have an outsized positive impact.
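As one possible illustration of applying basic software engineering concepts to a transformation step, the sketch below keeps the cleaning logic in small, pure functions with no file or database access, so they are easy to read, reuse, and test. The Order record, its field names, and the cleaning rules are hypothetical assumptions, not something prescribed by any particular pipeline.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Order:
    """Hypothetical cleaned order record."""
    order_id: str
    amount_cents: int
    currency: str


def parse_order(raw: dict) -> Order:
    """Normalize one raw row: trim the id, convert to cents, upper-case the currency."""
    return Order(
        order_id=str(raw["order_id"]).strip(),
        amount_cents=round(float(raw["amount"]) * 100),
        currency=str(raw.get("currency", "USD")).upper(),
    )


def clean_orders(raw_rows: Iterable[dict]) -> Iterator[Order]:
    """Yield parsed orders, skipping duplicate ids and non-positive amounts."""
    seen = set()
    for raw in raw_rows:
        order = parse_order(raw)
        if order.order_id in seen or order.amount_cents <= 0:
            continue
        seen.add(order.order_id)
        yield order
```

Because neither function touches storage, the same logic can be fed from a CSV reader, an API response, or a test fixture without modification.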

Want to get better at the art of programming? Here are a few resources:

The Pragmatic Programmer by David Thomas and Andrew Hunt.

Python 101 by Michael Driscoll.

Clean Code: A Handbook of Agile Software Craftsmanship by Robert C. Martin.

A data engineer who is also an excellent programmer is a rare find. Learning your craft well and having a passion and desire to write clean, performant code will make you a data engineering force multiplier on your team and codebase. It will not go unnoticed.

Testing

Testing, sadly, has been slow to become a mainstream topic in data engineering. To this day, many data teams, perhaps the majority, still lack even the basic ability to unit test their code. Few things will slow down the development process and make it more dangerous, stressful, and error-prone than a data stack without testing.

Testing is crucial for ensuring data engineering pipelines and systems are working properly. Data engineers must perform various types of testing, including unit testing, to ensure data quality, accuracy, and completeness and avoid pushing human error and obvious bugs to production.

The ability to run unit tests on a data repo will increase developer performance.

Writing code that can be unit-tested will make for much better code and readability.

Debugging and finding errors will become less complex and take less time.

Being the engineer who pushes for testing will increase your visibility and improve how others perceive you.

The ability to run end-to-end integration tests on a data platform is next-level data engineering.

A data engineering force multiplier will think about testing from the beginning. Both unit and integration tests can transform teams from constantly breaking and fixing, to efficient problem solvers who are always adding new features with confidence.
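For readers who have not yet unit tested pipeline code, here is a small sketch using Python's standard unittest module. The function latest_per_key and its record layout are hypothetical examples of the kind of transformation logic worth testing; the point is only that logic written as a plain function can be verified in isolation.

```python
import unittest


def latest_per_key(records):
    """Keep only the most recent record for each id (on ties, the later row wins)."""
    latest = {}
    for rec in records:
        current = latest.get(rec["id"])
        if current is None or rec["updated_at"] >= current["updated_at"]:
            latest[rec["id"]] = rec
    return sorted(latest.values(), key=lambda r: r["id"])


class LatestPerKeyTest(unittest.TestCase):
    def test_keeps_most_recent_record(self):
        records = [
            {"id": 1, "updated_at": "2023-01-01", "status": "new"},
            {"id": 1, "updated_at": "2023-01-05", "status": "shipped"},
            {"id": 2, "updated_at": "2023-01-02", "status": "new"},
        ]
        result = latest_per_key(records)
        self.assertEqual([r["status"] for r in result], ["shipped", "new"])

    def test_empty_input_returns_empty_list(self):
        self.assertEqual(latest_per_key([]), [])


def run_suite() -> unittest.TestResult:
    """Run the tests above programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LatestPerKeyTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Saved to a file, the same tests can also be discovered and run with `python -m unittest`, which is usually how they would run locally or in automation.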

CI/CD

Probably one of the most under-utilized software engineering best practices in the data engineering space is CI/CD. Good practices around automation have an outsized effect on many data teams because those teams are often undersized, overworked, and lacking cloud engineering support.

Continuous Integration and Continuous Deployment (CI/CD) is a process of automating the testing and deployment of code changes. A data engineer must be familiar with CI/CD tools and techniques to automate the testing and deployment of data pipelines, helping to ensure that the data is accurate, up-to-date, and accessible to the organization in a timely manner.

CI/CD in a data engineering context can help teams do more with fewer resources.

CI/CD-driven data workflows tend to produce better quality code and pipelines.

CI/CD implemented well will reduce the number of bugs and human errors that make it into production.

The best part about a data engineer who can implement CI/CD is that they save the team and company time and money. Automating much of the process around testing and deployment reduces bugs and frees teams to function at a higher and more productive level. Additionally, these engineers become well-versed in areas like configuration, version control with Git, workflows, deployments, and other complex topics that only expand their expertise and experience.

Non-Technical Skills - Force Multipliers

Along with all the technical expertise listed above, it’s well known that technical skills by themselves don’t make senior engineers perform and deliver at high levels. Many would argue that, in fact, it’s the non-technical skills that set a data engineering force multiplier apart from the larger group.

Let’s discuss some of these important non-technical skills:

Able to teach and upskill others

Long-term project planning and execution

Communication skills inside/outside engineering

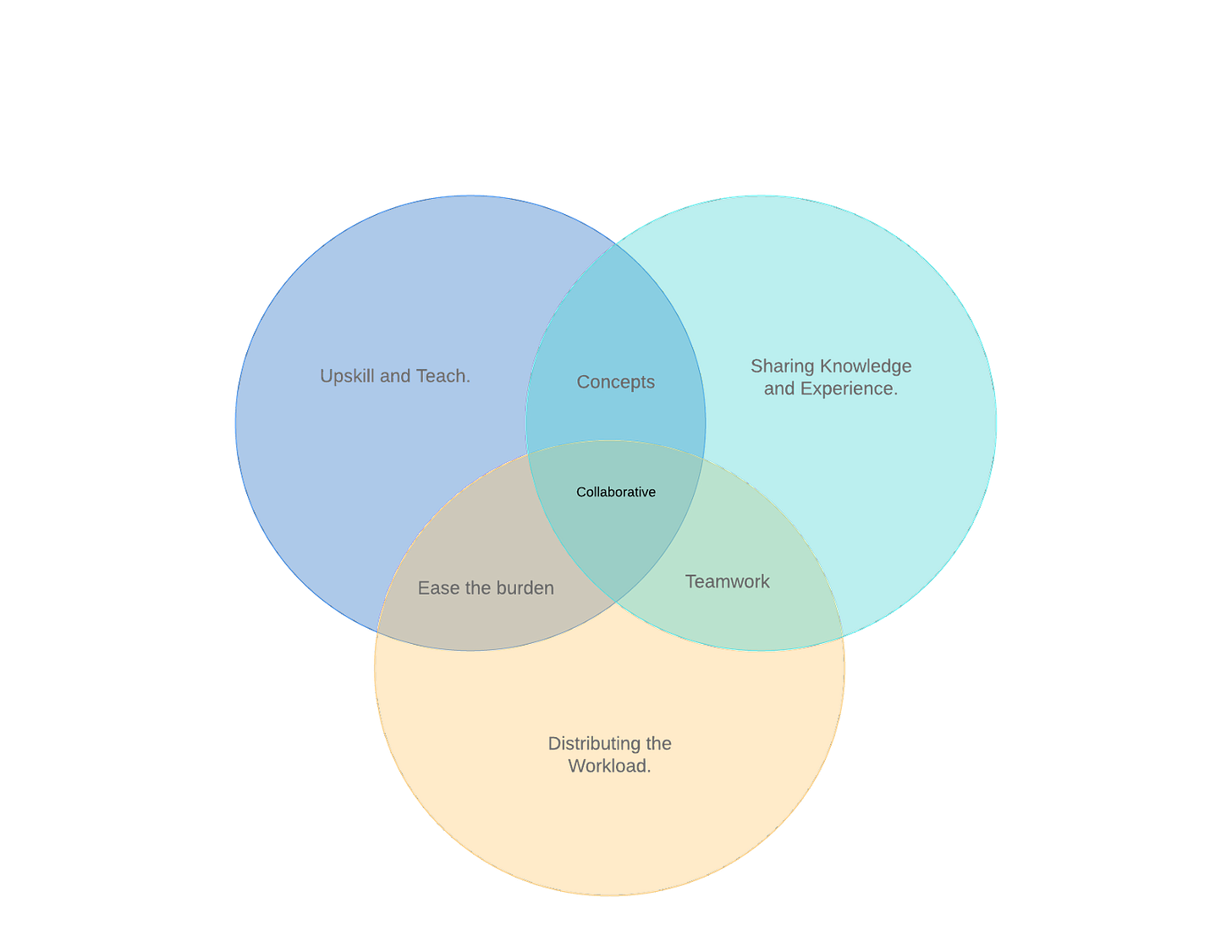

Able to Teach and Upskill Others

Probably number one on the list of skills that make a data engineer a force multiplier is the ability to teach and upskill the more junior members of the data team. It’s been known for some time that having a single “A-team” member who performs at a high level is helpful, but over the long term it’s not scalable.

People leave companies and jobs, and having all knowledge and skill concentrated in a single person introduces a single point of failure. It’s much better to have a team of skilled workers who can function together to produce the best results.

Deepening understanding across the team

Sharing knowledge and experience

Distributing the workload

Deepening Understanding

When data engineers teach others, they have to articulate and explain concepts and technologies, which requires a deeper understanding of the subject matter. This process helps data engineers to clarify their understanding and identify areas where they need to improve their knowledge.

The process of understanding helps junior engineers pick up critical thinking skills

The team as a whole can solve more complex problems more quickly

Better-designed and more performant data pipelines are a result

Sharing knowledge

Data engineering is a collaborative field, and sharing knowledge and expertise with others is essential for success. When data engineers teach and upskill others, they become better collaborators and more effective team members. Many times, senior engineers have the tendency to hoard knowledge, depending on the work and culture environment they have experienced. This leads to breakdowns and problems across a data organization.

Fewer problems require “the expert” to solve them

This knowledge moves a junior engineer to a senior level

Collaboration becomes a commonplace activity

Teams become stronger and work together better

It’s less likely that certain persons get “stuck.”

A data engineering force multiplier lifts an entire team and department by simply sharing their knowledge. Everyone produces better work and can emulate and grow professionally due to a single senior engineer who can freely and openly share their experience and knowledge.

Distributing the Workload

Teaching and upskilling others also allows data engineers to learn from their students. Students may bring new perspectives, questions, and challenges that can help senior data engineers think about problems in new ways and learn from their experiences. In turn, this allows the workload to be spread across different people, keeping senior engineers from getting burned out and giving junior engineers room to grow and learn.

Burnout will happen if only one person is doing all the “hard” work.

Other engineers will not grow if they are not given the chance to work on hard problems.

More work will get done in the long term.

More engineers will be able to work on a variety of problems.

A senior engineer who can lift an entire team by allowing more junior engineers to work on difficult problems, and coach them through those experiences, will enable long-term benefits for data teams. This is positive for everyone involved. Junior engineers will grow their skills more quickly, and senior engineers will feel less pressure to “take all the hard and complex tasks.”

Long-Term Planning and Project Management

Long-term planning and project management are critical aspects of data engineering because they help ensure the successful implementation and operation of data infrastructure and systems. It’s one thing to write good code or tests and plow through the endless list of JIRA tasks; it’s an entirely different skill to step back, look at the big picture and company goals, and visualize and execute against large, long-term projects.

This plays out in several areas:

Resource allocation

Time allocation

Balancing many competing priorities

Making hard decisions

Breaking big projects down into smaller-sized tasks

The strength of a data engineering force multiplier comes from the fact that they can not only execute individual tasks but also see the bigger picture and long-term goals. The ability to map a single large data migration down into several projects, with their interdependencies, and even further into weekly tasks and subtasks, is a skill that is learned with time and experience.

Knowing where, when, and how to make tradeoffs given the technology, resource, and time constraints, takes an ability to back away from the small daily tasks of writing code and view from a larger window that includes other groups, projects, and constraints.

Many data engineers will try to stay out of these “product” driven decisions and projects, but that is not a good long-term plan. Force multipliers can be trusted with large projects, and they can use their technical and communication skills to accurately break down those projects into manageable chunks of work.

This leads nicely into the last non-technical skill of communication.

Communication skills inside/outside Engineering

Last but not least, we come to the most dreaded of engineering topics: communication. It’s not uncommon to find that many engineers feel themselves to be introverted, and therefore not well suited to “speaking” or leading. However, this view reduces communication to simply giving a presentation. We are talking about something more personal than presenting to a room full of stakeholders, although that is an important skill.

Data engineering force multipliers find that communication in the following areas and ways enables data teams to be more effective:

Improving communication within engineering, bringing people and teams together

Resolving technical conflicts

Translating product needs back to engineering

Sharing technical challenges of engineering back to product

The ability to communicate complex topics and ideas in an approachable manner

Making sure engineering has a voice that is heard

Rather than thinking of communication as giving presentations, think of it as the ability to express ideas and constraints cohesively and coherently. This comes from practice and is not scary or intimidating at all; it’s simply being fully informed and ready to express ideas and opinions in a way that others can receive and understand.

Often, this takes the form of being the “go-between” for engineering and other groups in the business, along with being someone who can “keep the peace” and help mediate charged discussions between engineers or different engineering teams. This can help bring about an agreement or concession.

The data engineering force multiplier can step back from emotions, examine all ideas based on their merits, and communicate those findings clearly and concisely to technical and non-technical audiences.

Get Access To Apache Iceberg's Chapter One For Free

Apache Iceberg provides the capabilities, performance, scalability, and savings that fulfill the promise of an open data lakehouse. By following the lessons in this book, you’ll be able to achieve interactive, batch, machine learning, and streaming analytics, all without duplicating data into many different proprietary systems and formats. Authors Tomer Shiran, Jason Hughes, Alex Merced, and Dipankar Mazumdar from Dremio show you how to achieve this.

Articles Worth Reading

There are 20,000 new articles posted on Medium daily, and that’s just Medium! I have spent a lot of time sifting through some of these articles, as well as TechCrunch and company tech blogs, and wanted to share some of my favorites!

Data Modeling Where Theory Meets Reality

Data modeling varies at different companies. Even companies that all say they are using dimensional modeling or at least use terms like facts and dims might differ in the way they implement it. Over the past near decade, I have worked for and with different companies that have used various methods to capture this data. I wanted to review some of the techn…

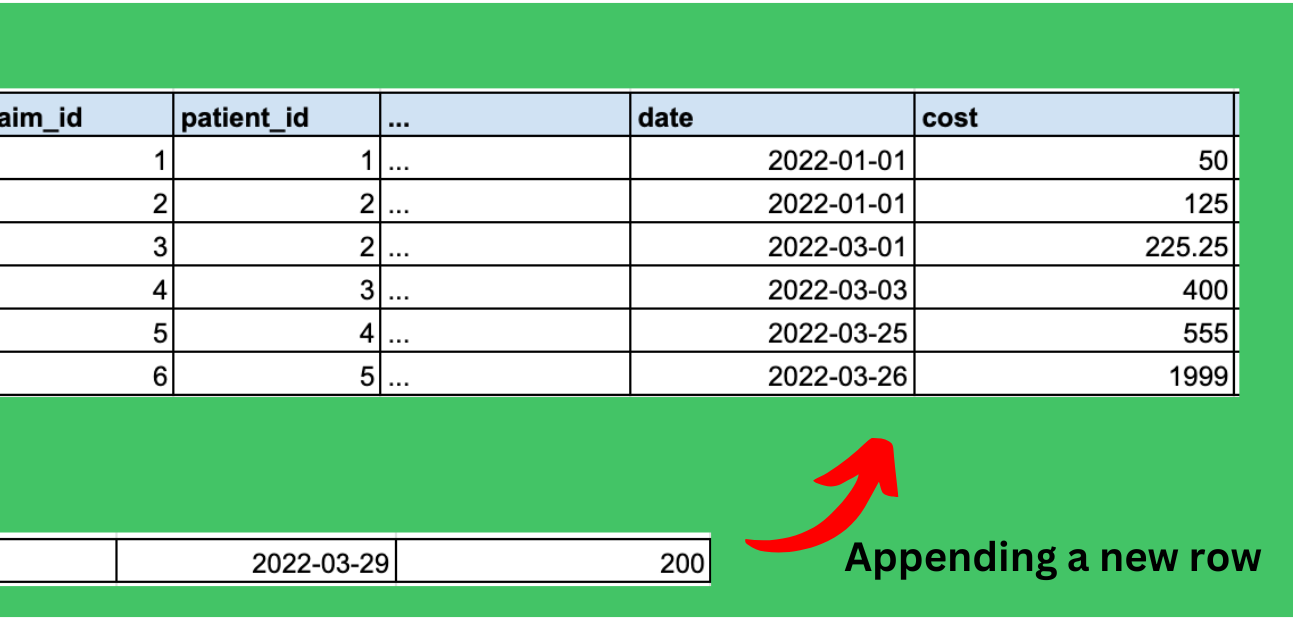

I Asked ChatGPT to Build a Data Pipeline, and Then I Ran It

There are several articles online about “I asked ChatGPT to <fill in the blank>”, ranging from code creation to side hustles. Since my work primarily focuses on code creation and data engineering, I wanted to see if ChatGPT could build a data pipeline from scratch.

So for this article, I asked ChatGPT (GPT-4 specifically) to create a pipeline using some fake data I made, with the end goal being an aggregated table in Databricks to be used as a monthly sales report.

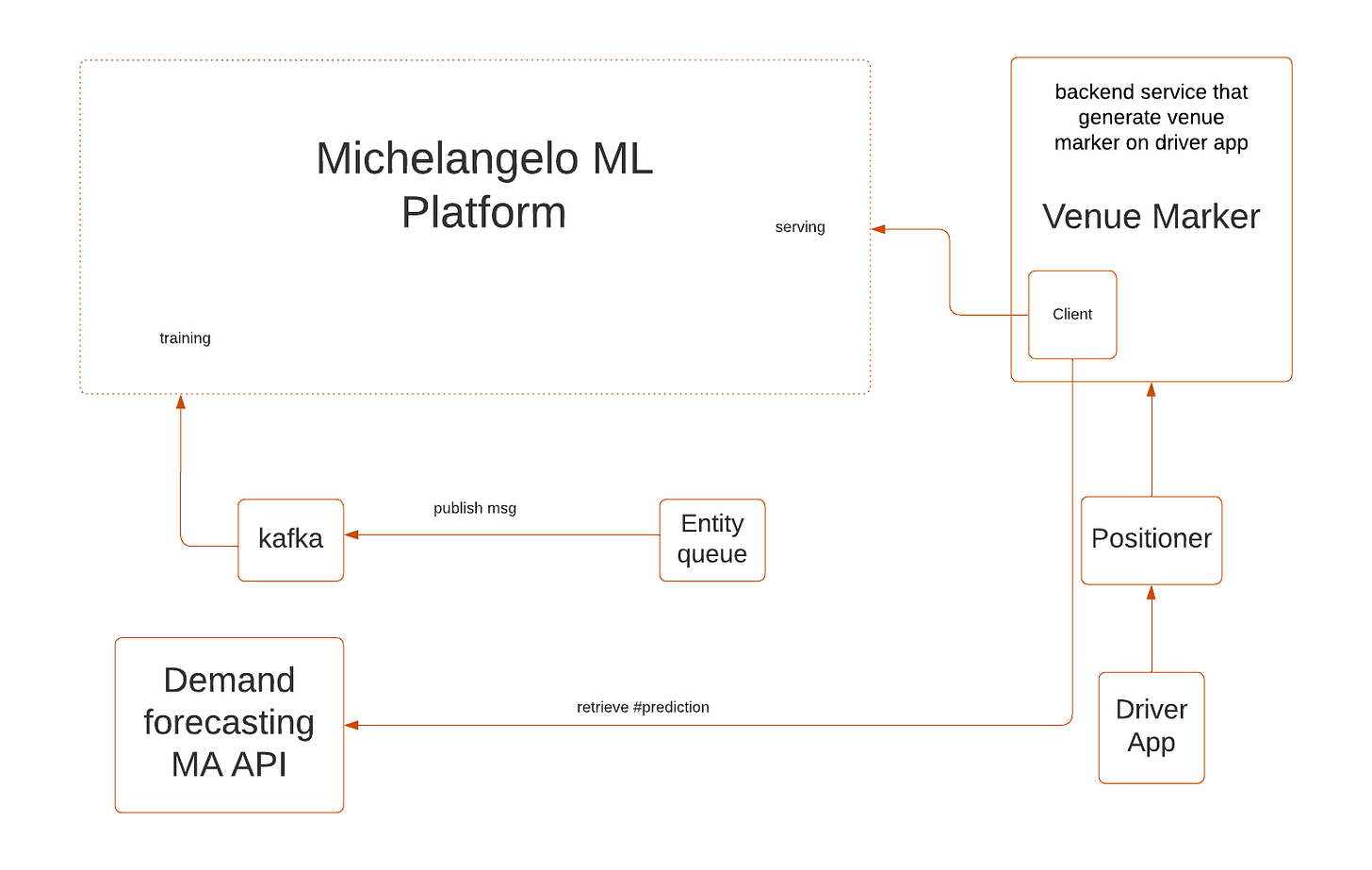

Demand and ETR Forecasting at Airports

Airports currently hold a significant portion of Uber’s supply and open supply hours (i.e., supply that is not utilized, but open for dispatch) across the globe. At most airports, drivers are obligated to join a “first-in-first-out” (FIFO) queue from which they are dispatched. When the demand for trips is high relative to the supply of drivers in the queue (“undersupply”), this queue moves quickly and wait times for drivers can be quite low. However, when demand is low relative to the amount of available supply (“oversupply”), the queue moves slowly and wait times can be very high. Undersupply creates a poor experience for riders, as they are less likely to get a suitable ride. On the other hand, oversupply creates a poor experience for drivers as they are spending more time waiting for each ride and less time driving. What’s more, drivers don’t currently have a way to see when airports are under- or over-supplied, which perpetuates this problem.

End Of Day 80

Thanks for checking out our community. We put out 3-4 Newsletters a week discussing data, tech, and start-ups.
